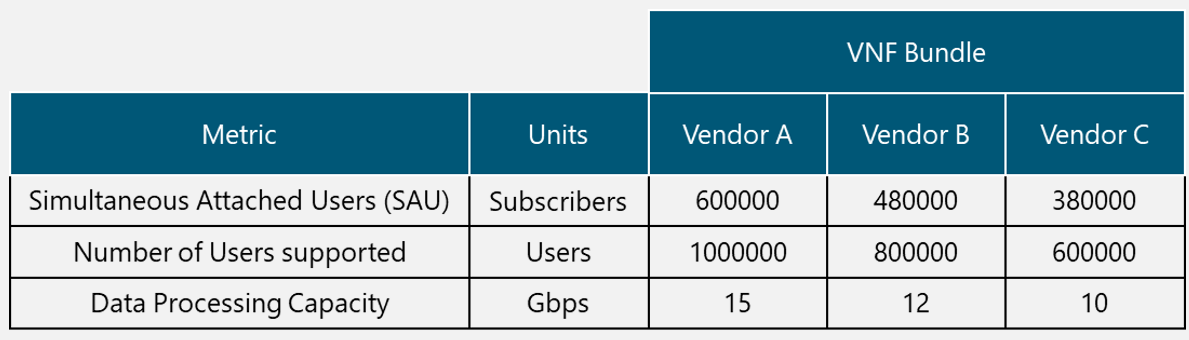

Network as a Service (NaaS) PlayBook

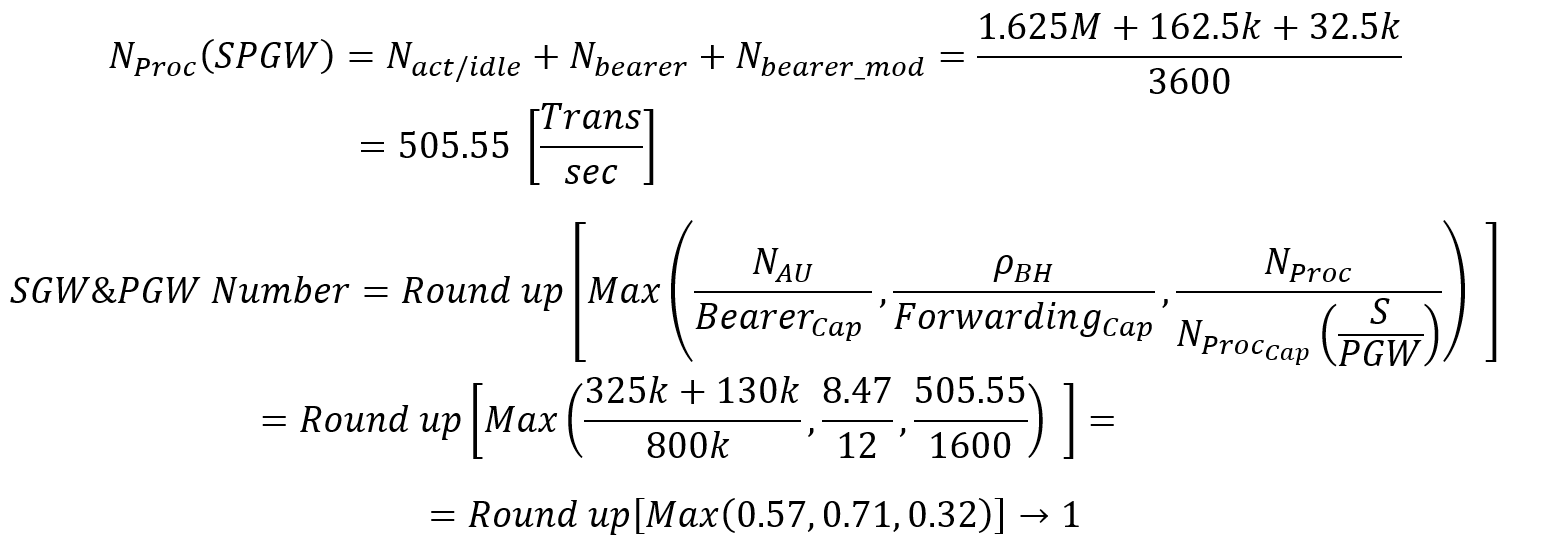

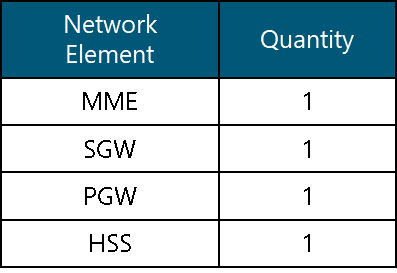

1 Tx Network High-Level Design Introduction

The Tx Network High-Level Design (HLD) module provides the NaaS operator with background information and methodologies to develop an HLD for a Tx network. It provides guidelines on how to design the transport network that interconnects disparate networks, including a radio access network (RAN) and data centers. It further explains how to transform these guidelines into the actual design parameters necessary for Tx network HLD development.

The main output of this module is the Tx network HLD that includes, among others, the transport design that specifies physical and logical topology, the transport solution to be implemented, and a high-level bill of quantities (BOQ). The business case can be analyzed with this information. This module will guide the NaaS operator through the process of generating a technically compliant HLD to speed decision-making and the related deployment process.

1.1 Module Objectives

This module will enable a NaaS operator to stand up, run, and manage a Tx network HLD initiative. The specific objectives of this module are to:

- Provide an information base and fundamentals to perform tasks associated with Tx network HLD.

- Provide detailed how-to instruction regarding key HLD engineering tasks.

- Provide an overview of the end-to-end Tx network HLD process, with instructions for tailoring it to specific NaaS environments.

- Provide guidelines to develop a formal Tx network HLD recommendation.

1.2 Module Framework

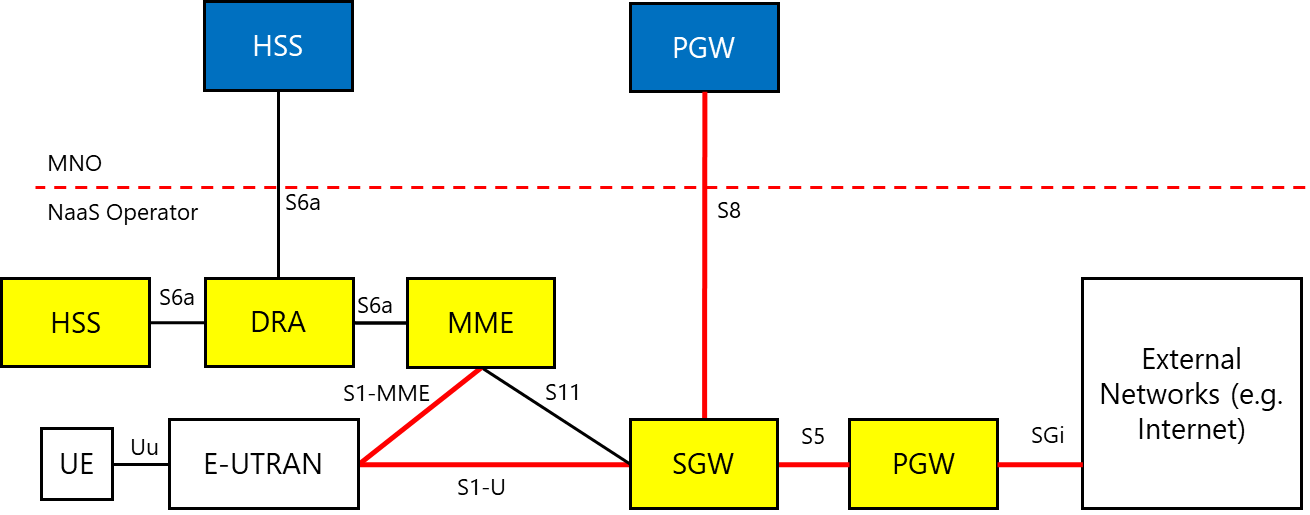

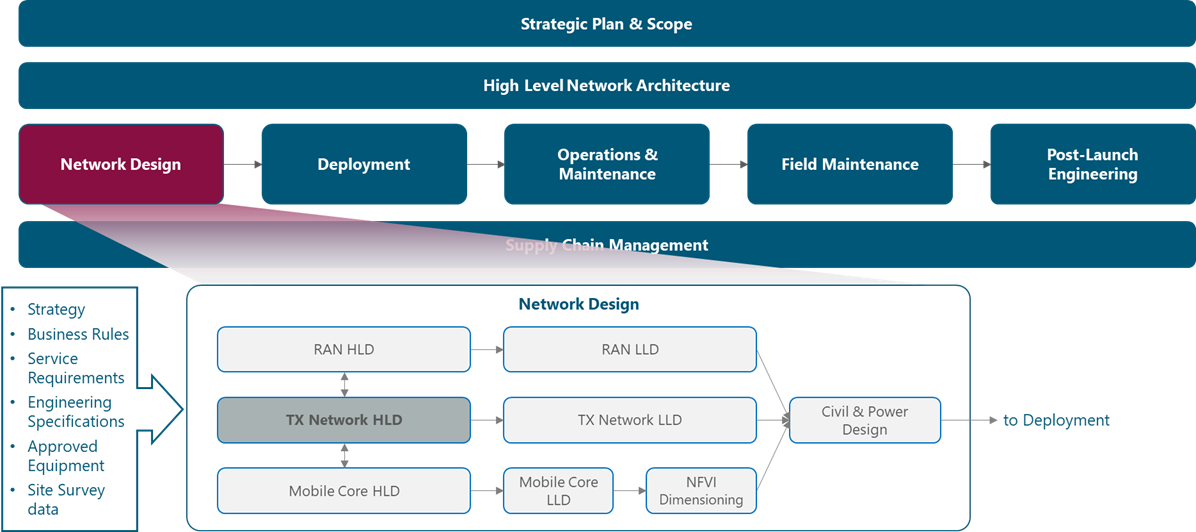

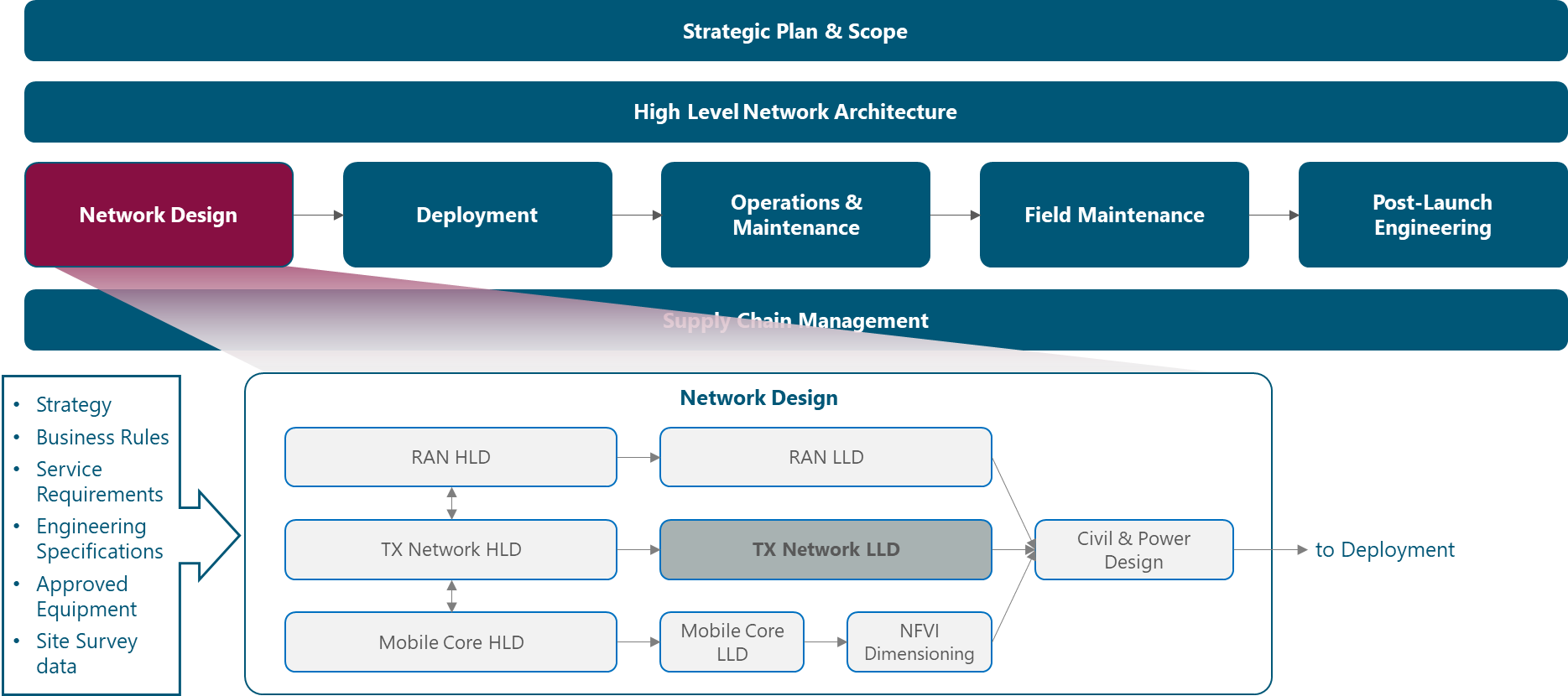

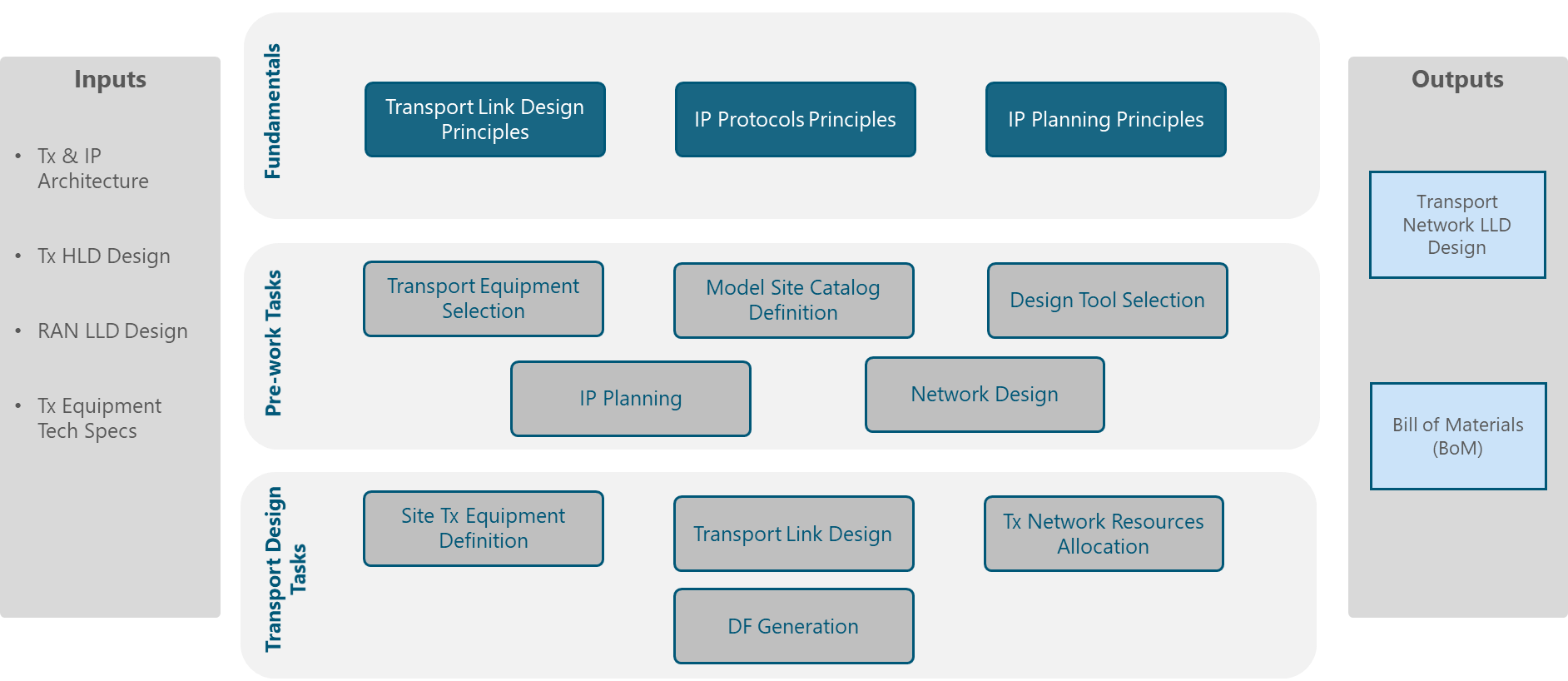

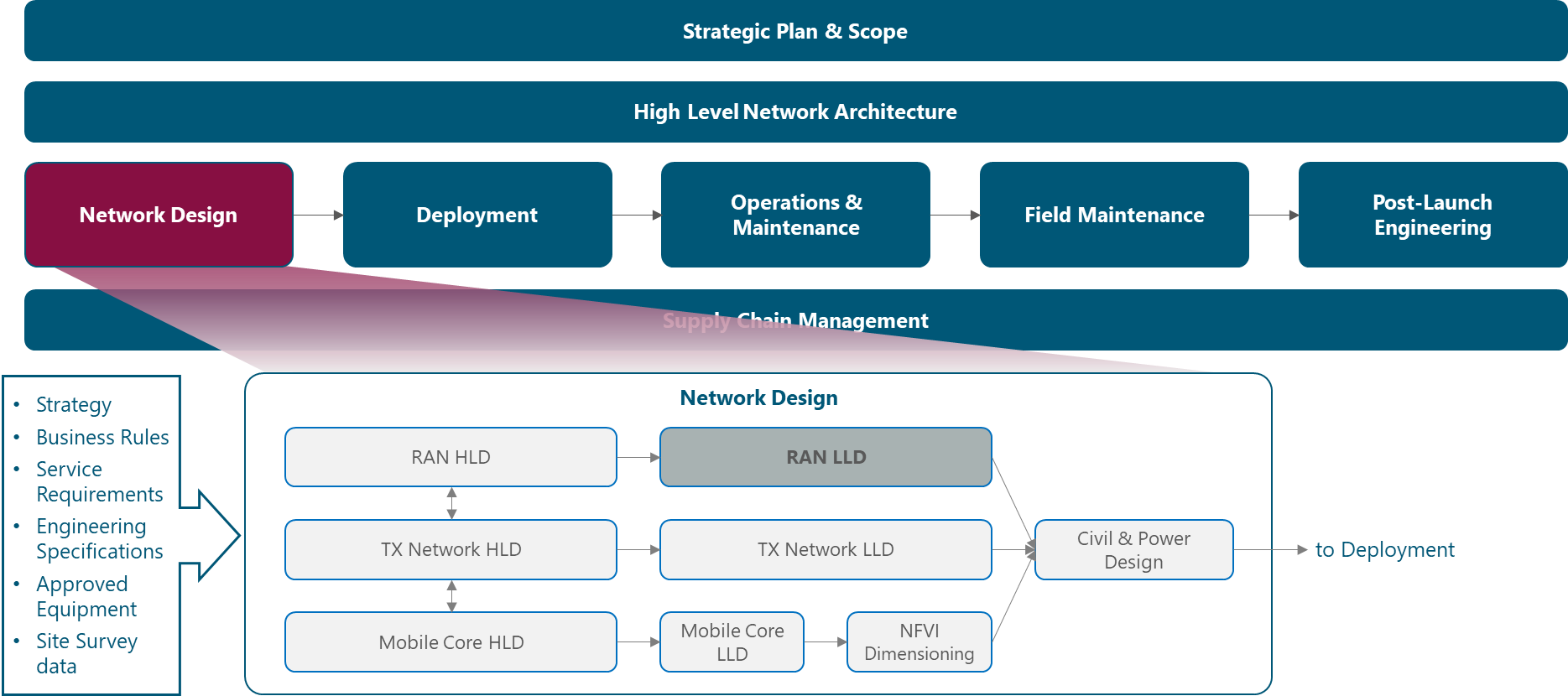

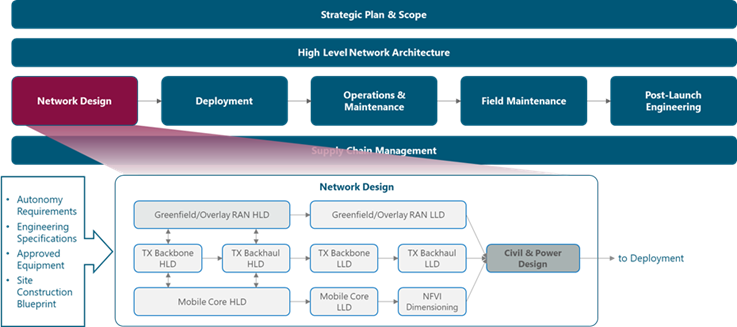

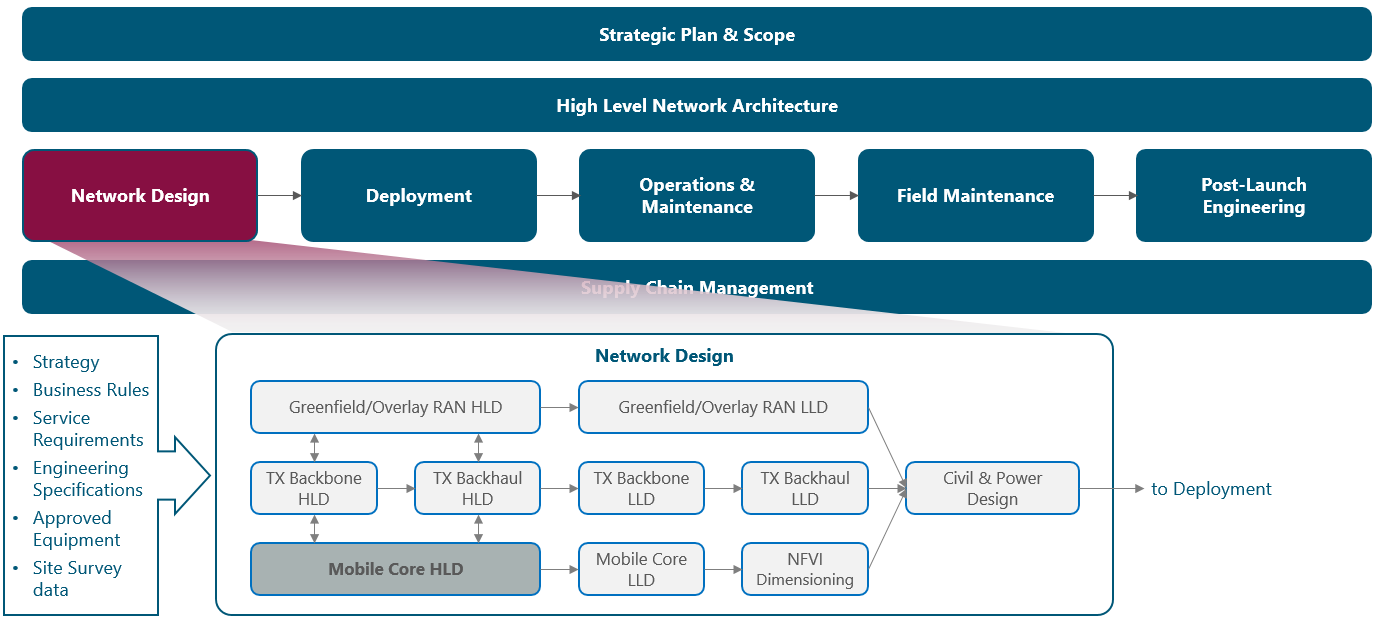

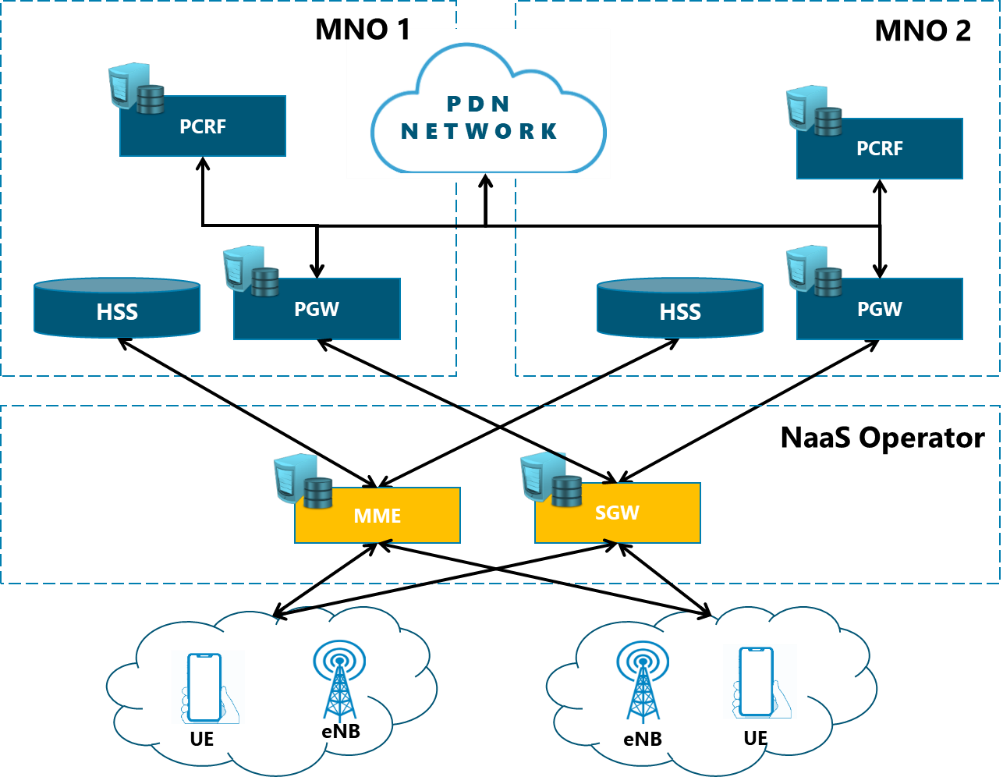

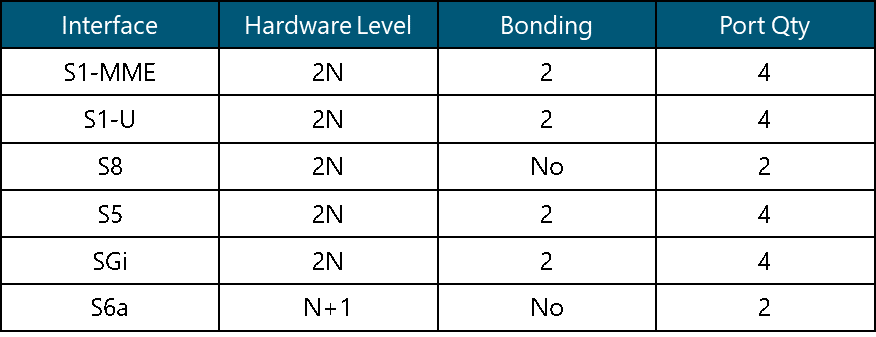

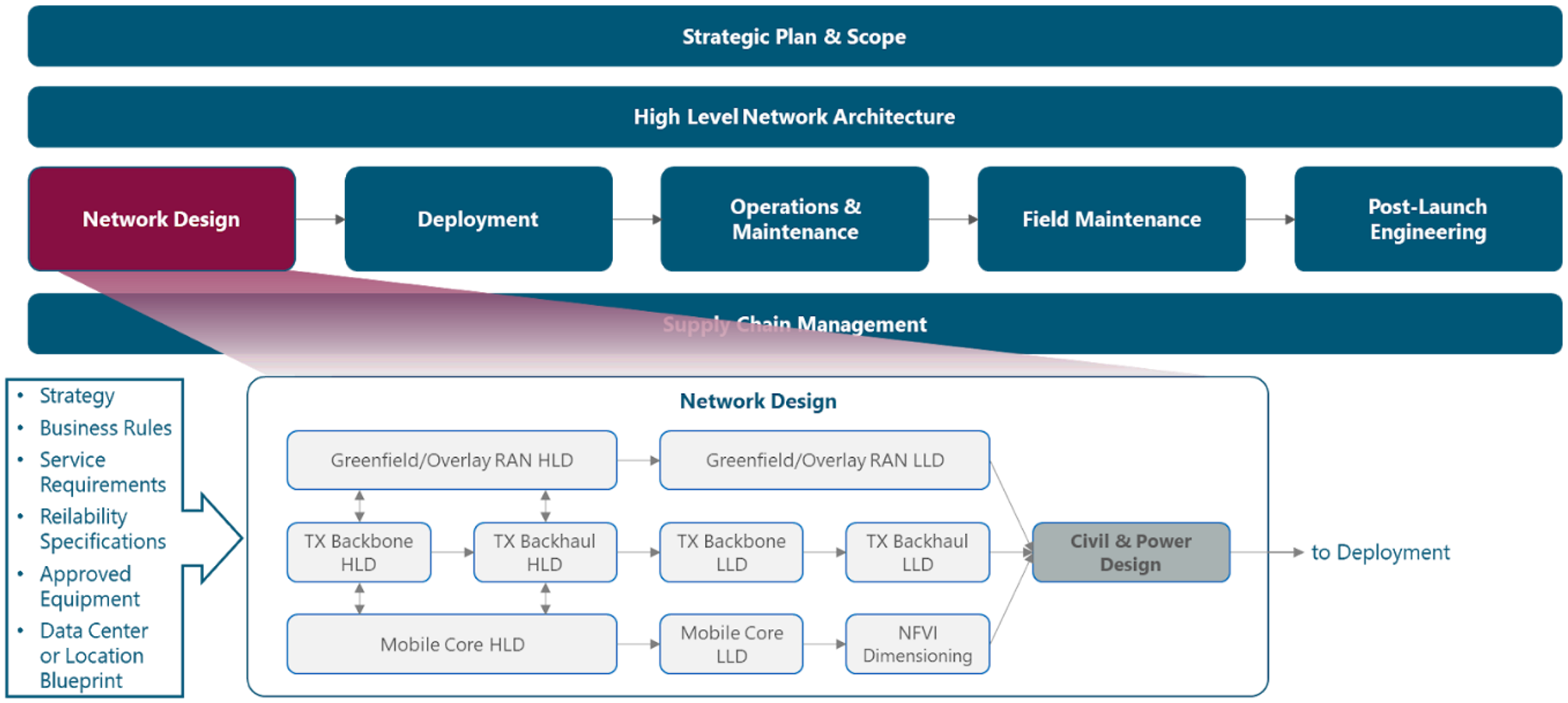

The module framework in Figure 1 describes the structure, interactions, and dependencies among NaaS operator areas.

The strategic plan and scope, along with the high-level network architecture, drive the strategic decisions for subsequent phases. Network design is the first step in an implementation strategy supported by supply chain management.

The Tx network HLD module is part of the network design area and is directly related to the RAN HLD and mobile core HLD modules. The output of this module is a required input for the Tx network LLD module.

Figure 1 ‒ Module framework

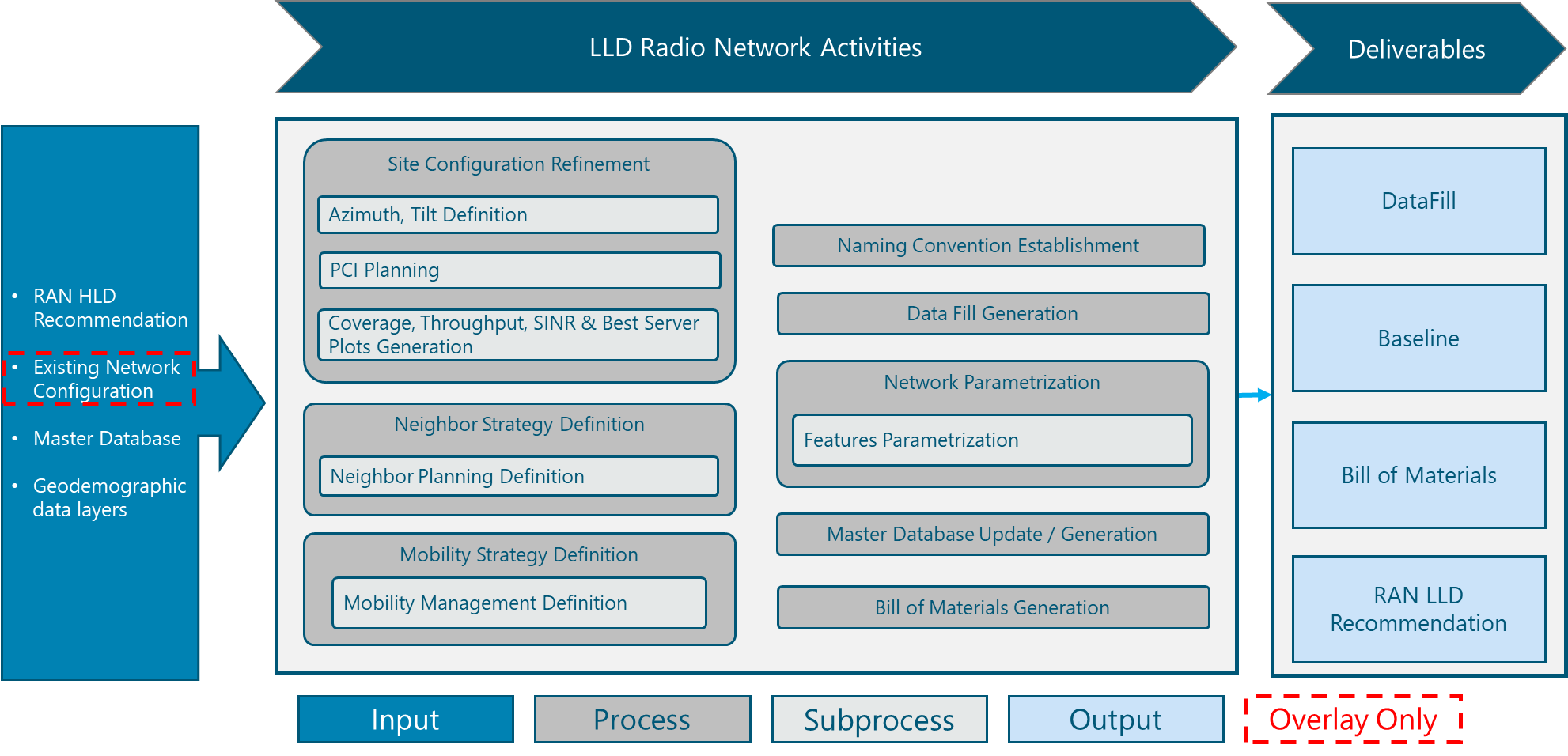

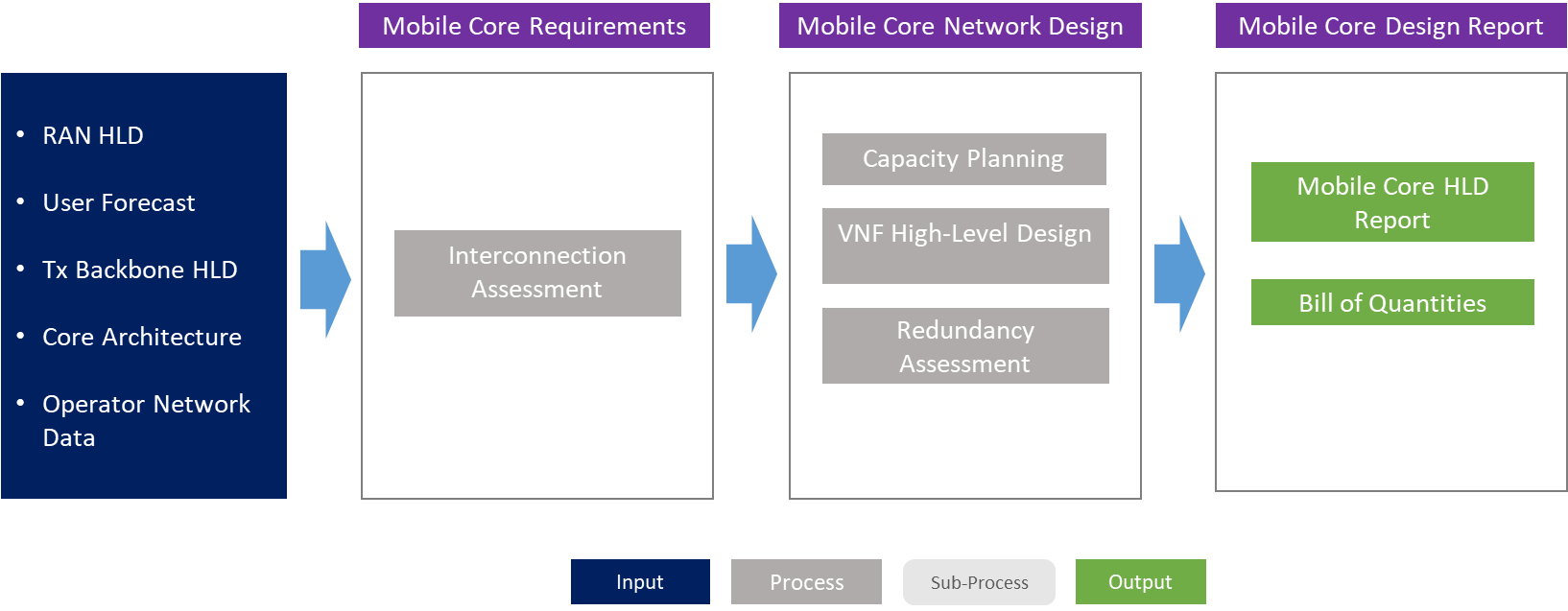

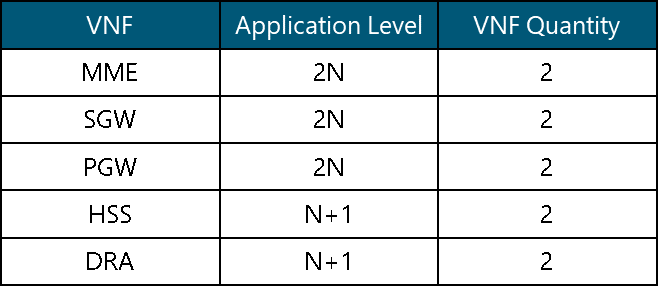

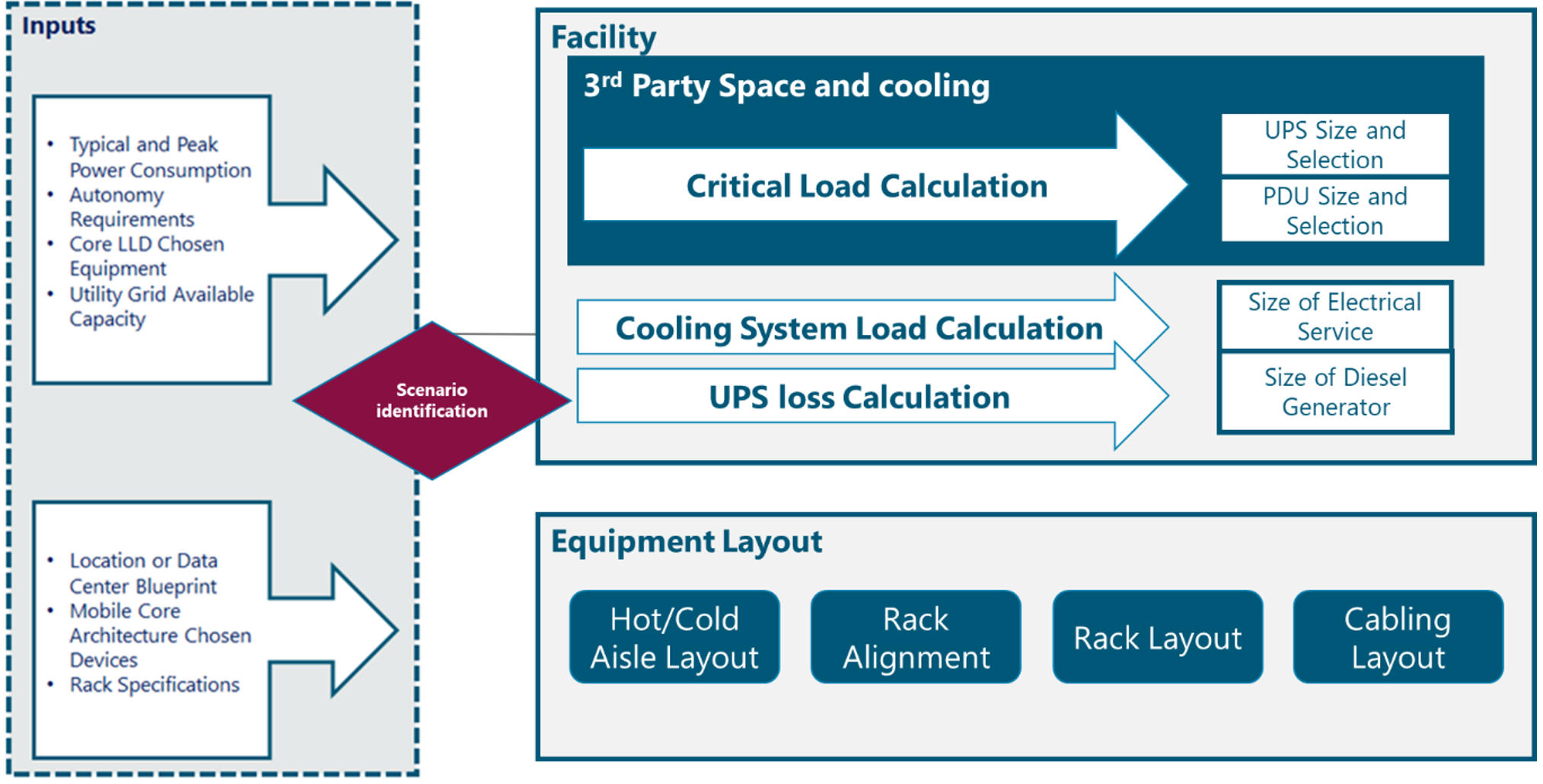

Figure 2 presents the Tx network HLD functional view, where the main functional components are exhibited. Critical module inputs are further described and examined in Section 2.2. In addition, guidance and methodologies to execute the tasks included within each function are described in Section 3.

Figure 2 ‒ Module functional view

The remainder of this module is structured as follows: Section 2 is a high-level overview of the Tx network fundamentals involved in its design. Building on that knowledge, Section 3 provides a hands-on view of the design tasks involved. Section 4 organizes the tasks into an end-to-end process flow that can be used as-is or adapted by NaaS operators to match their particular conditions. Finally, Section 5 illustrates how to integrate the previous elements into a comprehensive HLD recommendation.

A review of fundamental transport technologies is available in the Learning Repository and is a useful prerequisite to this module.

2 HLD Fundamentals

This section gives a general overview of the baseline concepts needed to develop a Tx network design.

2.1 Tx Network Environment

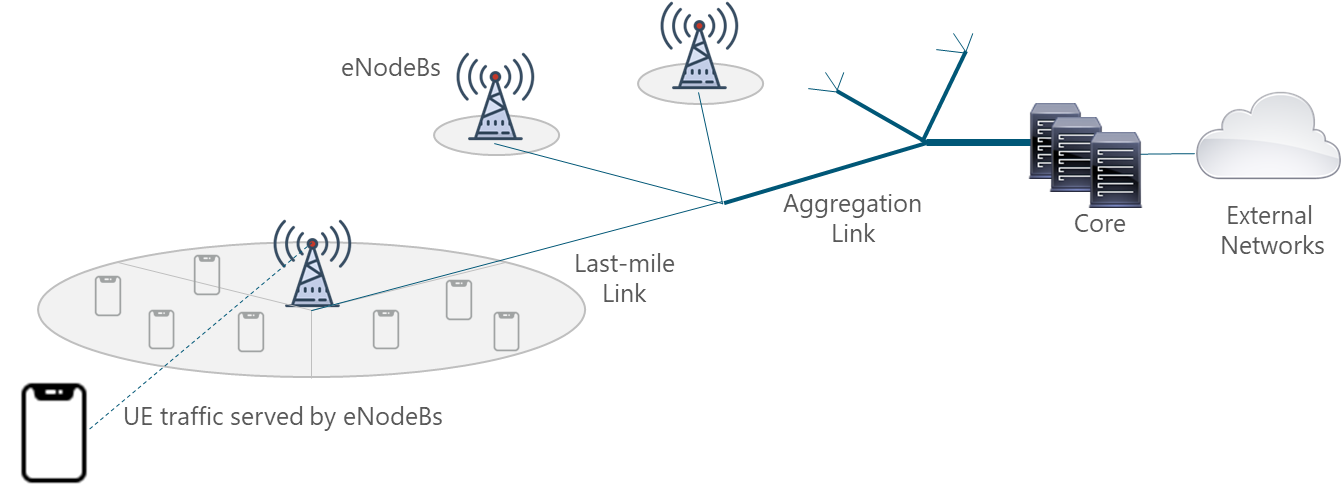

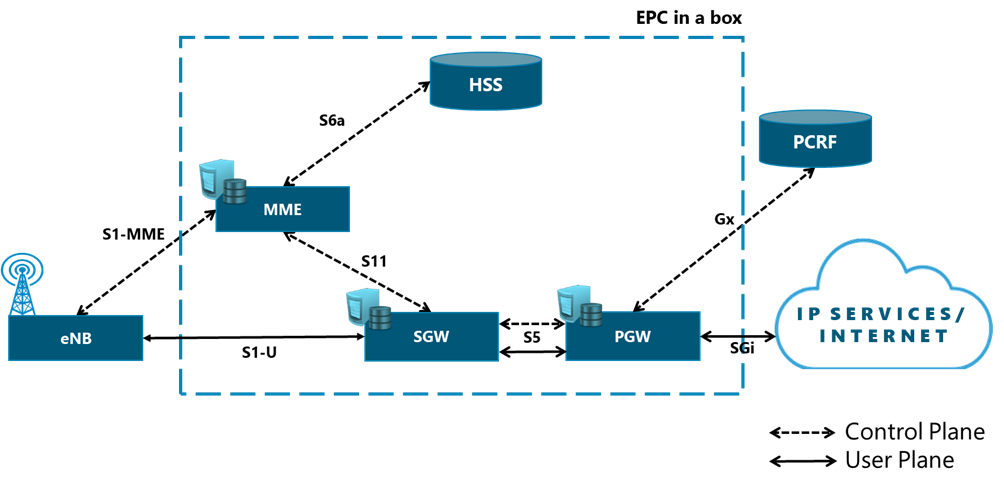

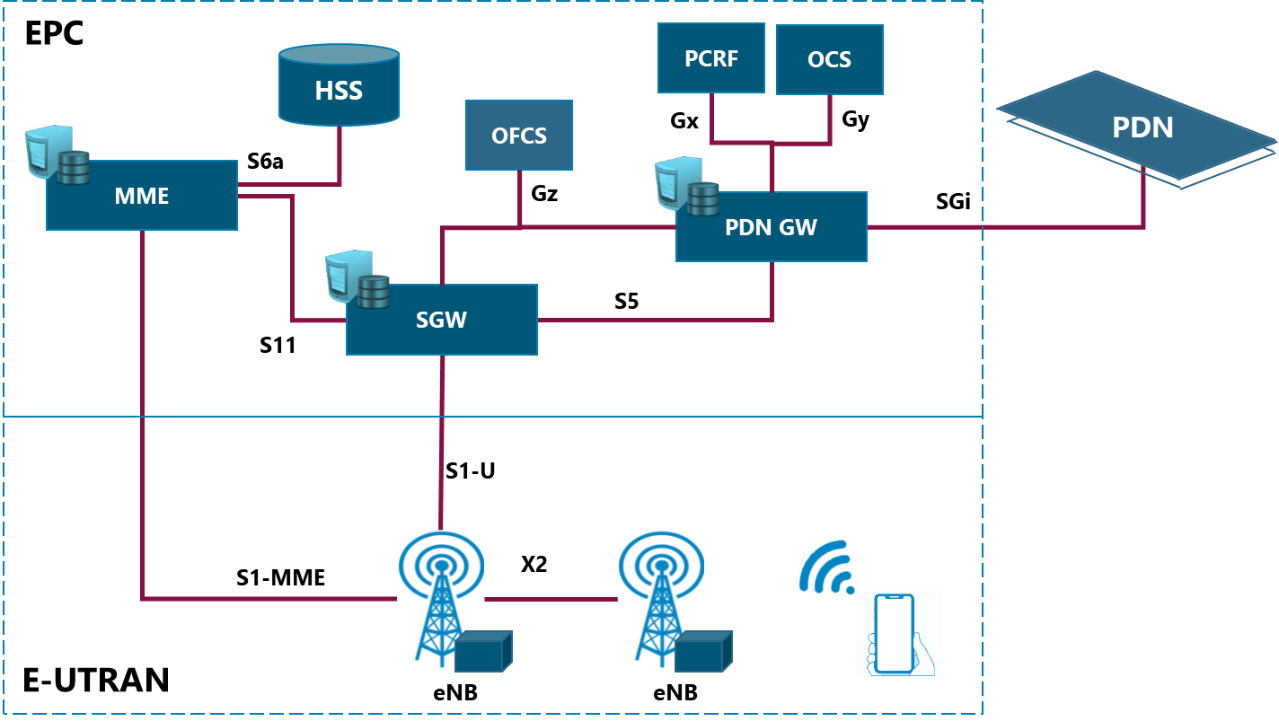

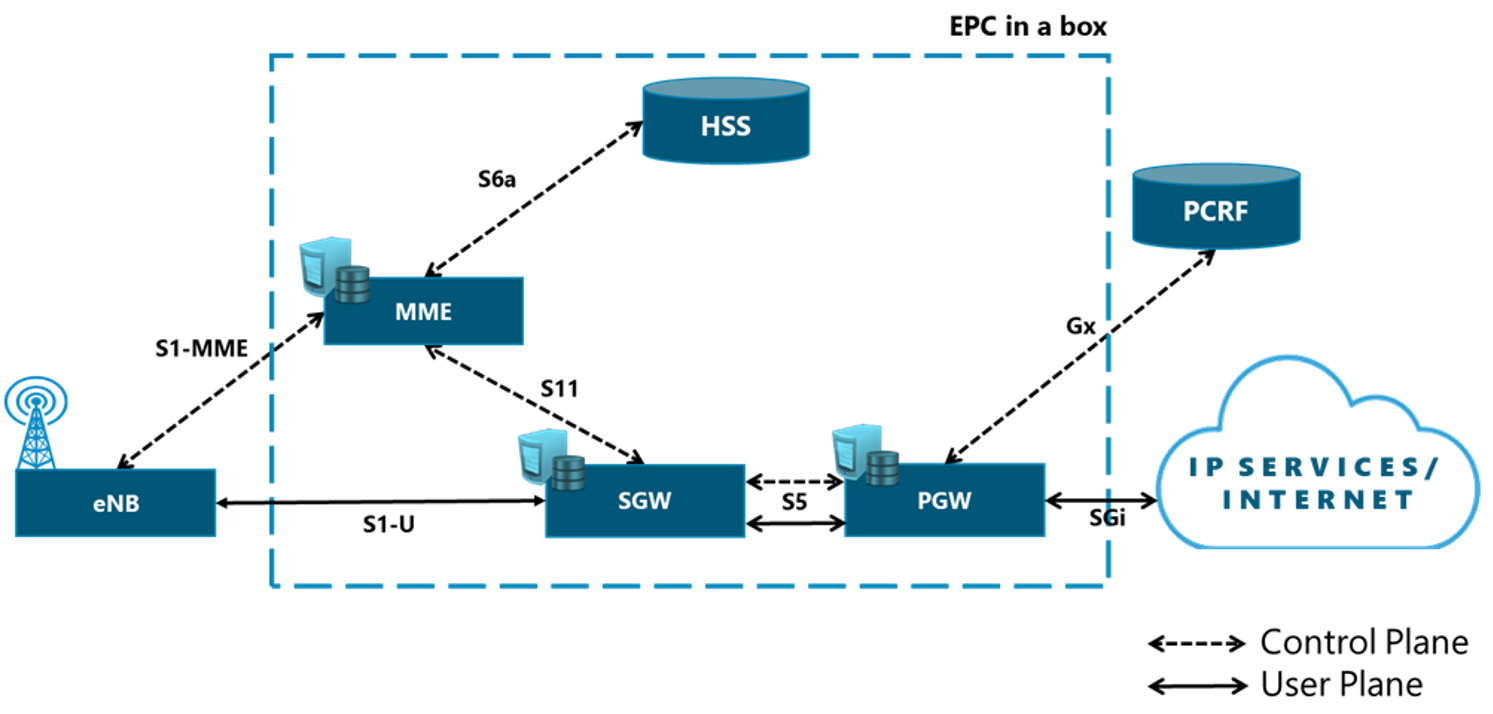

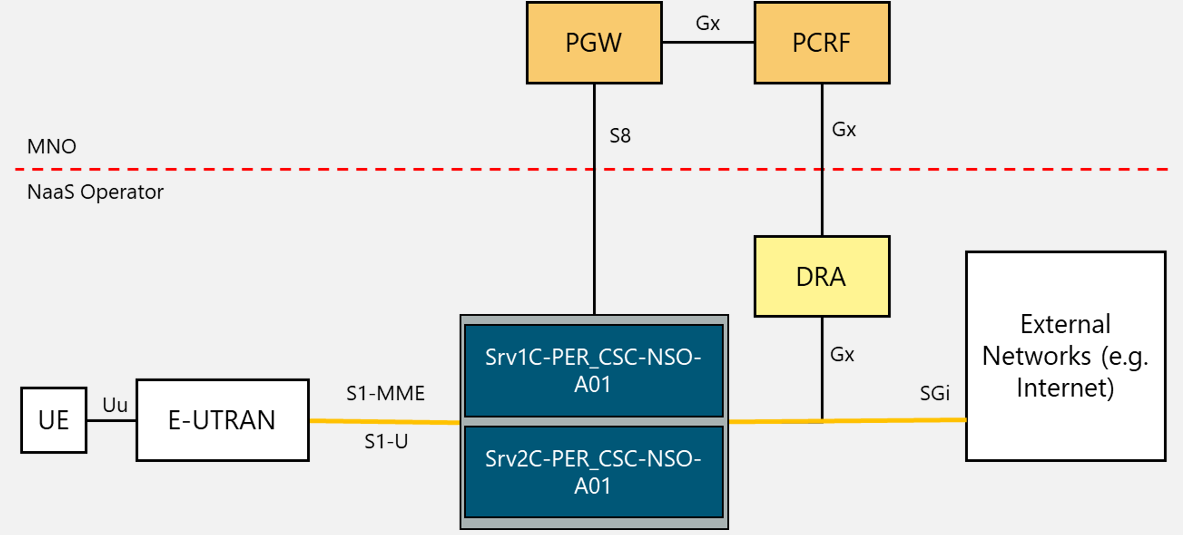

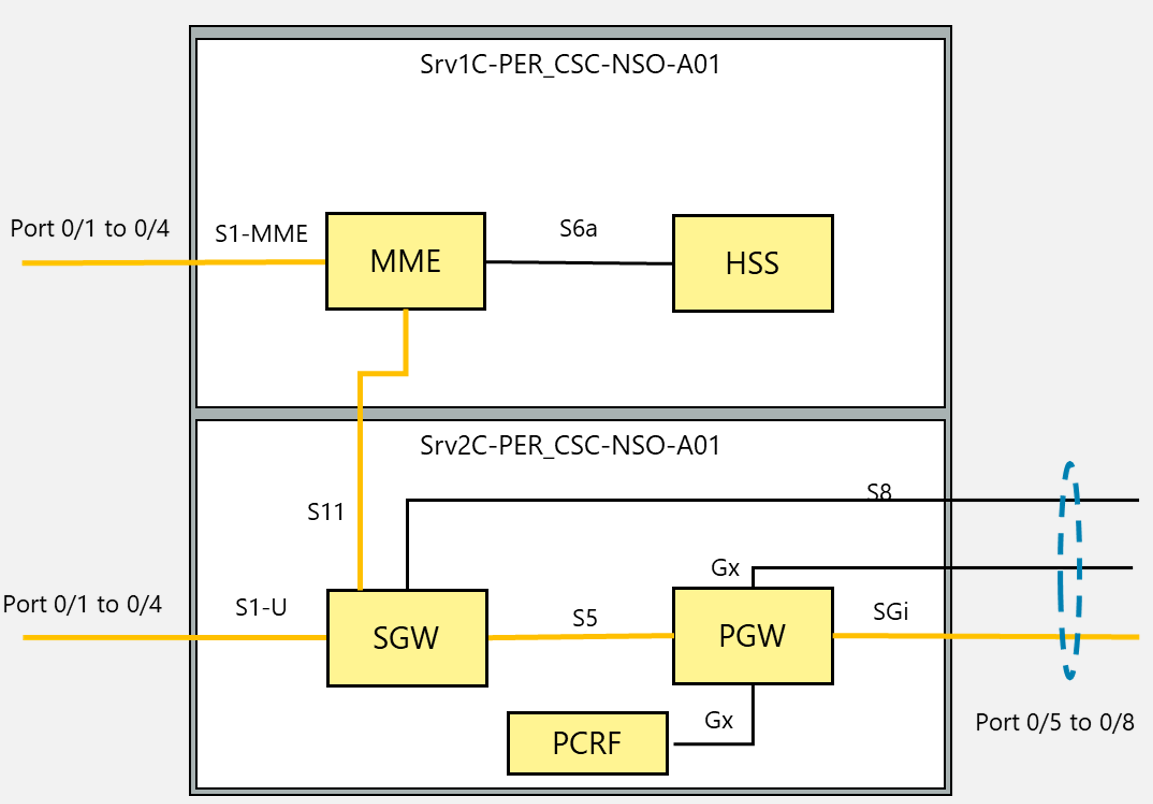

In a mobile environment, the transport network interconnects disparate networks, including the RAN, data centers, and external networks. Figure 3 displays the architecture of a typical transport network.

Figure 3 ‒ Typical transport network

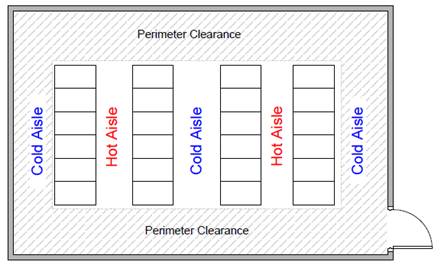

Mobile networks are ubiquitous and support a mix of traffic types originating from and terminating at mobile devices. All of this traffic must be conveyed between the mobile cellular base stations and the transport network. For this reason, the transport network has several aggregation levels which, for most NaaS operators, can be classified as either last-mile or aggregation.

The implementation of 4G long-term evolution (LTE) imposes additional requirements on transport networks, such as higher capacity and lower latency. These are best served by terrestrial technologies (fiber optic and microwave). In rural areas, however, this is a challenge because satellite transport is usually the only feasible technology. For this reason, transport network infrastructure is an essential component of the NaaS operator network, and its design must follow sound processes and techniques.

2.1.1 Requirements for Transport Network

Capacity: Compared to 2G and 3G, LTE base stations carry considerably more traffic. In addition to user traffic, control, management, and synchronization traffic must be considered. A detailed explanation of traffic models is provided in Section 3.4.

Latency: In addition to capacity increase, latency reduction is one of the key goals of LTE deployments. The delay requirements for the transport depend on the end-to-end delay of end customer applications and on the delay budget given to the transport. Latency requirements can be an important limitation for some transport technologies such as satellite and can directly affect its feasibility.

Availability: The availability requirements of the transport network are derived from those of the end-user service. A typical requirement for a transport network in a rural environment could be an availability of 99.95%. In most cases, availability is dominated by the last-mile link.

2.2 Network Design Inputs Description

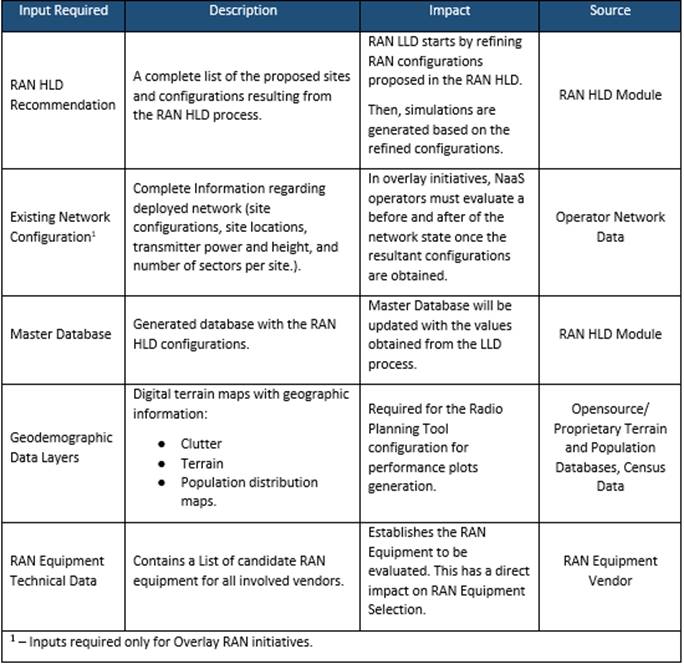

This section analyzes the module input data and their respective candidate sources, as presented in Table 1. In addition, the impact of module inputs on the design process is examined.

| Input Required | Description | Candidate Source | Impact |
|---|---|---|---|
| RAN HLD Design | Describes parameters related to the RAN solution (site location, RAN equipment, covered population) | RAN HLD Module | The total number of RAN sites determines the tool selection for the transport feasibility analysis (details in following sections) |
| Tx & IP Architecture | Includes the technologies, equipment, and protocols to be considered in the design | Tx & IP Architecture Module | Establishes the available transport technologies to be evaluated during the feasibility analysis and their respective technical considerations |
| Operator | Consists of consolidated information regarding the operating data network (e.g., existing network elements, implemented protocols) | Network operator tools (e.g., inventory, OSSs) | Contains the existing transport nodes and technologies to be considered during the feasibility analysis |
| Offered Service Catalogue | Contains a list of services to be provided; an output of the Tx & IP Architecture Module | Tx & IP Architecture Module | Offered services determine the service level requirements for the transport network |
| Commercial Criteria | Guideline criteria to follow during the design process | Commercial area | Establishes guidelines to prioritize one transport provider over others during the feasibility analysis; establishes the budget to acquire design tools for the feasibility analysis |

Table 1 ‒ Module inputs analysis

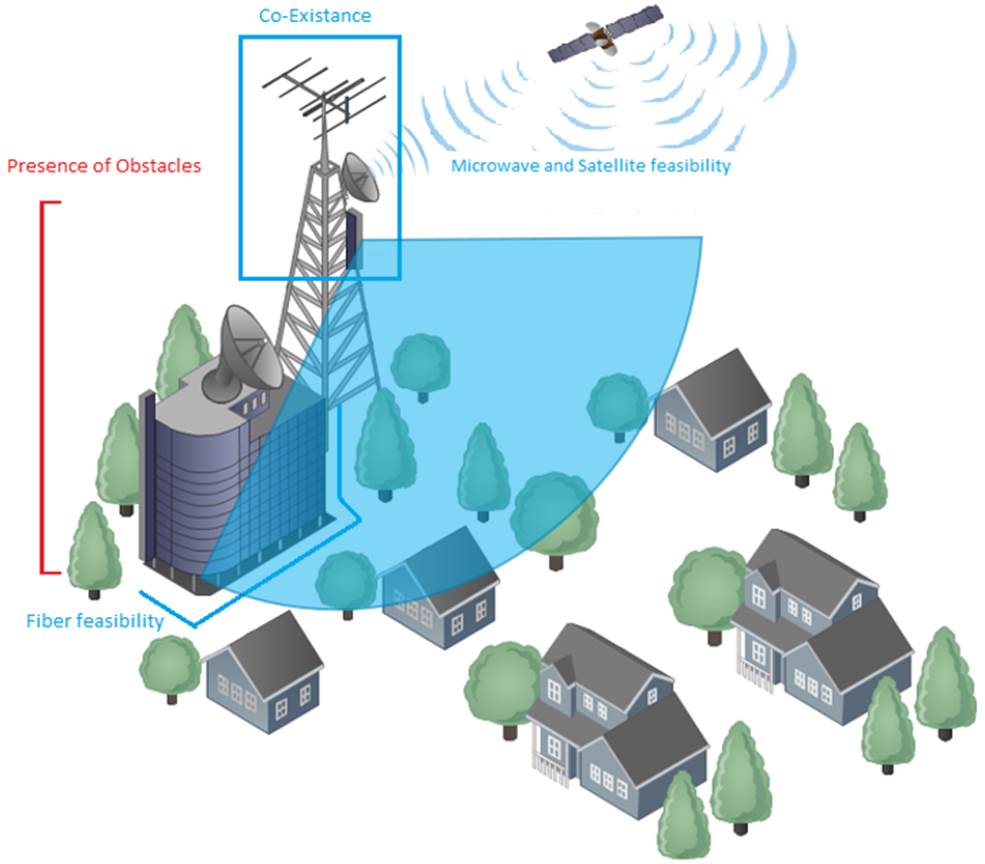

2.3 Transport Solution Feasibility Analysis

This section describes the feasibility considerations for different transport technologies to be used on the transport network. When it comes to technical transport solutions, mobile operators have several at their disposal. Mobile operators prefer fiber optic where available ‒ especially in city centers ‒ but microwave is the mainstay for last-mile traffic. Satellite transport is mainly deployed where existing transport infrastructure isn’t available.

2.3.1 Fiber Optic Technology

Fiber optic is the mainstay wired transport in mobile operator networks when it's available, because of its significant inherent bandwidth-carrying capacity. Several other techniques can be used to offset any bandwidth constraints and essentially render fiber assets future-proof.

While fiber optic has tremendous operational capacity, its main limitations are cost and deployment logistics. Moreover, it can take several months to provision each cell site with fiber optic transport.

The fundamental aspects of fiber optic technology design are discussed in the Primer on Fiber Optic Technologies Principles.

2.3.2 Microwave Technology

Most operators rely heavily on microwave transport solutions in the 5 GHz – 80 GHz bands (in rural environments, frequencies above 15 GHz are generally not feasible). Microwave is a low-cost option for mobile transport, as it can be deployed in a matter of days and supports ranges of up to several tens of kilometers.

The main limitation of microwave links is their line-of-sight (LOS) requirement between transmitter and receiver. This is a drawback for implementation, especially in rural areas, where steep terrain is common. In addition, in many cases a license must be obtained to operate a microwave link. At higher frequencies, microwave links are also subject to atmospheric effects such as rain fade, which can attenuate the signal and limit its range.

The fundamental aspects of microwave technology design are discussed in the Primer on Microwave Technologies Principles.

2.3.3 Satellite Technology

Satellite technology is deployed in fringe network areas, usually in rural locations within emerging markets. It may also be deployed as a temporary measure while an operator waits for regulatory microwave licenses to be approved. Coverage is determined by the satellite footprint. More details are provided in Section 3.

Table 2 presents a comparison of satellite orbits.

| Orbit | GEO | MEO | LEO |
|---|---|---|---|
| Distance | 35,800 km | 2,000–35,000 km | 160–2,000 km |
| Latency | 250–500 ms | 60–250 ms | 30–50 ms |
| Throughput | Up to 500 Mbps | Up to 800 Mbps | 1 Gbps+ (see NOTE) |
| Time between handovers (HO) | No HO | 2–3 hours | 15–20 min |

Table 2 ‒ Satellite orbit comparison

*NOTE: At the time of writing, LEO systems had not been commercially launched; the 1 Gbps value is used as a theoretical reference.
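The latency ranges in Table 2 are driven largely by propagation distance. The sketch below estimates the minimum propagation delay of a bent-pipe hop (terminal to satellite to gateway), assuming the satellite is directly above both ends and the signal travels at the speed of light in vacuum. The MEO and LEO altitudes are hypothetical examples picked from the Table 2 ranges; processing, queuing, and slant-range effects are ignored, so real latencies are higher.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum (km/s)


def bent_pipe_delay_ms(altitude_km: float) -> tuple[float, float]:
    """Return (one-way, round-trip) propagation delay in ms for a bent-pipe hop.

    One way = terminal -> satellite -> gateway, i.e. twice the altitude,
    assuming the satellite is directly overhead (shortest possible path).
    """
    one_way_ms = 2 * altitude_km / C_KM_PER_S * 1000
    return one_way_ms, 2 * one_way_ms


# Example altitudes (MEO and LEO values are hypothetical picks from the Table 2 ranges)
for orbit, altitude_km in [("GEO", 35_800), ("MEO", 8_000), ("LEO", 550)]:
    one_way, rtt = bent_pipe_delay_ms(altitude_km)
    print(f"{orbit}: one-way {one_way:.1f} ms, RTT {rtt:.1f} ms")
```

For GEO this gives roughly 239 ms one way and 478 ms round trip from propagation alone, which is why GEO links sit at the upper end of the latency range.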

The cost of satellite terminal equipment located at the base station is in line with microwave equipment. However, the OPEX burden generated by the satellite service fee can impact the business case.

The fundamental aspects of satellite technology design are discussed in the Primer on Satellite Technologies Principles.

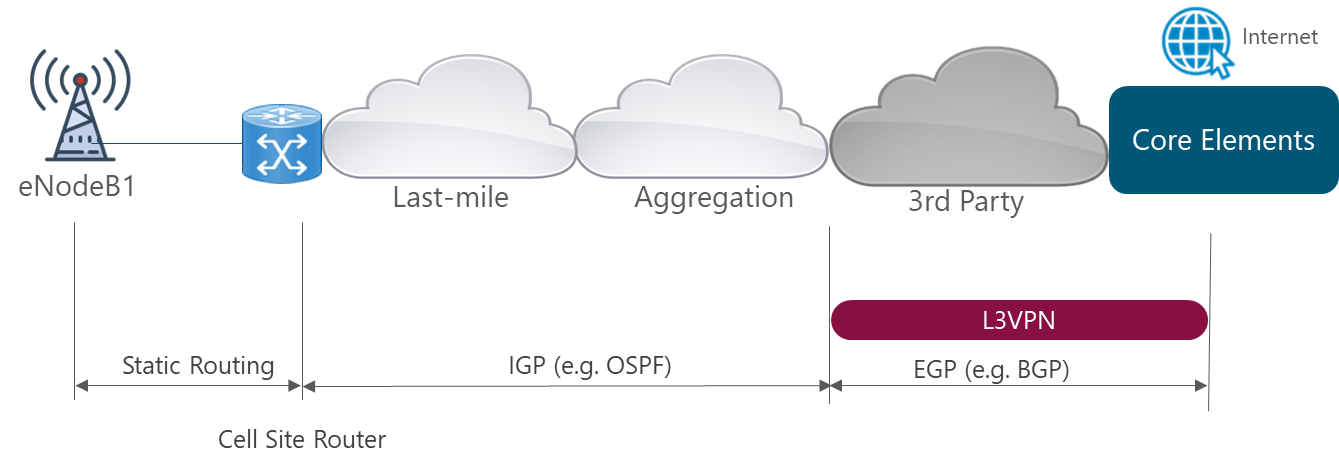

2.4 Physical Topology Design

As stated in Section 2.1, a typical transport network consists of two domains: last-mile and aggregation. In most cases, a connection provided by a third-party network may also be used to reach the core elements. Domain borders are mostly defined by the technology and topology used within each domain. Domain characteristics in terms of physical topology are:

Figure 4 displays the transport network structure:

Figure 4 ‒ Basic structure of a mobile transport network

The depicted structure considers various physical technologies and topologies. From left to right, the last mile connects a demarcation device (usually deployed at the cell site) to a first stage of traffic grooming and concentration. In turn, the aggregation network further aggregates traffic, adapts to any technology change, and provides the handover point to a higher level of the aggregation network.

Physical connectivity represents any technology that can be used to connect nodes (details in Section 3). In addition, a networking layer is implemented on top of the physical layer; it embraces all the logical architectures needed to steer LTE traffic and applications. Details on network topology design are covered in the Tx LLD Module.

2.5 Capacity Planning Process

In simple terms, the transport network should be dimensioned to provide reliable service to users. Users should be able to connect to the network and use their subscribed services at any time inside the coverage area, with service outages or connectivity problems being rare. To achieve this, a capacity planning analysis must be performed to determine the traffic the transport network must support.

2.6 Transport Solution Definition

Assessing whether to implement a transport network or link depends on the following considerations.

Feasible Transport Solutions: Feasibility analysis is an essential step in defining the transport solution to be deployed, because not all solutions are appropriate in rural areas. Feasibility might therefore be the most important constraint in selecting a transport technology.

Capacity Requirements: In wireless-based solutions (MW and satellite), it's essential to validate that sufficient capacity exists to satisfy traffic requirements. Capacity planning assesses how much traffic can be supported by each transport technology.

Deployment Cost: Deployment cost can be an essential criterion when a network operator is faced with deploying several cell sites in one year.

Time to Deploy and Licensing: Network operators are often under pressure to get a cell site operational as quickly as possible. Having to wait several months for a fiber optic connection to be provisioned to a cell site can rule out fiber in the near term.

Table 3 presents a high-level comparison of the transport technologies that can be implemented on the transport network.

| Parameter | Fiber Optic | Microwave | Satellite |
|---|---|---|---|
| Future-proof Available Bandwidth | High | Medium | Low |
| Deployment Cost | High | Low | Medium |
| Interference Immunity | Very high | Medium | Medium |
| Range (km) | <80 | <30* (5–15 GHz) | Subject to satellite footprint |
| Time to Deploy | Months | Weeks | Weeks |
| License Required | No | Both (licensed/unlicensed) | No |

Table 3 ‒ Transport technologies high-level comparison

*Note: Typical value; however, microwave links can be engineered to reach larger distances.
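The selection logic described in Section 2.6 can be sketched as a filter-then-rank procedure: discard options that are not feasible, cannot carry the required capacity, or exceed a deployment deadline, then prefer the lowest deployment cost and, as a tie-breaker, the shortest time to deploy. The option values below are hypothetical, and the ranking order is an assumption; an operator may weight the criteria differently, and this sketch ignores OPEX such as satellite service fees.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TransportOption:
    technology: str
    feasible: bool        # result of the feasibility analysis (LOS, footprint, fiber route)
    capacity_mbps: float  # capacity the option can carry for this site
    cost_usd: float       # hypothetical deployment cost estimate (CAPEX only)
    deploy_weeks: float   # hypothetical time to deploy


def select_transport(options, required_mbps: float,
                     max_weeks: Optional[float] = None) -> Optional[TransportOption]:
    """Filter on feasibility, capacity and deadline; then rank by cost, then time."""
    candidates = [
        o for o in options
        if o.feasible
        and o.capacity_mbps >= required_mbps
        and (max_weeks is None or o.deploy_weeks <= max_weeks)
    ]
    if not candidates:
        return None  # no compliant option: revisit the design or the requirements
    return min(candidates, key=lambda o: (o.cost_usd, o.deploy_weeks))


site_options = [
    TransportOption("Fiber Optic", True, 10_000, 60_000, 24),
    TransportOption("Microwave", True, 400, 15_000, 3),
    TransportOption("Satellite", True, 50, 20_000, 2),
]
choice = select_transport(site_options, required_mbps=100)
print(choice.technology if choice else "no compliant option")
```

Putting feasibility first mirrors the text: an option that fails feasibility is never traded off against cost.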

3 Functions & Methodologies

This section presents recommended methodologies to perform critical tasks/subtasks involved in the Tx network design process.

3.1 Fiber Optic Path Design

3.1.1 Right of Way Definition

An actual fiber route will be determined by the physical locations along the way, local building codes or laws, and other considerations involved in the design. Laying fiber optic cable may cross long lengths of open fields; run along paved rural or urban roads; cross roads, ravines, rivers, or lakes; or ‒ more likely ‒ some combination thereof. It could require buried, aerial, or underwater cables. Cable may be in conduit, innerduct, or direct-buried; aerial cables can be self-supporting or lashed to a messenger. In rural areas, aerial cable is the most common deployment due to its low cost and fast implementation.

For this reason, a NaaS operator must define a set of rules (i.e., rights of way) that specify the criteria to follow during fiber optic laying design. This set of rules prioritizes the previously mentioned characteristics according to the operator's requirements.

3.1.2 Geospatial Database

A geospatial database must be consolidated by the operator during the design process. It should contain road information, topographical data, and sometimes satellite images overlaid on roads (when available).

Other kinds of data to be considered are existing conduit, property boundaries, easements, gas pipelines, and areas of special interest. Each provides extra information that can be used to generate a fiber laying design: either things to avoid (e.g., gas pipes) or ways to save cost (e.g., existing conduit).

Streets and addresses are available as open source data in many places in the world (e.g., OpenStreetMap), but the remainder usually has to be sourced from local government councils or companies. A key step in using geospatial data is to confirm its quality, structure, and format so it can be useful for design.

3.1.3 Fiber Path Design

When designing the fiber optic path, multiple paths linking the origin and destination must be identified. These alternatives will likely involve a combination of rights of way and have different optical lengths.

Although the specific steps can vary depending on the selected GIS tool, general steps in performing fiber path design are:

- Load the locations of origin and destination nodes in a GIS tool.

- Identify available routes linking the origin and destination nodes. Draw the path using the options provided by the GIS tool. Figure 5 shows an example of multiple path alternatives (A, B, C) from origin to destination nodes.

Figure 5 ‒ Example of fiber path design

- Using the GIS tool options, measure the length of each alternative; this represents the optical length of each path. Typically, a 20% margin is added to each measurement. Figure 5 shows each path length, with option A being the longest.

- Rank the alternatives, prioritizing those that best comply with the defined rights of way and have the minimum length. The highest-ranked alternative is selected as the final route.
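The measure-and-rank steps above can be sketched as follows. Each candidate path carries its GIS-measured length and a compliance score against the operator's right-of-way rules; both the score (0 to 1) and the example lengths are hypothetical. The sketch applies the typical 20% margin and ranks paths by compliance first, using the shorter optical length as a tie-breaker; other weightings are equally valid.

```python
from typing import List, Tuple

Path = Tuple[str, float, float]  # (name, measured length in km, right-of-way score 0..1)


def rank_fiber_paths(paths: List[Path], margin: float = 0.20) -> List[Tuple[str, float, float]]:
    """Return (name, optical_km, score) tuples, best candidate first."""
    ranked = []
    for name, measured_km, row_score in paths:
        optical_km = round(measured_km * (1 + margin), 2)  # add the design margin
        ranked.append((name, optical_km, row_score))
    # Better right-of-way compliance first; shorter optical length breaks ties.
    ranked.sort(key=lambda p: (-p[2], p[1]))
    return ranked


candidates = [("A", 14.0, 0.6), ("B", 11.5, 0.9), ("C", 10.2, 0.9)]
for name, optical_km, score in rank_fiber_paths(candidates):
    print(f"Path {name}: {optical_km} km optical, right-of-way score {score}")
```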

3.1.4 Fiber Path Reporting

Once the fiber route is designed, create a path design report that includes:

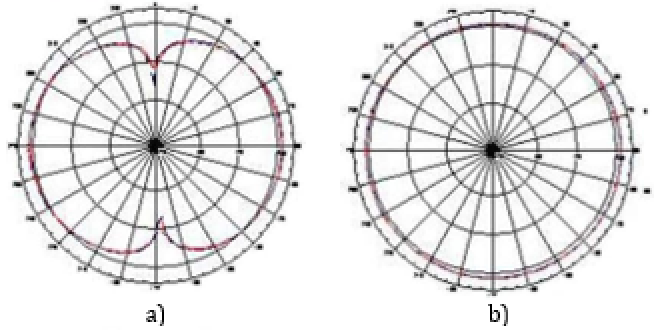

3.2 Microwave Line of Sight (LOS) Verification

A microwave LOS analysis determines whether a clear signal path is available between the transmitting and receiving antennas, that is, whether the first Fresnel ellipsoid is clear of obstacles.

Verifying that no relevant obstacles along the path block LOS clearance is based on geometrical calculations. These require topographical databases for path profile extraction. The profile is drawn in a path profile diagram, along with the expected path taken by the radio signal. The diagram enables comparison of both plots (terrain profile and radio signal path) to analyze possible diffraction or reflection effects of the terrain.
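For reference, the clearance geometry is commonly expressed with two standard relations: the radius of the first Fresnel zone at a point along the path, and the apparent terrain rise caused by Earth curvature (Earth bulge). A sketch, assuming distances in km, frequency in GHz, and an effective Earth-radius factor k (4/3 is the usual standard-atmosphere value):

$$
r_1 = 17.32\sqrt{\frac{d_1\,d_2}{f\,D}}\ \text{[m]}, \qquad h_b = \frac{d_1\,d_2}{12.74\,k}\ \text{[m]}
$$

where $d_1$ and $d_2$ are the distances (km) from the point to each end of the hop, $D = d_1 + d_2$ is the hop length, and $f$ is the frequency (GHz). For example, at the midpoint of a 10 km hop at 11 GHz, $r_1 = 17.32\sqrt{25/110} \approx 8.26$ m and, with $k = 4/3$, $h_b = 25/16.99 \approx 1.47$ m. LOS is cleared at that point when the straight line between the antennas passes at least $r_1$ above the terrain height plus $h_b$.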

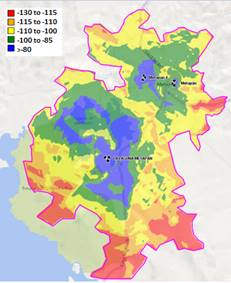

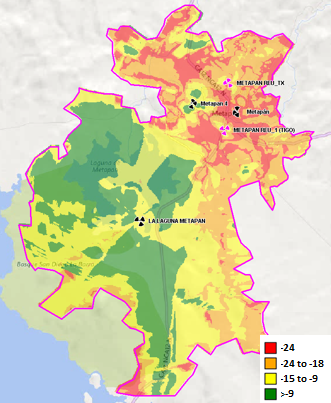

3.2.1 Digital Terrain Databases

In general, most propagation predictions are based on detailed topographical information. This information is provided by digital terrain elevation (DTE) models, composed of digital terrain topographical databases and represented in the form of digital terrain maps. The models provide accurate information that can be used to evaluate path clearance and potential loss associated with diffraction. They are also used when determining interference to or from other stations of the link, or to or from other radio systems sharing the same bands.

Many design tools include DTE models. If the selected tool does not, DTE data is publicly available for many places in the world (e.g., Google Maps Elevation API).

3.2.2 Profile Extraction, Clearance, and Obstructions

The LOS clearance analysis compares the terrain heights along the path profile between the stations of the hop with the expected path followed by the radio signal (first Fresnel ellipsoid).

Although specific steps can vary depending on the selected verification tool, the general steps to perform microwave LOS verification are:

- Load the locations of the origin and destination nodes in the tool. Figure 6 displays the analysis for one site when two transport nodes are considered.

Figure 6 ‒ Example of microwave link design

- Load the tower height information for each site. As a margin, each height is typically taken as 3 m below the actual tower height, to leave space for mounting the MW antennas.

- Using the options provided by the selected tool, measure the distance between the sites; this represents the microwave link length. Figure 6 shows two links, with option A being the longer.

- Select the frequency to be used based on link length (11 GHz in Figure 6).

- Generate the microwave link profile using options provided by the selected tool.

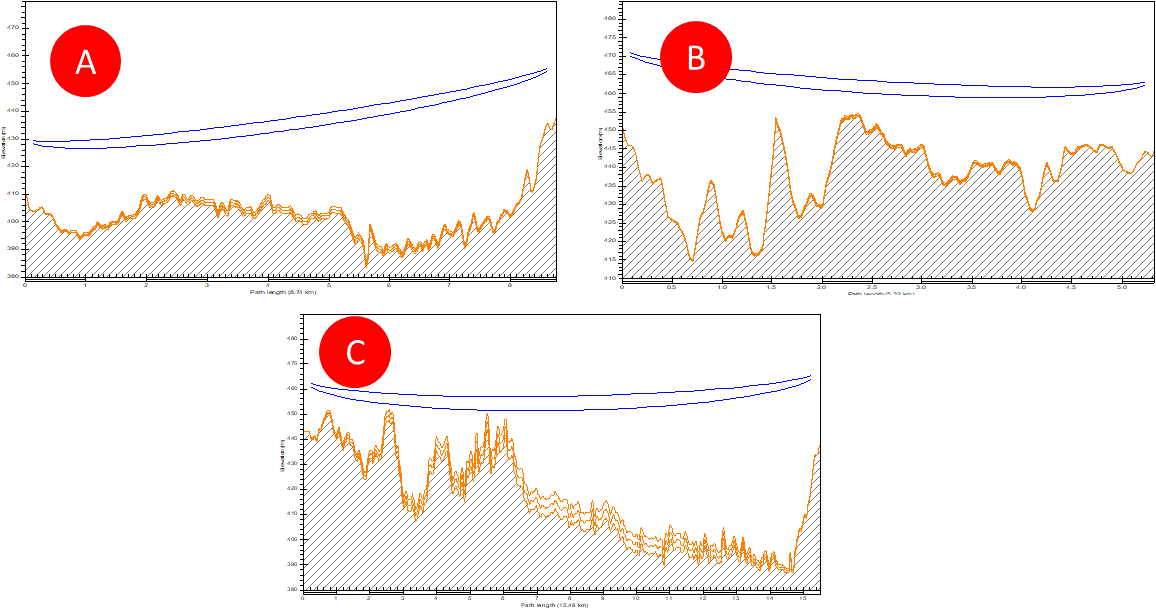

- Verify that the first Fresnel zone is obstacle free (LOS is validated when there are none). Figure 7 depicts profiles generated for the example, where LOS is only validated for link B.

Figure 7 ‒ LOS validation during microwave link design
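The profile check in the steps above can be sketched in a few lines, given a path profile exported from the verification tool as (distance, terrain height) samples. The sketch assumes the standard first-Fresnel-zone radius and Earth-bulge relations with an effective Earth-radius factor k = 4/3, applies the 3 m antenna margin from the steps above, and treats `clearance_fraction=1.0` as full first-Fresnel-zone clearance. The `ProfilePoint` structure and the sample profiles are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ProfilePoint:
    distance_km: float      # distance from site A along the path
    ground_m: float         # terrain elevation above sea level (m)
    clutter_m: float = 0.0  # extra obstacle height, e.g. trees or buildings (m)


def fresnel_radius_m(d1_km: float, d2_km: float, freq_ghz: float) -> float:
    """First Fresnel zone radius (m) at a point d1/d2 km from the hop ends."""
    return 17.32 * math.sqrt(d1_km * d2_km / (freq_ghz * (d1_km + d2_km)))


def earth_bulge_m(d1_km: float, d2_km: float, k: float = 4 / 3) -> float:
    """Apparent terrain rise (m) caused by Earth curvature for radius factor k."""
    return d1_km * d2_km / (12.74 * k)


def check_los(profile, tower_a_m, tower_b_m, freq_ghz,
              k=4 / 3, clearance_fraction=1.0, antenna_margin_m=3.0):
    """Return (los_ok, worst_margin_m) for a hop described by a terrain profile.

    The first and last profile points are the two sites; antennas are assumed to
    be mounted `antenna_margin_m` below the top of each tower.
    """
    a, b = profile[0], profile[-1]
    hop_km = b.distance_km - a.distance_km
    ha = a.ground_m + tower_a_m - antenna_margin_m  # antenna A height above sea level
    hb = b.ground_m + tower_b_m - antenna_margin_m  # antenna B height above sea level
    worst = math.inf
    for p in profile[1:-1]:
        d1 = p.distance_km - a.distance_km
        d2 = hop_km - d1
        ray_m = ha + (hb - ha) * d1 / hop_km  # straight line between antennas
        obstacle_m = p.ground_m + p.clutter_m + earth_bulge_m(d1, d2, k)
        margin = ray_m - obstacle_m - clearance_fraction * fresnel_radius_m(d1, d2, freq_ghz)
        worst = min(worst, margin)
    return worst >= 0, worst


flat = [ProfilePoint(0, 100), ProfilePoint(5, 100), ProfilePoint(10, 100)]
hill = [ProfilePoint(0, 100), ProfilePoint(5, 125), ProfilePoint(10, 100)]
print(check_los(flat, 30, 30, 11))  # clear, about 17 m to spare
print(check_los(hill, 30, 30, 11))  # obstructed
```

A real profile would have many more samples; the worst margin identifies the critical obstacle along the hop.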

3.2.3 Microwave LOS Validation Reporting

Once LOS clearance has been validated, create a link design report that includes the following information:

3.3 Satellite Link Validation

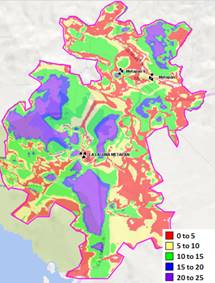

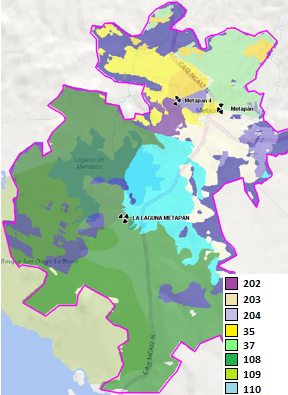

3.3.1 Satellite Coverage Verification

The satellite footprint ‒ the ground area covered by its radiation ‒ should be obtained to validate satellite coverage. Footprint size depends on satellite location within its orbit, the shape and size of beam produced, and its distance from Earth. Footprints usually show estimated signal strength in each area as measured in decibel watts (dBW).

The majority of commercial satellite operators provide footprints in their documentation; footprints can also be obtained from satellite footprint databases (e.g., Satbeams at satbeams.com/footprints).

To establish a satellite link according to service level requirements, the minimum received signal strength must be defined based on the satellite service provider's specifications. Given this data, coverage for a specific site location can be validated using GIS operations. The aim is to determine whether the location is inside the polygon defined by the footprint and has the minimum signal strength required for the implementation. Depending on the tool selected using the methodology in Section 4, these operations can be processed in batch mode.
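A minimal sketch of the batch coverage check described above, assuming the footprint is available as nested EIRP contours (polygons of longitude/latitude vertices, each tagged with its dBW level) and that a flat longitude/latitude plane is acceptable, which holds for contours that do not cross the antimeridian or a pole. The ray-casting point-in-polygon test stands in for the GIS tool's operation; contour values and site coordinates are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Polygon = Sequence[Tuple[float, float]]  # (lon, lat) vertices, not closed


def point_in_polygon(lon: float, lat: float, polygon: Polygon) -> bool:
    """Ray-casting test: count crossings of a ray cast eastward from the point."""
    inside = False
    j = len(polygon) - 1
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        if (yi > lat) != (yj > lat):  # edge straddles the point's latitude
            x_cross = xi + (lat - yi) * (xj - xi) / (yj - yi)
            if lon < x_cross:
                inside = not inside
        j = i
    return inside


def site_eirp_dbw(lon, lat, contours: List[Tuple[float, Polygon]]) -> Optional[float]:
    """Highest EIRP contour (dBW) that contains the site, or None if outside."""
    levels = [level for level, poly in contours if point_in_polygon(lon, lat, poly)]
    return max(levels) if levels else None


def covered_sites(sites, contours, min_eirp_dbw):
    """Batch mode: return the names of sites meeting the minimum EIRP."""
    result = []
    for name, lon, lat in sites:
        eirp = site_eirp_dbw(lon, lat, contours)
        if eirp is not None and eirp >= min_eirp_dbw:
            result.append(name)
    return result


contours = [
    (44.0, [(0, 0), (10, 0), (10, 10), (0, 10)]),  # outer contour
    (48.0, [(3, 3), (7, 3), (7, 7), (3, 7)]),      # inner, stronger contour
]
sites = [("Site-1", 5, 5), ("Site-2", 1, 1), ("Site-3", 20, 20)]
print(covered_sites(sites, contours, min_eirp_dbw=46))  # ['Site-1']
```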

3.3.2 Satellite Parameter Calculation

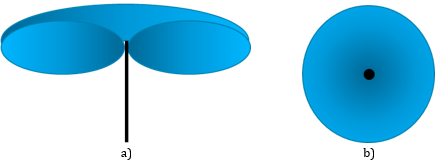

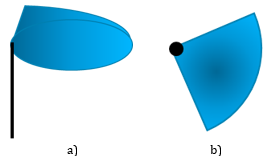

The methodology presented in this section helps determine the pointing parameters for geostationary satellites. Azimuth and elevation angles are referred to as the look angles from the earth station (ES) to the satellite. Figure 8 shows the geometry and definitions of the look angles with respect to the earth station reference.

Figure 8 ‒ Look angles with respect to ES reference

The azimuth angle, ϕ, is measured from true north in an eastward direction to the projection of the line of sight to the satellite onto the local horizontal plane. The elevation angle, θ, is the angle between the line of sight to the satellite and the observer's local horizontal plane, measured in the local vertical plane.

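Look angles from an earth station to a geostationary satellite are determined from the following expressions (the azimuth expression applies to sites in the northern hemisphere):

$$
\phi = 180^\circ + \arctan\left(\frac{\tan(N - S)}{\sin L}\right)
$$

$$
\theta = \arctan\left(\frac{\cos(S - N)\cos L - \dfrac{R_E}{R_E + h_{GEO}}}{\sqrt{1 - \cos^2(S - N)\cos^2 L}}\right)
$$

Where:

S = Satellite longitude in degrees

N = Site longitude in degrees

L = Site latitude in degrees

$R_E / (R_E + h_{GEO}) \approx 6{,}378 / 42{,}164 \approx 0.1513$ is the ratio of the Earth radius to the geostationary orbit radius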
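A minimal sketch of the look-angle calculation for a geostationary satellite. It uses the standard spherical-geometry relations (azimuth as the bearing from the site to the sub-satellite point, elevation from the central angle between them) and `atan2`, so the azimuth quadrant is resolved for sites in either hemisphere. The orbit radius of 42,164 km and the equatorial Earth radius of 6,378 km are standard values; the example site is hypothetical.

```python
import math

R_EARTH_KM = 6378.137  # equatorial Earth radius
R_GEO_KM = 42164.17    # geostationary orbit radius, measured from Earth's centre


def look_angles(sat_lon_deg: float, site_lon_deg: float, site_lat_deg: float):
    """Return (azimuth, elevation) in degrees from an earth station to a GEO satellite.

    Azimuth is measured clockwise from true north; a negative elevation means the
    satellite is below the local horizon and cannot be used from this site.
    """
    g = math.radians(sat_lon_deg - site_lon_deg)  # S - N, east positive
    lat = math.radians(site_lat_deg)              # L
    # Bearing from the site to the sub-satellite point (latitude 0, longitude S).
    azimuth = math.degrees(math.atan2(math.sin(g), -math.sin(lat) * math.cos(g))) % 360.0
    # Central angle gamma between site and sub-satellite point: cos(gamma) = cos(G) cos(L).
    cos_gamma = math.cos(g) * math.cos(lat)
    sin_gamma = math.sqrt(max(0.0, 1.0 - cos_gamma ** 2))
    elevation = math.degrees(math.atan2(cos_gamma - R_EARTH_KM / R_GEO_KM, sin_gamma))
    return azimuth, elevation


az, el = look_angles(sat_lon_deg=10.0, site_lon_deg=0.0, site_lat_deg=45.0)
print(f"azimuth {az:.1f} deg, elevation {el:.1f} deg")
```

For a site at 45° N on the same meridian as the satellite, the sketch returns an azimuth of 180° (due south) and an elevation of about 38.2°.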

3.3.3 Satellite Coverage Validation Reporting

Once satellite coverage is validated and look angles calculated, create a link design report that includes the following information:

3.4 Capacity Forecast Analysis

This section presents a methodology to obtain the total amount of traffic the transport network needs to support. It can be summarized as:

3.4.1 Sector Throughput

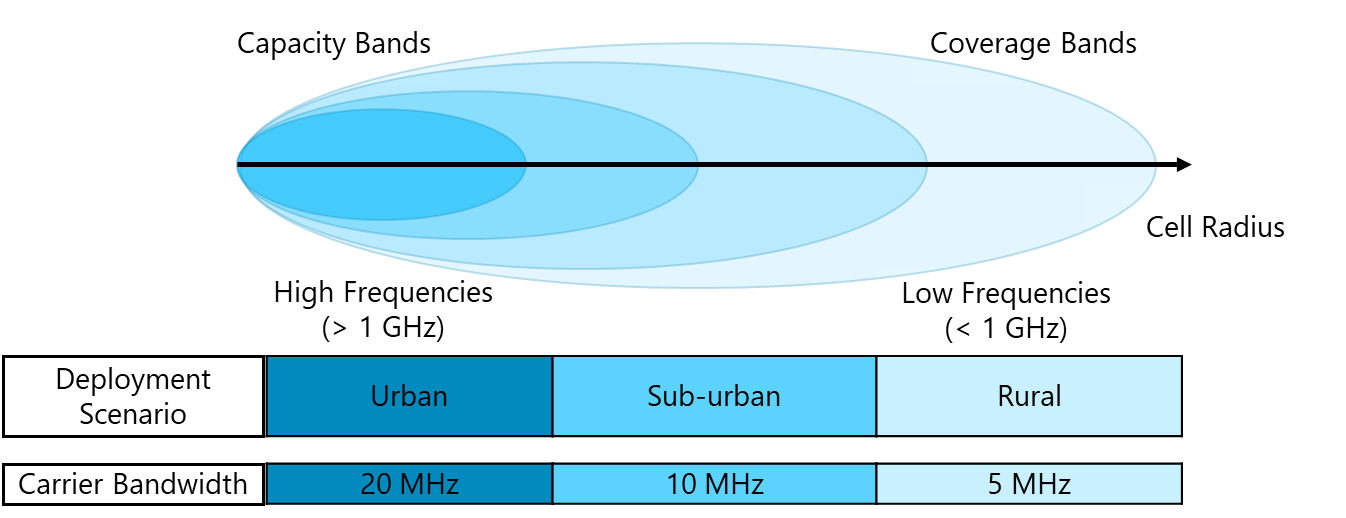

In a typical LTE cell, throughput varies due to the averaging effect of many user equipment devices (UEs) using the network. Peak throughputs (and thus peak last-mile link throughputs) occur during quiet times, when a single UE with a good radio link has the entire cell to itself. Last-mile provisioning should ensure that advertised peak rates are at least feasible. Figure 9 shows sector throughput variations.

Figure 9 ‒ Illustration of cell throughput variations

Define the following inputs to model traffic behavior presented in Figure 9:

| Bandwidth (MHz) | Scenario | Sector average throughput, DL (Mbps) | Sector average throughput, UL (Mbps) |
|---|---|---|---|
| 5 | Urban | 8 | 5 |
| 5 | Suburban | 6 | 3 |
| 10 | Urban | 17 | 10 |
| 10 | Suburban | 13 | 7 |
| 15 | Urban | 25 | 15 |
| 15 | Suburban | 20 | 10 |
| 20 | Urban | 33 | 20 |
| 20 | Suburban | 26 | 14 |

Table 4 ‒ Typical average sector throughput for LTE-FDD (considering MIMO 2×2)

3.4.2 Cell Throughput

In an LTE network, UEs are served by one of many sectors in the coverage area. A macro LTE base station (eNodeB) typically manages three sectors; micro and pico eNodeBs typically control only one sector. Transport traffic per eNodeB is the total traffic generated by all the sectors it controls. In real scenarios, it's highly unlikely that the peaks of multiple sectors occur at the same time; however, the average throughput occurs in all sectors simultaneously. Equation 1 displays a common approach to calculate the transport capacity for multiple sectors:

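$$
\text{Cell Throughput} = \max\left(\text{Sector Peak Throughput},\ \#Sectors_{4G} \times \text{Sector Average Throughput}\right)
$$

(Eq. 1)

Where:

$\#Sectors_{4G}$ is the total number of sectors (3 in macro, 1 in micro/pico)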
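The cell-level approach described above can be combined with Table 4 in a short sketch: since sector peaks rarely coincide but averages occur in all sectors at once, the eNodeB figure is taken as the larger of a single-sector peak and all sectors running at their average throughput. The peak values passed in are hypothetical inputs, since Table 4 lists only averages.

```python
# Table 4: average sector throughput (DL, UL) in Mbps for LTE-FDD with MIMO 2x2
SECTOR_AVG_MBPS = {
    (5, "Urban"): (8, 5), (5, "Suburban"): (6, 3),
    (10, "Urban"): (17, 10), (10, "Suburban"): (13, 7),
    (15, "Urban"): (25, 15), (15, "Suburban"): (20, 10),
    (20, "Urban"): (33, 20), (20, "Suburban"): (26, 14),
}


def cell_throughput_mbps(bandwidth_mhz, scenario, sectors, peak_dl_mbps, peak_ul_mbps):
    """Transport capacity for one eNodeB: max(single-sector peak, sectors x average)."""
    avg_dl, avg_ul = SECTOR_AVG_MBPS[(bandwidth_mhz, scenario)]
    dl = max(peak_dl_mbps, sectors * avg_dl)
    ul = max(peak_ul_mbps, sectors * avg_ul)
    return dl, ul


# Macro eNodeB (3 sectors), 10 MHz urban, with hypothetical sector peaks of 75/25 Mbps
print(cell_throughput_mbps(10, "Urban", 3, peak_dl_mbps=75, peak_ul_mbps=25))  # (75, 30)
```

In this example the downlink is sized by the single-sector peak, while the uplink is sized by the three sectors running at their average.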

3.4.3 Last-Mile Link Capacity

In addition to user plane traffic, transport traffic comprises a number of additional components:

Equation 2 displays a high-level approach to calculate last-mile transport capacity for a single eNodeB:

![]()

(Eq. 2)

3.4.4 Aggregation Link Capacity

Peak cell throughput is mainly applicable to the last mile of the transport network, or when aggregating a small number of eNodeBs. Toward the core, traffic of many cells is aggregated and the average capacity is the dominant factor.

Moreover, when multiple eNodeBs are aggregated, a statistical gain can be applied, as the eNodeBs are unlikely to use all available capacity at the same time. This gain is represented by the overbooking factor (OBF). Equation 3 displays the approach to calculate the transport capacity required for a link that aggregates multiple eNodeBs:

![]()

(Eq. 3)

Where:

Capacity forecast analysis can be performed using the Tx Network Capacity Forecast Widget.
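A sketch of the aggregation step, under stated assumptions: the exact form of Equation 3 may differ, so this sketch uses a hypothetical formulation in which the aggregated capacity is the sum of the per-eNodeB last-mile capacities divided by the overbooking factor (OBF), floored at the largest single last-mile requirement so that one eNodeB's peak still fits when only a few sites are aggregated. The 90 Mbps per-site figure and the OBF value are hypothetical.

```python
from typing import Sequence


def aggregation_capacity_mbps(last_mile_mbps: Sequence[float], obf: float) -> float:
    """Capacity for a link aggregating several eNodeBs (hypothetical formulation).

    Sum of last-mile capacities divided by the overbooking factor, but never
    less than the largest single last-mile requirement.
    """
    if not last_mile_mbps:
        raise ValueError("at least one eNodeB is required")
    if obf < 1:
        raise ValueError("overbooking factor is expected to be >= 1")
    return max(max(last_mile_mbps), sum(last_mile_mbps) / obf)


print(aggregation_capacity_mbps([90.0] * 8, obf=3))  # 240.0: statistical averaging dominates
print(aggregation_capacity_mbps([90.0] * 2, obf=3))  # 90.0: a single peak dominates
```

The floor reflects the text above: peak throughput matters when only a few eNodeBs are aggregated, while averages dominate toward the core.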

3.5 Availability Calculation

This section describes a methodology to perform a generic availability calculation that can be used to compare network topologies. The calculation must consider failures due to links, equipment, power outages, or weather conditions. In rural environments, additional factors must be taken into account due to environmental phenomena (e.g., fiber cuts, equipment failure due to storms).

Availability is calculated using commonly known formulas for mean time between failures (MTBF) and mean time to repair (MTTR). Equipment vendors provide MTBF values for equipment and hardware modules. MTTR is the time taken to repair a failed system. Systems may recover from failures automatically or by manual repair. MTTR varies from operator to operator, depending (for example) on replacement hardware availability and the time it takes to deliver it to the site and change it out.

Availability (A) is calculated from the MTBF and MTTR, as presented in Equation 4:

$$
A = \frac{MTBF}{MTBF + MTTR}
$$

(Eq. 4)

Table 5 shows the downtime period per year according to different values of availability.

| Availability | Downtime per year |
|---|---|
| 99% | 3.65 days |
| 99.9% | 8.76 hr |
| 99.99% | 52.6 min |
| 99.999% | 5.3 min |

Table 5 ‒ Availability and corresponding downtime per year
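Downtime per year follows directly from the availability: the unavailable fraction (1 − A) multiplied by the 8,760 hours in a non-leap year. A short sketch that reproduces Table 5 and also converts the 99.95% rural target mentioned in Section 2.1.1:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 h, ignoring leap years


def downtime_per_year_hours(availability: float) -> float:
    """Expected yearly downtime (hours) for an availability given as a fraction."""
    return (1.0 - availability) * HOURS_PER_YEAR


for a in (0.99, 0.999, 0.9995, 0.9999, 0.99999):
    hours = downtime_per_year_hours(a)
    print(f"{a * 100:g}%: {hours:.2f} h/year = {hours * 60:.1f} min/year")
```

The 99.95% target, for example, allows about 4.4 hours of downtime per year.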

3.5.1 Availability of a Serial System

A single transport link can be seen as a chain of elements connected in series. Redundancy is taken into account by raising the probability of failure of an element to a power equal to the number of redundant elements. The availability of the link is given by Equation 5:

$$
A_{total} = \prod_{i=1}^{m} \left[1 - \left(1 - A_i\right)^{n_i}\right]
$$

(Eq. 5)

Where $m$ is the number of elements in series and $n_i$ is the number of redundant units of element $i$. The availability of each element ($A_i$) can be calculated with Eq. 4. If data is not available, generic values can be used according to equipment and link type.

3.5.2 Availability Calculation Example

Again, availability calculations can be performed to compare network topologies. In this example, the availability of a single MW link (Figure 10) is calculated.

Figure 10 ‒ Availability calculation for a MW link

The first step is to calculate availability of the different equipment taking into account its replacement time. MTBF values vary depending on vendor, with the Table 6 values used in this example:

| Equipment | MTBF (years) | MTTR (hours) | Availability |
|---|---|---|---|
| Microwave outdoor unit | 80 | 6 | 99.9991% |
| Microwave indoor unit, 1+0 | 60 | 4 | 99.9992% |
| Microwave outdoor unit, hot standby | – | – | 100.0000% |
| Microwave indoor unit, 1+1 | – | – | 100.0000% |

Table 6 ‒ Availability calculations for various hardware configurations

Redundancy is a simple technique to improve link availability. In this example, hardware protection options are considered to implement redundancy: a hot standby configuration for the outdoor unit and a 1+1 configuration for the indoor unit. The availability calculations for these configurations are included in Table 6.

Table 7 presents the total MW link availability calculated with Equation 5, considering the indoor and outdoor units as well as availability due to climatic factors. In this example, a MW link availability of 99.999% due to climatic factors is considered.

| Equipment and link configuration | Availability |
|---|---|
| Non hot standby + (1 + 0) indoor unit | 99.9958% |
| Hot standby + (1 + 0) indoor unit | 99.9975% |
| Hot standby + (1 + 1) indoor unit | 99.9990% |

Table 7 ‒ Availability calculations for a MW link with various redundancy options

Table 7 shows that the total availability is governed by the element with the smallest availability value.

Availability of a microwave link chain can be calculated considering the number of hops (displayed in Figure 11).

Figure 11 ‒ Availability calculation for a MW chain

Table 8 displays the result calculated for the Figure 11 scenarios and different redundancy types.

| Number of links (hops) | Non hot standby | Hot standby | Hot standby, 1 + 1 indoor unit |
|---|---|---|---|
| 1 | 99.9958% | 99.9975% | 99.9990% |
| 2 | 99.9923% | 99.9957% | 99.9980% |
| 3 | 99.9888% | 99.9940% | 99.9970% |

Table 8 ‒ Availability comparison for MW chain types

The methodology presented in this section can be used to calculate the availability of a microwave link by considering the equipment involved and the link characteristics.

Availability calculations can be performed using the Tx Network Availability Calculation Widget.
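The example values in Tables 6 to 8 can be reproduced with a short script applying Equations 4 and 5. The chain model is an assumption inferred from the table values: each hop contributes two outdoor units (ODUs) and one climatic factor (99.999%), and each site contributes one indoor unit (IDU), so a chain of n hops has 2n ODUs and n + 1 IDUs. Redundancy is modeled by raising the element's unavailability to the number of redundant units, and MTBF is converted to hours with 8,760 h per year.

```python
HOURS_PER_YEAR = 8760


def element_availability(mtbf_years: float, mttr_hours: float) -> float:
    """Eq. 4: A = MTBF / (MTBF + MTTR), with MTBF converted to hours."""
    mtbf_hours = mtbf_years * HOURS_PER_YEAR
    return mtbf_hours / (mtbf_hours + mttr_hours)


def with_redundancy(availability: float, units: int) -> float:
    """Failure probability raised to the number of redundant units."""
    return 1.0 - (1.0 - availability) ** units


def mw_chain_availability(hops: int, odu_units: int = 1, idu_units: int = 1,
                          climate: float = 0.99999) -> float:
    """Serial availability of a MW chain (assumed: 2n ODUs, n + 1 IDUs, n climate factors)."""
    odu = with_redundancy(element_availability(80, 6), odu_units)  # Table 6 ODU values
    idu = with_redundancy(element_availability(60, 4), idu_units)  # Table 6 IDU values
    return odu ** (2 * hops) * idu ** (hops + 1) * climate ** hops


configs = {
    "Non hot standby": (1, 1),
    "Hot standby": (2, 1),
    "Hot standby, 1 + 1 indoor unit": (2, 2),
}
for hops in (1, 2, 3):
    row = [f"{mw_chain_availability(hops, o, i) * 100:.4f}%" for o, i in configs.values()]
    print(hops, *row)
```

Changing the MTBF, MTTR, or climatic values lets an operator repeat the comparison with its own vendor data.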

4 E2E Process Flow

This section presents a generic yet customizable end-to-end process flow to design a transport network.

4.1 End-to-End Process Overview

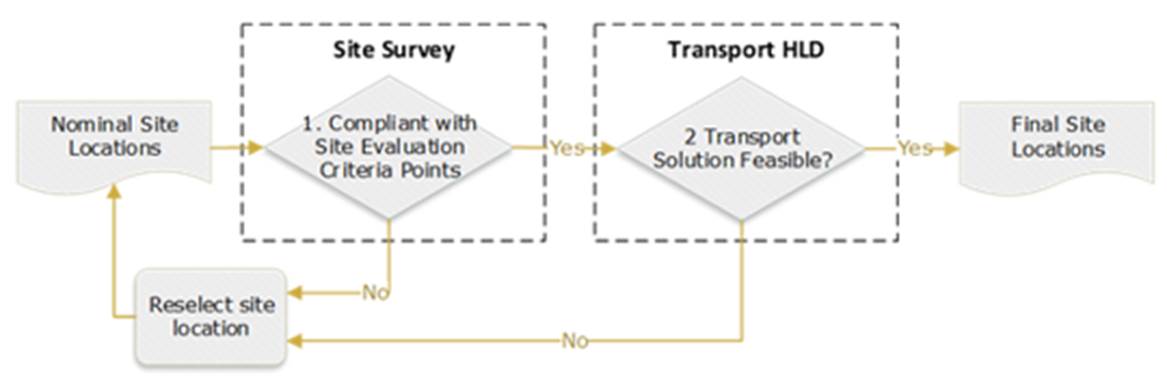

The generic end-to-end (E2E) process flow displayed in Figure 12 shows general tasks to perform a transport network HLD in a logical and well-structured sequence.

Figure 12 ‒ Generic E2E process flow

Step details and customization options are reviewed in the following sections. In addition, a Tx HLD Process Flow Designer is provided as part of the methods of engagement.

4.2 Step-by-Step Analysis

Based on NaaS operator requirements and constraints, this section examines each process step to identify, isolate, and describe the range of implementation options on the path toward customization. Dependencies among tasks are addressed in the corresponding subsection.

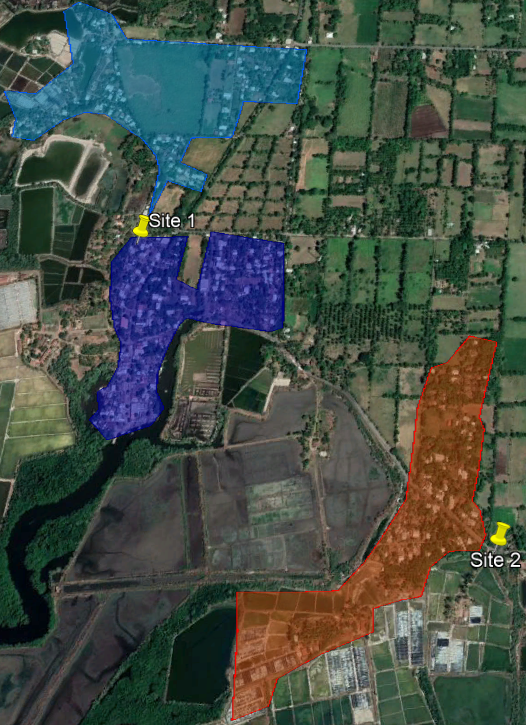

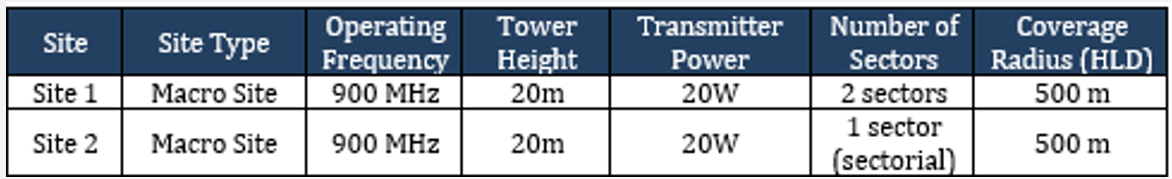

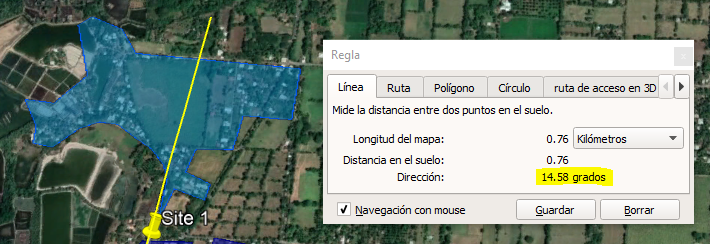

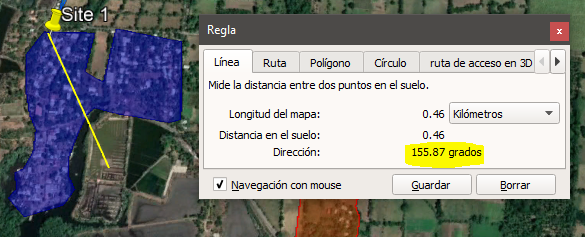

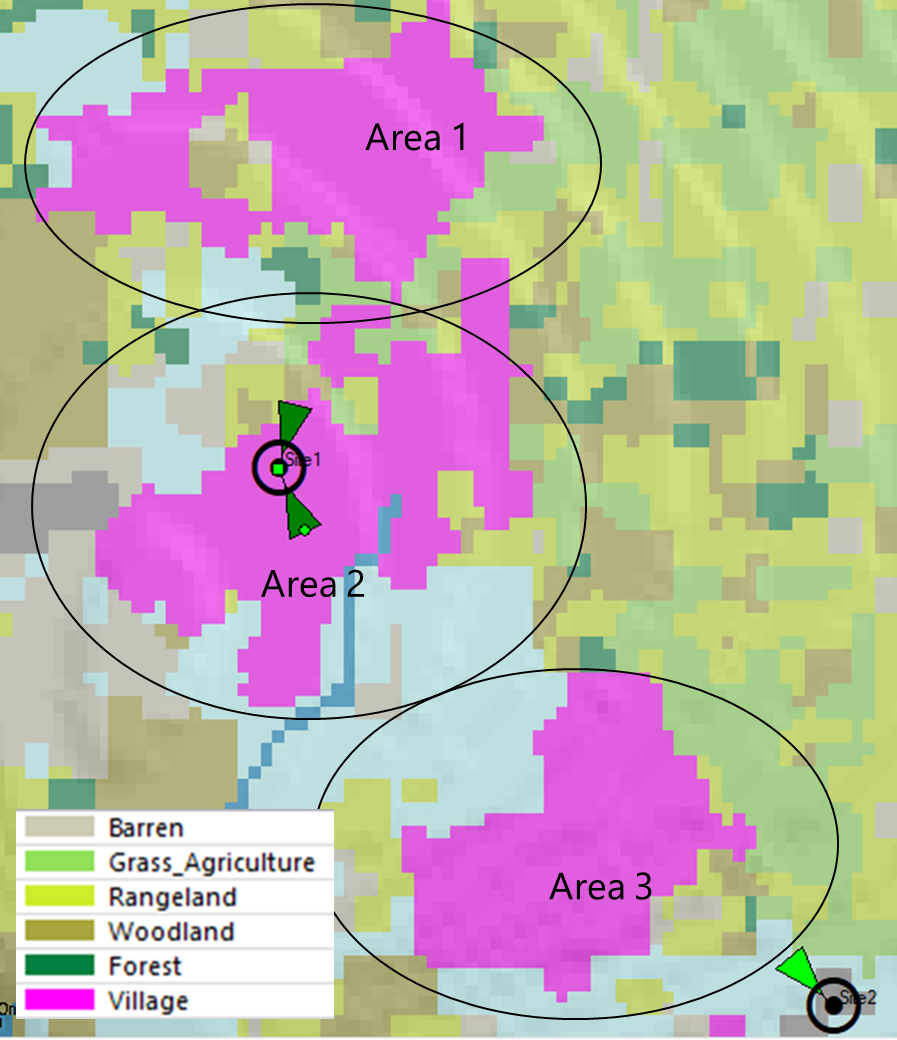

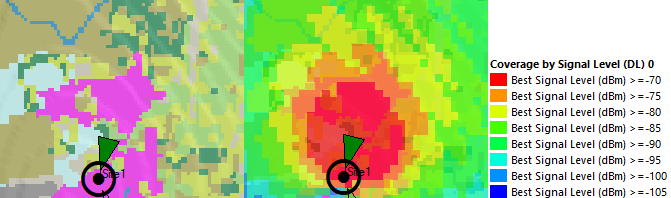

Design Example

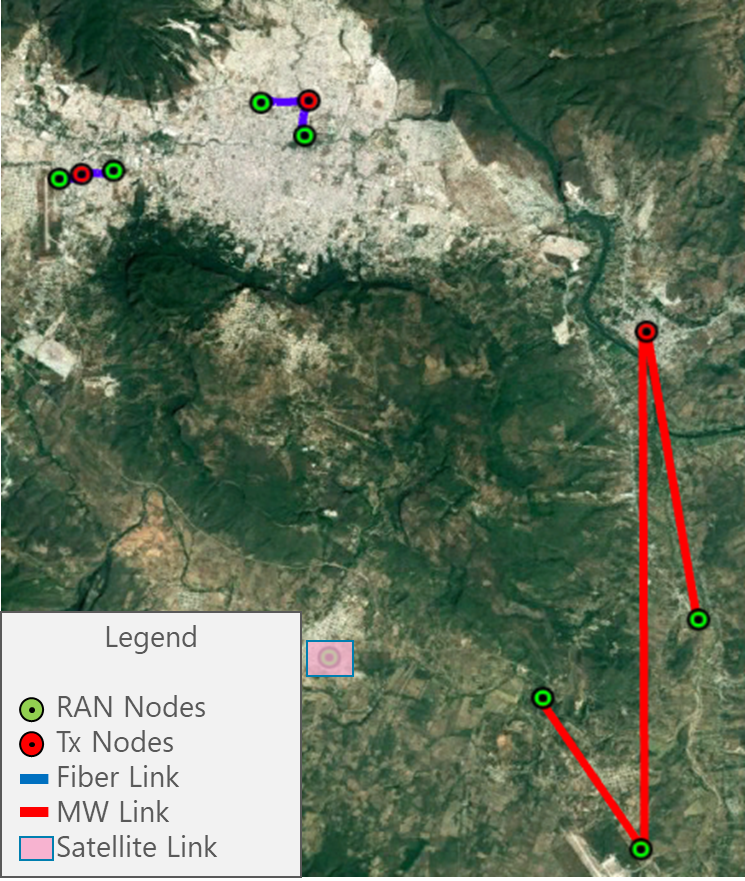

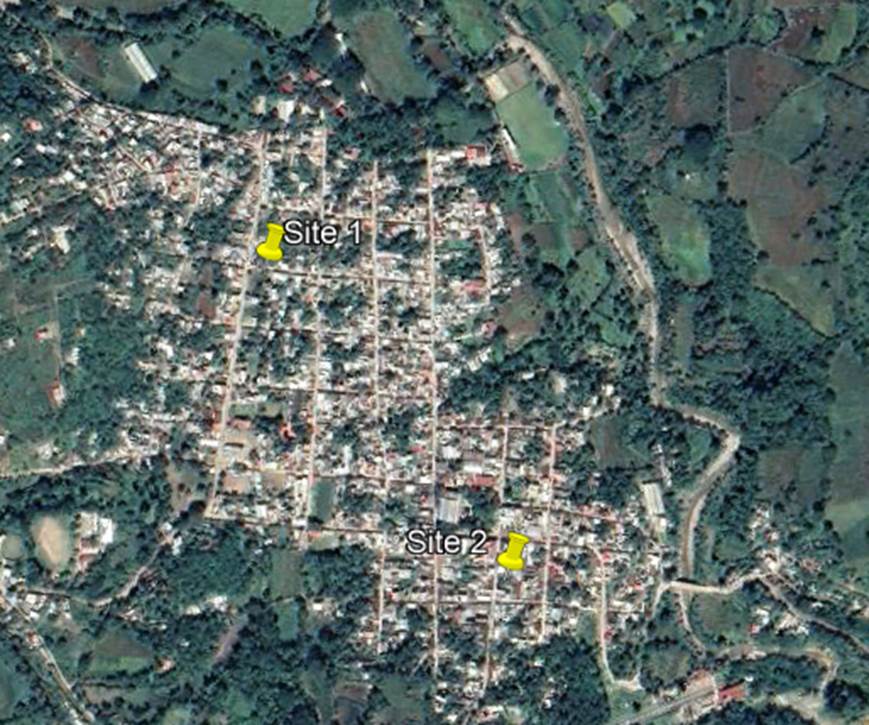

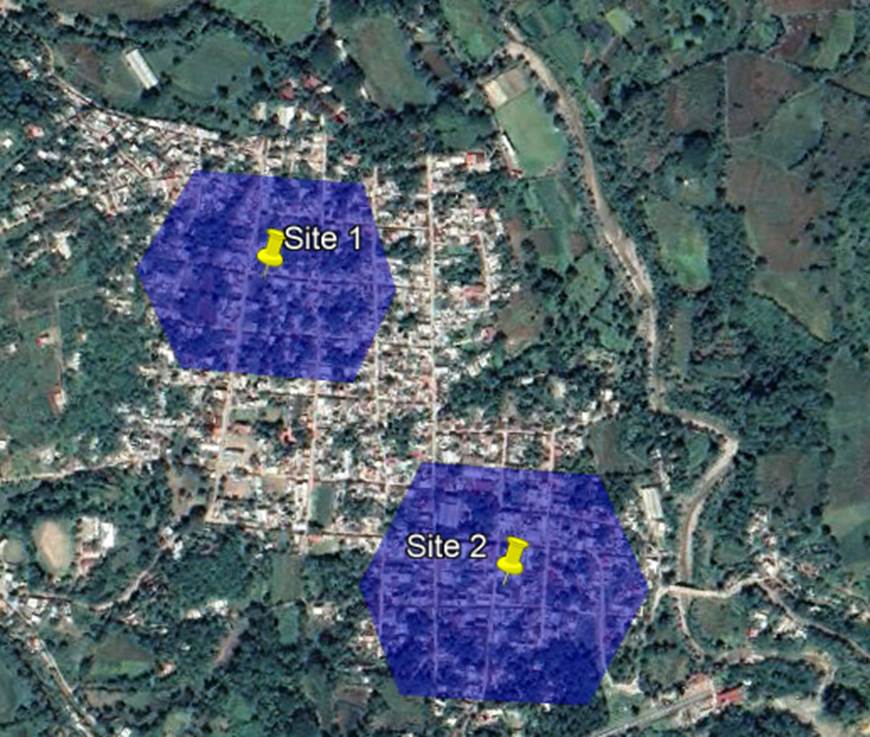

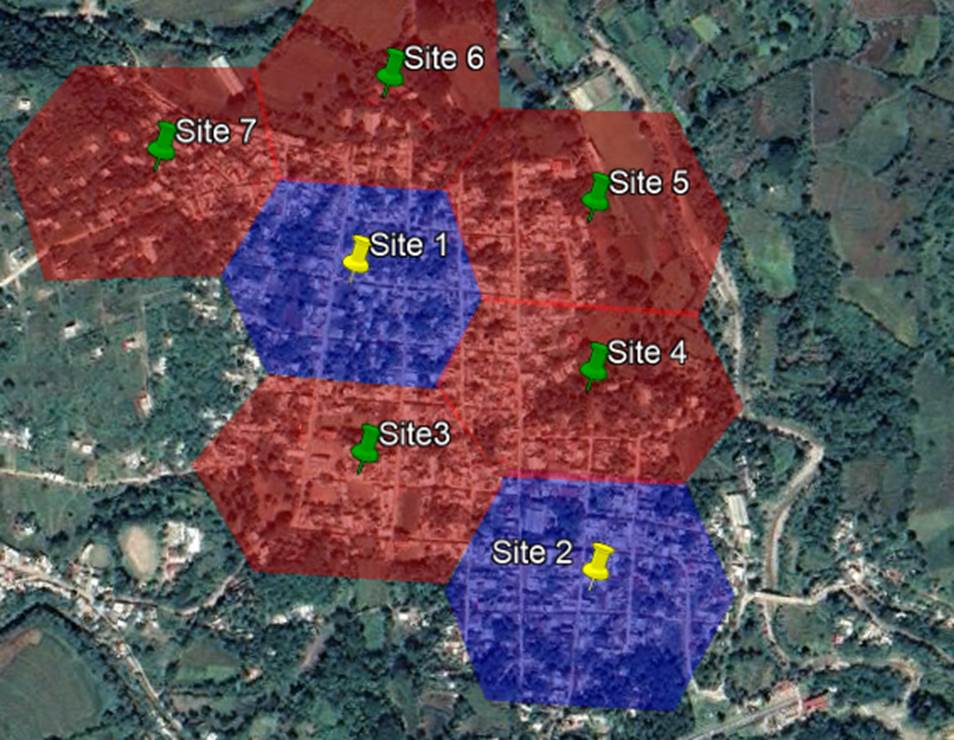

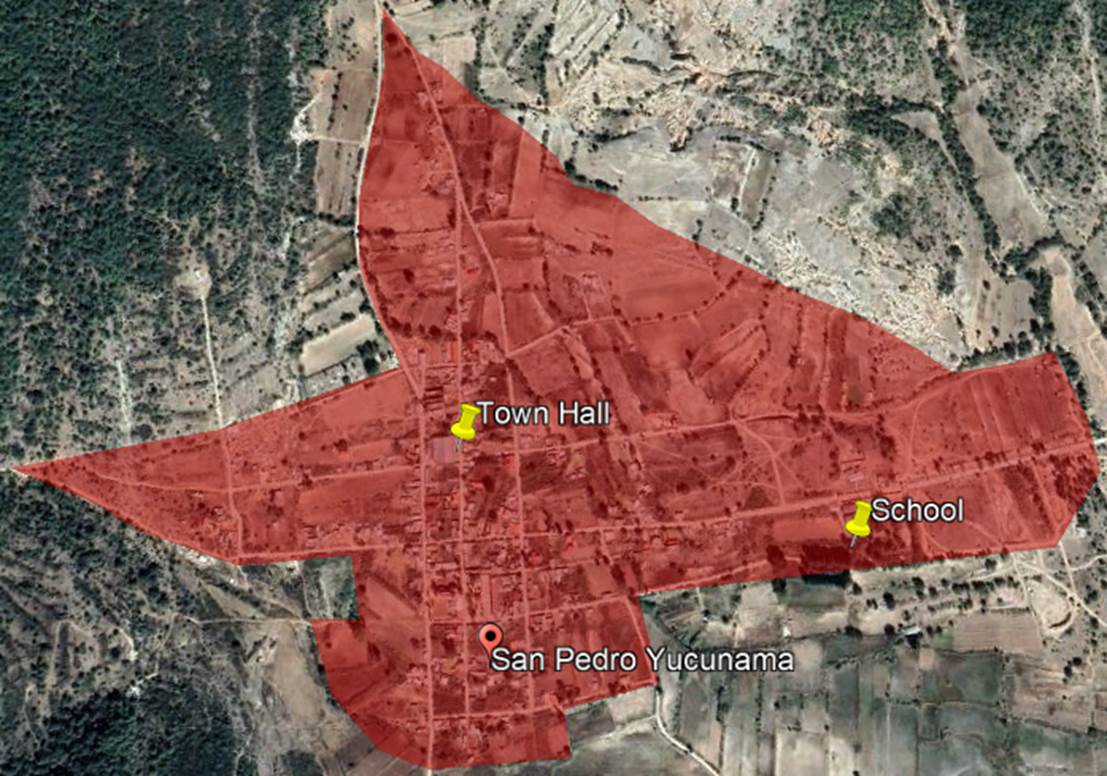

To demonstrate the design flow in practice, each process step was followed to create an HLD for the scenario presented in Figure 13.

Figure 13 ‒ HLD example

This scenario comprises eight RAN sites to be analyzed for the transport HLD. It considers three available transport nodes that provide connection to the core network. The technologies to be evaluated are fiber optic, microwave, and satellite. The total availability target for the transport network is 99.5%.

4.2.1 Service Level Requirements Definition

Operators must define service levels for each network domain (last-mile or aggregation). Satellite transport links must be treated as a special case. In the transport network, the main parameters that must be defined are latency, packet loss ratio, and availability.

Table 9 shows a definition example for three LTE deployment service levels.

| Link type | Latency, RTT (ms) | Packet loss ratio (%) | Availability (%) |
|---|---|---|---|
| Last-mile link | 40 | 0.1 | 99.9 |
| Aggregation link | 20 | 0.01 | 99.99 |
| Satellite link | 200 | 0.1 | 99.9 |

Table 9 ‒ Service requirement definition example

Design Example

Table 9 values will be considered in the design example.
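The sketch below encodes the Table 9 service levels and checks a link's predicted or measured values against them. It assumes RTT is expressed in milliseconds and that packet loss and availability are expressed in percent. The `check_link` helper and the sample link values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLevel:
    max_rtt_ms: float
    max_packet_loss_pct: float
    min_availability_pct: float


# Table 9 values (units assumed: ms, %, %)
SERVICE_LEVELS = {
    "last-mile": ServiceLevel(40, 0.1, 99.9),
    "aggregation": ServiceLevel(20, 0.01, 99.99),
    "satellite": ServiceLevel(200, 0.1, 99.9),
}


def check_link(domain, rtt_ms, packet_loss_pct, availability_pct):
    """Return the list of violated parameters; an empty list means compliant."""
    sl = SERVICE_LEVELS[domain]
    violations = []
    if rtt_ms > sl.max_rtt_ms:
        violations.append("latency")
    if packet_loss_pct > sl.max_packet_loss_pct:
        violations.append("packet loss")
    if availability_pct < sl.min_availability_pct:
        violations.append("availability")
    return violations


# Hypothetical values for one last-mile MW link
print(check_link("last-mile", rtt_ms=12, packet_loss_pct=0.05, availability_pct=99.95))
```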

Dependencies with other tasks

The Service Level Requirements Definition presents the following dependencies:

Prerequisites

Outputs

4.2.2 Tx Standard Configurations Definition

The purpose of this definition is to standardize the transport equipment configurations that can be implemented. Doing so constrains the design options and simplifies the overall process.

The level of detail for the possible scenarios must be defined when creating the configurations. Possible dimensions to use are transport technologies, Tx equipment vendor, RAN equipment, and transport vendor. It is highly recommended to limit the selection of available transport technologies, which minimizes the number of possible alternatives and simplifies the design process.

First perform a detailed identification of all possible scenarios. Next, identify general transport equipment characteristics for each scenario.
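The identification step can be sketched as a Cartesian product of the scenario dimensions. The dimension values below are hypothetical placeholders. An operator would substitute its own technologies, RAN configurations, and vendors.

```python
from itertools import product

# Hypothetical scenario dimensions; replace with the operator's own lists
technologies = ["fiber optic", "microwave", "satellite"]
ran_configs = ["1 sector LTE", "3 sector LTE"]
tx_vendors = ["Vendor A", "Vendor B"]

# Each combination of dimension values is one candidate scenario
scenarios = [
    {"technology": t, "ran_config": r, "tx_vendor": v}
    for t, r, v in product(technologies, ran_configs, tx_vendors)
]

for s in scenarios:
    print(s)
```

Three technologies produce 12 scenarios, and dropping one technology reduces the count to 8. This is why limiting the available technologies simplifies the design process.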

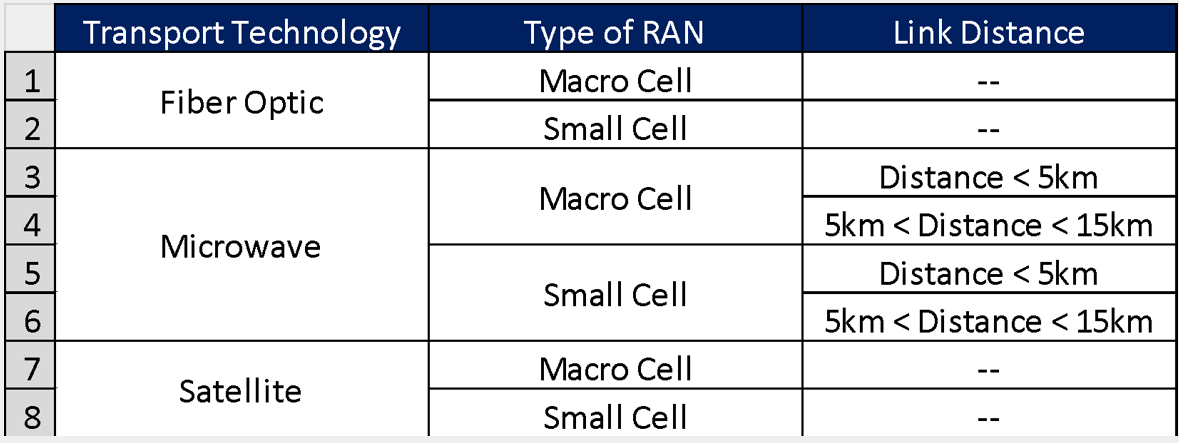

Figure 14 displays a brief example of the Standard Configurations Generation considering the available transport technologies and RAN configuration. A NaaS operator can use this example to customize its own definition process.

Figure 14 ‒ Example of Tx Standard Configurations Definition


Available transport technologies will be considered in creating the standard configurations. Table 10 shows the standard configurations considered in the design example for fiber optic equipment.

Table 10 ‒ Standard fiber optic configurations considered

Table 11 lists standard microwave equipment configurations considered in the HLD example.

Table 11 ‒ Standard microwave configurations considered

Table 12 shows standard satellite equipment configurations considered in the example.

Table 12 ‒ Standard satellite configurations considered

Dependencies with other tasks

The Transport Standard Configurations Definition presents the following dependencies:

Prerequisites

Outputs

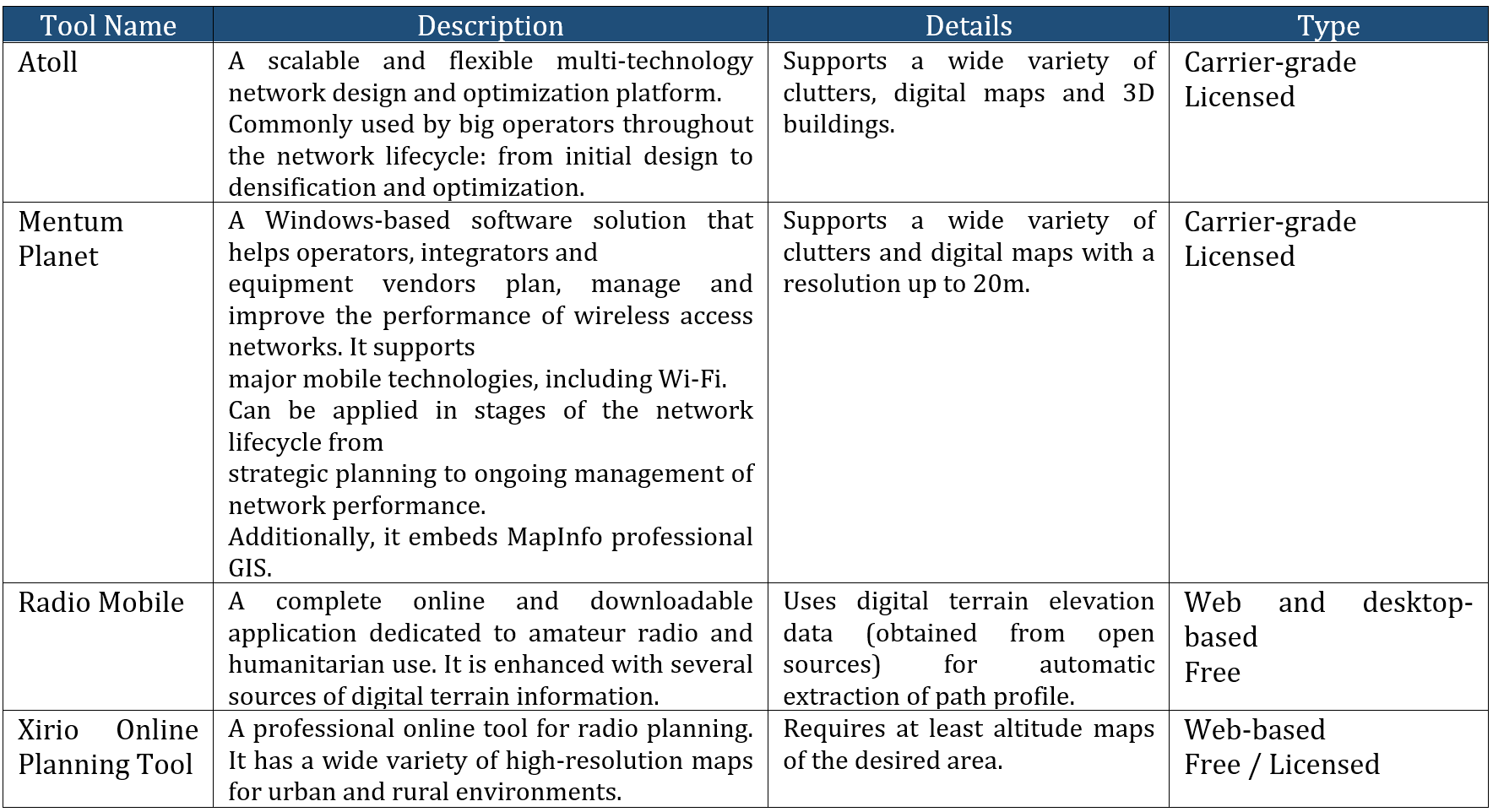

4.2.3 Design Tool Selection

This section provides general criteria for selecting the most suitable tool set and level of automation to be implemented during the design phase.

A classification of available tools is required to select the appropriate design tools to perform the transport HLD. Table 13 displays a generic tool type classification to serve as a base in performing the selection.

| Tool type | Implementation type | Type of license | Automation | Customization | Support type |
|---|---|---|---|---|---|
| Tier 1 | Commercial tool | License cost | High/Medium: batch mode, usually based on scripts | High: customization options provided by the developer | Dedicated support (extra charges can apply) |
| Tier 2 | Open-source code | Free | High/Medium: batch mode, usually based on scripts | Medium/High: customization options implemented by users | Wiki and community groups |
| Tier 3 | Web-based, APIs | Free (sometimes with daily usage limits) | Low: usually a one-by-one approach | Low: usually only proprietary parameters are defined | Limited support |
| Tier 4 | Home-grown (e.g., spreadsheet) | Free | Medium: automation options require long cycle times | Medium: customization options require long cycle times | No support |

Table 13 ‒ Tool type classification

Specific classifications for each transport solution are addressed in corresponding subsections.

Dependencies with other tasks:

Design Tool Selection presents the following dependencies:

Prerequisites

Outputs

Transport Solution Feasibility Analysis ‒ Selected tools from this section are used during a feasibility analysis.

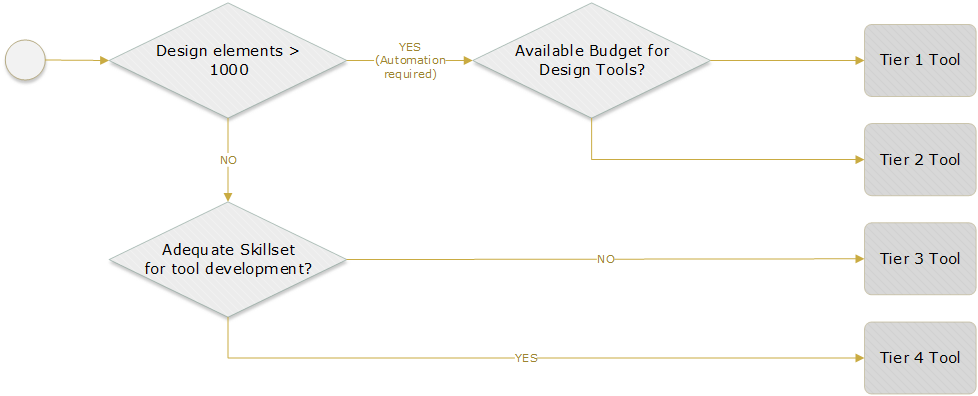

4.2.3.1 Tool Selection Process

The selection process must consider different aspects regarding project scope and NaaS operator characteristics. A list of examples follows:

Figure 15 displays a generic tool selection process. A NaaS operator can use this process as a basis for defining its own version according to its own priorities.
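A weighted scoring matrix is one common way to make such a selection repeatable. This is an assumed technique and is not necessarily the process shown in Figure 15. The criteria, weights, and 1 to 5 scores below are hypothetical and only loosely reflect the attributes in Table 13. For example, Tier 1 scores low on cost because of its license fee.

```python
# Hypothetical criterion weights (sum to 1.0)
WEIGHTS = {"cost": 0.3, "automation": 0.3, "customization": 0.2, "support": 0.2}

# Hypothetical 1-5 scores per tool tier (5 = best for this operator)
SCORES = {
    "Tier 1": {"cost": 1, "automation": 5, "customization": 5, "support": 5},
    "Tier 2": {"cost": 5, "automation": 4, "customization": 4, "support": 3},
    "Tier 3": {"cost": 4, "automation": 1, "customization": 1, "support": 2},
    "Tier 4": {"cost": 5, "automation": 2, "customization": 2, "support": 1},
}


def weighted_score(scores, weights):
    """Sum of each criterion score multiplied by its weight."""
    return sum(scores[c] * w for c, w in weights.items())


# Rank tiers from best to worst weighted score
ranking = sorted(SCORES, key=lambda t: weighted_score(SCORES[t], WEIGHTS), reverse=True)
for tier in ranking:
    print(tier, round(weighted_score(SCORES[tier], WEIGHTS), 2))
```

Changing the weights is how an operator expresses its own priorities. A large design with many links would, for instance, weight automation more heavily.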

Figure 15 ‒ Tool selection process

4.2.3.2 Fiber Path Design Tool Selection

Tools used to perform fiber path design are listed in Table 14 according to defined parameters.

| Tool type | Name | Description |
|---|---|---|
| Tier 2 | QGIS | Free and open-source, cross-platform desktop geographic information system (GIS) application that supports viewing, editing, and analysis of geospatial data |
| Tier 3 | Google Earth | Computer program that maps the Earth by superimposing satellite images, aerial photography, and GIS data onto a 3D globe, allowing users to see cities and landscapes from various angles |

Table 14 ‒ Tool classification for fiber optic path design

Following the methodology in Section 4.2.3, a NaaS operator can select a fiber path design tool.

Design Example

Google Earth will be the GIS tool used to perform the Fiber Path Design.

4.2.3.3 Microwave LOS Verification Tool Selection

Tools used to perform the microwave LOS verification are shown in Table 15 according to defined parameters.

| Tool type | Name | Description |
|---|---|---|
| Tier 3 | Google Elevation API | Provides elevation data for all locations on the surface of the Earth |
| Tier 3 | airLink | LOS evaluator to verify and validate LOS in MW links, available through a web-based interface |

Table 15 ‒ Tool classification for microwave LOS verification

Following methodology presented in 4.2.3, the NaaS operator can select a microwave LOS verification tool.

Design Example

airLink will be used to perform the LOS validation in the HLD example.

4.2.3.4 Satellite Coverage Verification Tool Selection Process

Tools used to perform satellite coverage verification are classified in Table 16 according to defined parameters. A GIS tool must be selected to validate satellite coverage. An additional tool can be used to calculate satellite parameters (e.g., look angles).

| Tool type | Name | Description |
|---|---|---|
| Tier 2 | QGIS | Free and open-source, cross-platform desktop geographic information system application that supports viewing, editing, and geospatial data analysis |
| Tier 3 | Satbeams | Provides satellite footprints of commercial satellites |

Table 16 ‒ Tool classification for satellite coverage verification

Following methodology presented in 4.2.3, a NaaS operator can select a satellite coverage verification tool.

Design Example

Satbeams will be used as the satellite coverage verification tool in the design example.

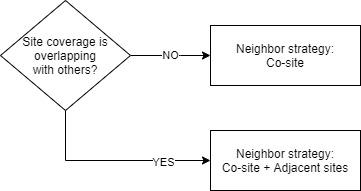

4.2.4 Transport Solution Feasibility Analysis

A Technology Feasibility Analysis helps determine the feasible alternatives, given an operator's available technologies. An operator can select one of the following approaches to perform the analysis:

Depending on characteristics of selected tools to perform technology evaluations, the analysis can be done in batch mode or in a one-by-one fashion.

Figure 16 displays a high-level view of approaches to perform the Transport Solution Feasibility Analysis.

Figure 16 ‒ Transport solution feasibility approaches

Design Example

The design example uses Approach 2. For each node, the analysis stops as soon as a transport technology is found feasible. Technologies are evaluated in the following order of preference: fiber optic, microwave, then satellite.
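The ranked, stop-at-first-feasible logic can be sketched as follows. The `is_feasible` callback is a hypothetical stand-in for the FO, MW, and satellite evaluations described in the next subsections. The site names and feasibility results are invented for illustration.

```python
# Technology ranking used in the design example (most preferred first)
RANKING = ["fiber optic", "microwave", "satellite"]


def select_technology(node, is_feasible):
    """Return the first technology in RANKING that is feasible for the node.

    is_feasible(node, technology) -> bool stands in for the per-technology
    evaluation; technologies after the first feasible one are never evaluated.
    """
    for technology in RANKING:
        if is_feasible(node, technology):
            return technology
    return None  # no feasible transport solution for this node


# Hypothetical feasibility results for three sites
FEASIBLE = {
    "Site 1": {"fiber optic", "microwave"},
    "Site 2": {"microwave", "satellite"},
    "Site 3": {"satellite"},
}
for site in FEASIBLE:
    print(site, select_technology(site, lambda n, t: t in FEASIBLE[n]))
```

Stopping early saves effort because the costlier evaluations, such as LOS surveys, are run only for nodes that fiber cannot serve.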

Dependencies with other tasks

Transport Solution Feasibility Analysis presents the following dependencies:

Prerequisites:

Outputs

4.2.4.1 Fiber Optic (FO) Technology Evaluation

- Based on the origin node, select a list of candidate transport nodes within a radius equal to FO Maximum Distance defined in the Architecture Module. FO Maximum Distance is a design parameter based on the maximum cost permissible to deploy fiber optic.

- For each candidate node, apply the methodology presented in section 2 to define the fiber path to candidate transport nodes. The candidate transport node list will only contain nodes with a FO path length less than FO Maximum Distance.

- Define FO Tx Node as the most suitable candidate considering commercial criteria.
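The first two steps can be sketched as a radius filter followed by a route-length filter. The code makes several assumptions. It uses a spherical Earth and great-circle (haversine) distances. Each route is a polyline of (lat, lon) waypoints, such as a path drawn in the GIS tool. `fo_max_km` plays the role of FO Maximum Distance. The node coordinates are hypothetical, and the commercial criteria in the third step are not modeled.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def path_length_km(waypoints):
    """Length of a fiber route drawn as a polyline of (lat, lon) waypoints."""
    return sum(haversine_km(p, q) for p, q in zip(waypoints, waypoints[1:]))


def fo_candidates(origin, nodes, routes, fo_max_km):
    """Keep nodes within the radius, then keep those whose route is shorter."""
    in_radius = [n for n, pos in nodes.items() if haversine_km(origin, pos) <= fo_max_km]
    return [n for n in in_radius if path_length_km(routes[n]) < fo_max_km]


# Hypothetical origin site, transport nodes, and drawn fiber routes
origin = (0.0, 0.0)
nodes = {"Node A": (0.0, 0.05), "Node B": (0.0, 0.5)}
routes = {
    "Node A": [origin, (0.02, 0.02), (0.0, 0.05)],
    "Node B": [origin, (0.0, 0.5)],
}
print(fo_candidates(origin, nodes, routes, fo_max_km=10))
```

The straight-line radius is only a cheap pre-filter. A node inside the radius can still be rejected when the real route, which follows roads or ducts, is longer than FO Maximum Distance.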

Figure 17 displays the FO Solution Evaluation process.

Figure 17 ‒ FO solution evaluation process

Design Example

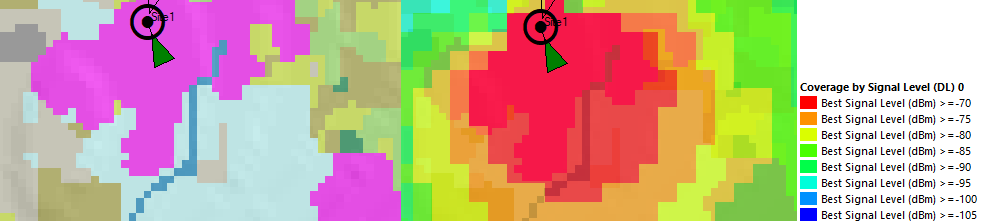

The Section 3.1 methodology is applied to evaluate FO technology, using Google Earth as the GIS tool. Figure 18 shows the analysis results: according to the requirements, four nodes can be served by a fiber optic link.

Figure 18 ‒ FO technology evaluation in design example

Analysis results are recorded in the Tx HLD Report Template.

4.2.4.2 MW Technology Evaluation

- Based on the origin node, select a list of candidate transport nodes within a radius equal to MW Maximum Distance defined in the Architecture Module. MW Maximum Distance is a design parameter based on the maximum link distance covered by MW equipment.

- For each candidate transport node, apply the methodology presented in section 2 to evaluate LOS for candidate transport nodes. This transport node list will only contain nodes with a MW link distance less than MW Maximum Distance.

- Define MW Tx Node as the most suitable candidate considering commercial criteria.
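A LOS tool such as airLink automates the clearance check. The simplified sketch below shows the geometry behind it. It assumes the following:

- An effective Earth-radius factor of k = 4/3.
- The commonly used rule of thumb that at least 60% of the first Fresnel zone must be clear. The operator's own clearance criteria may differ.
- A terrain profile of (distance in km, ground elevation in m) samples, for example from a DTE source.

The profile values are hypothetical.

```python
from math import sqrt


def fresnel_radius_m(d1_km, d2_km, f_ghz):
    """First Fresnel zone radius (m) at a point d1 and d2 km from each end."""
    return 17.32 * sqrt(d1_km * d2_km / (f_ghz * (d1_km + d2_km)))


def earth_bulge_m(d1_km, d2_km, k=4 / 3):
    """Apparent terrain rise (m) caused by Earth curvature for radius factor k."""
    return d1_km * d2_km / (12.74 * k)


def los_clear(profile, h_a_m, h_b_m, f_ghz, k=4 / 3, fresnel_fraction=0.6):
    """Check a terrain profile [(distance_km, elevation_m), ...] from site A to B.

    h_a_m, h_b_m: antenna heights above sea level (ground elevation + mast).
    Returns True if every intermediate point clears the required fraction of
    the first Fresnel zone.
    """
    total_km = profile[-1][0]
    for d1, ground_m in profile[1:-1]:
        d2 = total_km - d1
        beam_m = h_a_m + (h_b_m - h_a_m) * d1 / total_km  # straight line of sight
        obstacle_m = ground_m + earth_bulge_m(d1, d2, k)
        if beam_m - obstacle_m < fresnel_fraction * fresnel_radius_m(d1, d2, f_ghz):
            return False
    return True


# Hypothetical 10 km, 15 GHz path with 30 m masts on 100 m ground at both ends
profile = [(0, 100), (2, 110), (5, 120), (8, 105), (10, 100)]
print(los_clear(profile, 130, 130, f_ghz=15))
```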

Figure 19 displays MW Solution Evaluation process.

Figure 19 ‒ MW solution evaluation process

Design Example

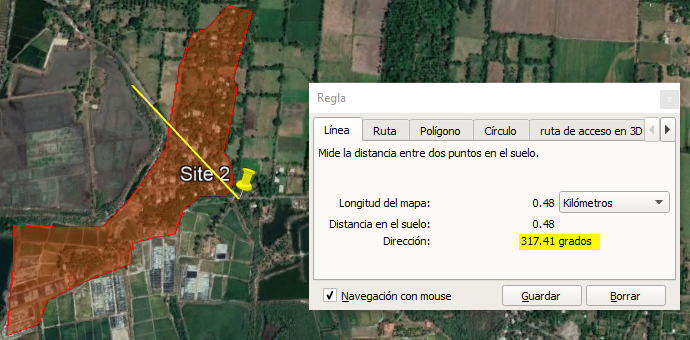

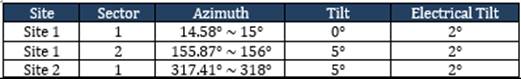

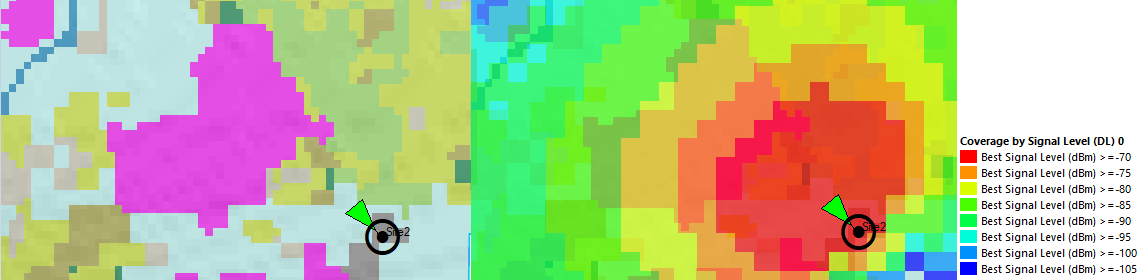

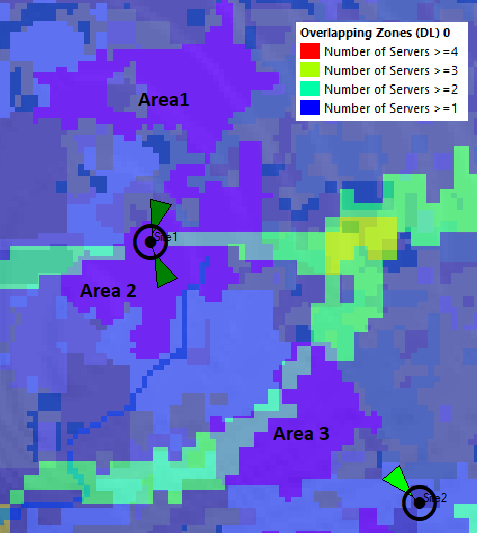

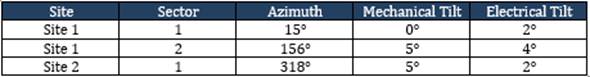

Using airLink as the LOS validation tool, the Section 3.2 methodology is applied to perform the MW technology evaluation. Figure 20 displays the analysis results: according to the requirements, three nodes can be served by a microwave link. The remaining three links are obstructed, so a microwave link is not feasible for them.

Figure 20 ‒ MW technology evaluation in design example

Analysis results are recorded in the Tx HLD Report Template.

4.2.4.3 Satellite Technology Evaluation

- Based on the origin node, apply section 2 methodology to evaluate satellite coverage in all available solutions.

- Define Satellite Tx Solution as the most suitable candidate considering commercial criteria.

Figure 21 displays the Satellite Solution Evaluation process.

Figure 21 ‒ Satellite solution evaluation process

Design Example

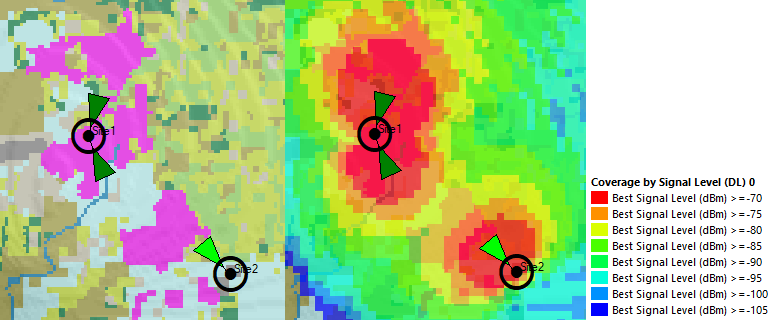

The Section 3.3 methodology is applied to perform the satellite link validation using the footprints available on Satbeams. Figure 22 shows that, according to the requirements, a satellite link is feasible for the node under analysis.

Figure 22 ‒ Satellite technology evaluation

Look angles for the sites are displayed in Table 17.

Table 17 ‒ Look angles calculated for the design example
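Look angles such as those in Table 17 can be approximated with the sketch below. Its assumptions are a geostationary satellite, a spherical Earth, and a site at sea level, so the results are approximate. The method expresses the site-to-satellite vector in the site's local east/north/up axes. Azimuth is measured clockwise from true north. The coordinates in the usage example are hypothetical.

```python
from math import atan2, cos, degrees, hypot, radians, sin

EARTH_RADIUS_KM = 6378.137  # equatorial radius (spherical-Earth simplification)
GEO_RADIUS_KM = 42164.0     # geostationary orbit radius from Earth's center


def look_angles(site_lat, site_lon, sat_lon):
    """Azimuth and elevation (degrees) from a ground site to a GEO satellite."""
    lat, lon, slon = map(radians, (site_lat, site_lon, sat_lon))
    # Earth-centred coordinates of the site
    sx = EARTH_RADIUS_KM * cos(lat) * cos(lon)
    sy = EARTH_RADIUS_KM * cos(lat) * sin(lon)
    sz = EARTH_RADIUS_KM * sin(lat)
    # Vector from the site to the satellite (satellite lies in the equatorial plane)
    dx = GEO_RADIUS_KM * cos(slon) - sx
    dy = GEO_RADIUS_KM * sin(slon) - sy
    dz = -sz
    # Rotate into local east/north/up axes at the site
    east = -sin(lon) * dx + cos(lon) * dy
    north = -sin(lat) * cos(lon) * dx - sin(lat) * sin(lon) * dy + cos(lat) * dz
    up = cos(lat) * cos(lon) * dx + cos(lat) * sin(lon) * dy + sin(lat) * dz
    azimuth = degrees(atan2(east, north)) % 360
    elevation = degrees(atan2(up, hypot(east, north)))
    return azimuth, elevation


# Hypothetical site at 40 deg N, 0 deg E looking at a satellite at 0 deg E
az, el = look_angles(40.0, 0.0, 0.0)
print(f"Azimuth {az:.1f} deg, elevation {el:.1f} deg")
```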

The results of the analysis are recorded in the Tx HLD Report Template.

4.2.5 Physical Topology Design

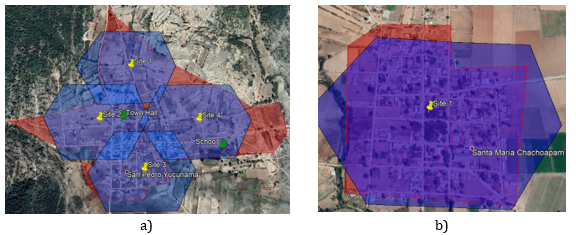

Depending on the available transport solutions and how they are combined during the feasibility analysis, several transport network topologies are possible. Figure 23 presents some examples.

Figure 23 ‒ Examples of transport network topologies

The following set of tasks must be performed for each transport link to create the physical transport design:

Design Example

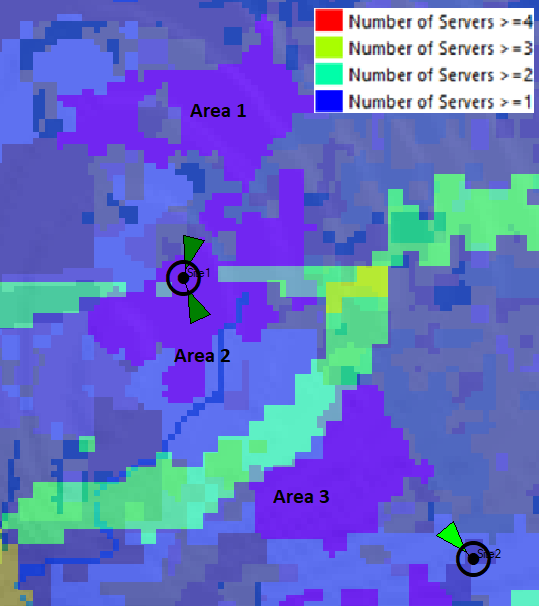

Figure 24 presents the three physical topologies identified for the design example.

Figure 24 ‒ Topology scenarios presented in the design example

Table 18 displays corresponding availability calculations for each scenario, as well as the total availability for the transport network. The transport network design complies with the availability requirement of 99.5%.

Table 18 ‒ Availability calculations for design example

Data presented in Table 18 were calculated using the Tx Network Availability Calculation Widget.

Dependencies with other tasks

Physical Topology Design presents the following dependencies:

Prerequisites

Outputs

4.2.6 Capacity Planning

Once the physical topology is defined, the traffic that each transport link must support can be calculated and the link capacity dimensioned accordingly. The Section 3.4 methodology can be applied, considering the total RAN site traffic that each link aggregates.
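In a tree-shaped topology, each link carries the traffic of every RAN site downstream of it. The sketch below assumes that structure and uses hypothetical site names and traffic values. It does not apply the overbooking or growth factors that the Section 3.4 methodology may include.

```python
def link_loads(parent, site_traffic_mbps):
    """Aggregate RAN site traffic onto each transport link of a tree topology.

    parent maps each node to its upstream node (None for the transport node
    connected to the core). Each non-root node owns the link towards its
    parent, which carries the node's own traffic plus all downstream traffic.
    """
    loads = {node: 0.0 for node, up in parent.items() if up is not None}
    for node, traffic in site_traffic_mbps.items():
        hop = node
        while parent[hop] is not None:  # walk up towards the root
            loads[hop] += traffic
            hop = parent[hop]
    return loads


# Hypothetical topology: Site 3 -> Site 2 -> Site 1 -> Tx Node, Site 4 -> Site 1
parent = {"Tx Node": None, "Site 1": "Tx Node", "Site 2": "Site 1",
          "Site 3": "Site 2", "Site 4": "Site 1"}
traffic = {"Site 1": 50, "Site 2": 30, "Site 3": 20, "Site 4": 40}
print(link_loads(parent, traffic))
```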

Design Example

Table 19 displays capacity calculations for each transport link in the design example. All links comply with requirements established in Section 4.2.1.

Table 19 ‒ Capacity Analysis in Design Example

Data presented in Table 19 were calculated using the Tx Network Capacity Forecast Widget.

Dependencies with other tasks

Capacity planning presents the following dependencies:

Prerequisites

Outputs

4.2.7 Transport Solution Definition

The Transport Solution Definition helps determine the final transport technology to be implemented in a specific node. The operator must consider the following solution characteristics in performing the selection:

Figure 25 is an example of a Transport Solution Definition Process that can be adapted by operators based on available technologies and commercial criteria.

Figure 25 ‒ Transport solution definition process

To select the Tx equipment, create a mapping between the defined transport solution characteristics and the transport standard configurations. The results of this task determine the total number and type of transport equipment required for the deployment.
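The mapping can be sketched in two steps. First, look up each node's selected solution in a table of standard configurations. Second, count the equipment by type. The configuration names and equipment lists are hypothetical, and each list is assumed to describe the equipment needed at one site.

```python
from collections import Counter

# Hypothetical standard configurations: (technology, variant) -> equipment per site
STANDARD_CONFIGS = {
    ("fiber optic", "1 GbE"): ["Cell-site router", "SFP 1G LX"],
    ("microwave", "1+0"): ["IDU", "ODU", "Antenna 0.6 m"],
    ("satellite", "VSAT"): ["Satellite modem", "BUC", "LNB", "Antenna 1.8 m"],
}


def equipment_totals(node_solutions):
    """Count equipment by type for a {node: (technology, variant)} selection."""
    totals = Counter()
    for solution in node_solutions.values():
        totals.update(STANDARD_CONFIGS[solution])
    return totals


# Hypothetical transport solution per node
node_solutions = {
    "Site 1": ("fiber optic", "1 GbE"),
    "Site 2": ("microwave", "1+0"),
    "Site 3": ("microwave", "1+0"),
    "Site 4": ("satellite", "VSAT"),
}
totals = equipment_totals(node_solutions)
print(dict(totals))
```

The resulting counts are the kind of per-type quantities that feed the BOQ in Section 4.2.8.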

Design Example

The methodology presented above is applied to define the transport solution in the design example. Figure 26 displays the selected transport technology for each node.

Figure 26 ‒ Transport solution definition in design example

Analysis results are recorded in the Tx HLD Report Template.

Dependencies with other tasks

Transport Solution Definition presents the following dependencies:

Prerequisites

Outputs

4.2.8 Tx Equipment Bill of Quantities (BOQ) Generation

A BOQ is a comprehensive registry of transport solutions to be implemented during the deployment phase. The following is a high-level list of information to include in the BOQ record:

4.2.8.1 BOQ Best Practices

Following are key requirements that BOQ management should address to optimize the process:

A NaaS operator can use the Tx HLD Report Template, which includes a section for the BOQ, as a basis for creating its own version.

Design Example

The methodology above is used to define the transport equipment BOQ. Table 20 shows the fiber optic equipment BOQ.

Table 20 ‒ Fiber Optic Equipment BOQ

Table 21 shows the microwave equipment BOQ.

Table 21 ‒ BOQ of microwave equipment

Table 22 details the satellite equipment BOQ.

Table 22 ‒ BOQ of satellite equipment

4.3 NaaS operator End-to-End Process Definition

A NaaS operator can use the generic process design as a basis for developing its own version according to its limitations and constraints. A deeper analysis can be performed when adapting the generic process.

Analyzing the generic process design, activities can be classified into two groups:

The approach presented in this section focuses on continuous activities, as they consume the majority of the resources. Following are some guidelines the NaaS operator should consider in customizing its own process:

It is highly recommended that the NaaS operator perform a critical path analysis of its process version to further optimize it.

5 HLD Recommendation

Methodology to consolidate and generate a final HLD recommendation.

5.1.1 HLD Recommendation Format & Structure Generation

The main deliverable is the HLD recommendation. It contains the overall technical solution, basic design rules, and technologies and concepts required to describe the transport network. The HLD recommendation should contain the following aspects:

5.1.2 HLD Recommendation Generation

The HLD recommendation is generated following the established format and structure. A NaaS operator can use the Tx HLD Report Template as a reference to create its own version.

1. Tx Network LLD

The Tx Network Low-level Design (LLD) module provides NaaS operators with background information and methodologies to elaborate a detailed design that contains the information required to implement the transport network solution. It provides methodologies and instructions to determine the actual low-level design parameters necessary to develop the Tx Network LLD.

Since the Tx Network LLD is required for the installation and commissioning of the transport equipment, it has a major impact on the transport network deployment. Therefore, a precise LLD is required to avoid delays in the deployment phase.

NaaS operators vary in size, geography, and network architecture, so there is no one-size-fits-all methodology. For this reason, a generic end-to-end process flow for the Tx Network LLD is presented, along with guidelines for tailoring it to each NaaS operator's requirements to produce best-in-class LLDs.

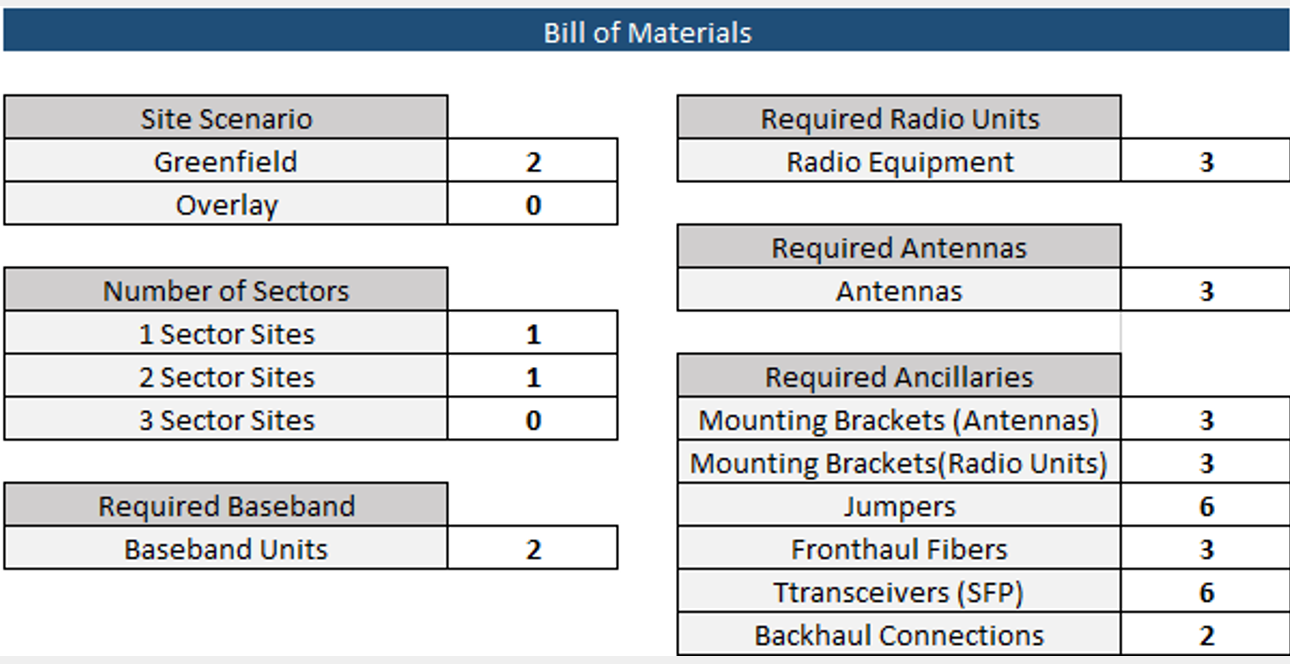

The main output of the module is the Tx Network LLD, which includes, among other items, the Bill of Materials and the Transport Datafills, which integrate the information required to implement the transport solution. In addition, this module guides the NaaS operator through the process of generating technically compliant LLD reports.

1.1 Module Objectives

This module will enable a NaaS Operator to stand-up, run, and manage a Tx Network LLD initiative. The specific objectives of this module are to:

1.2 Module Framework

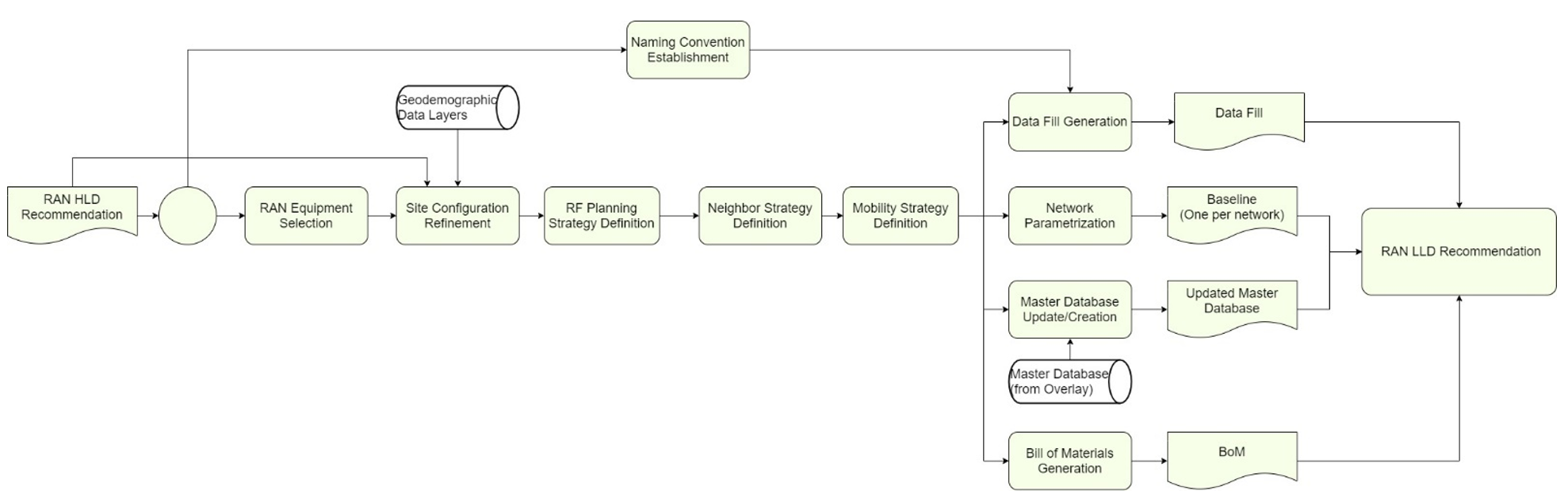

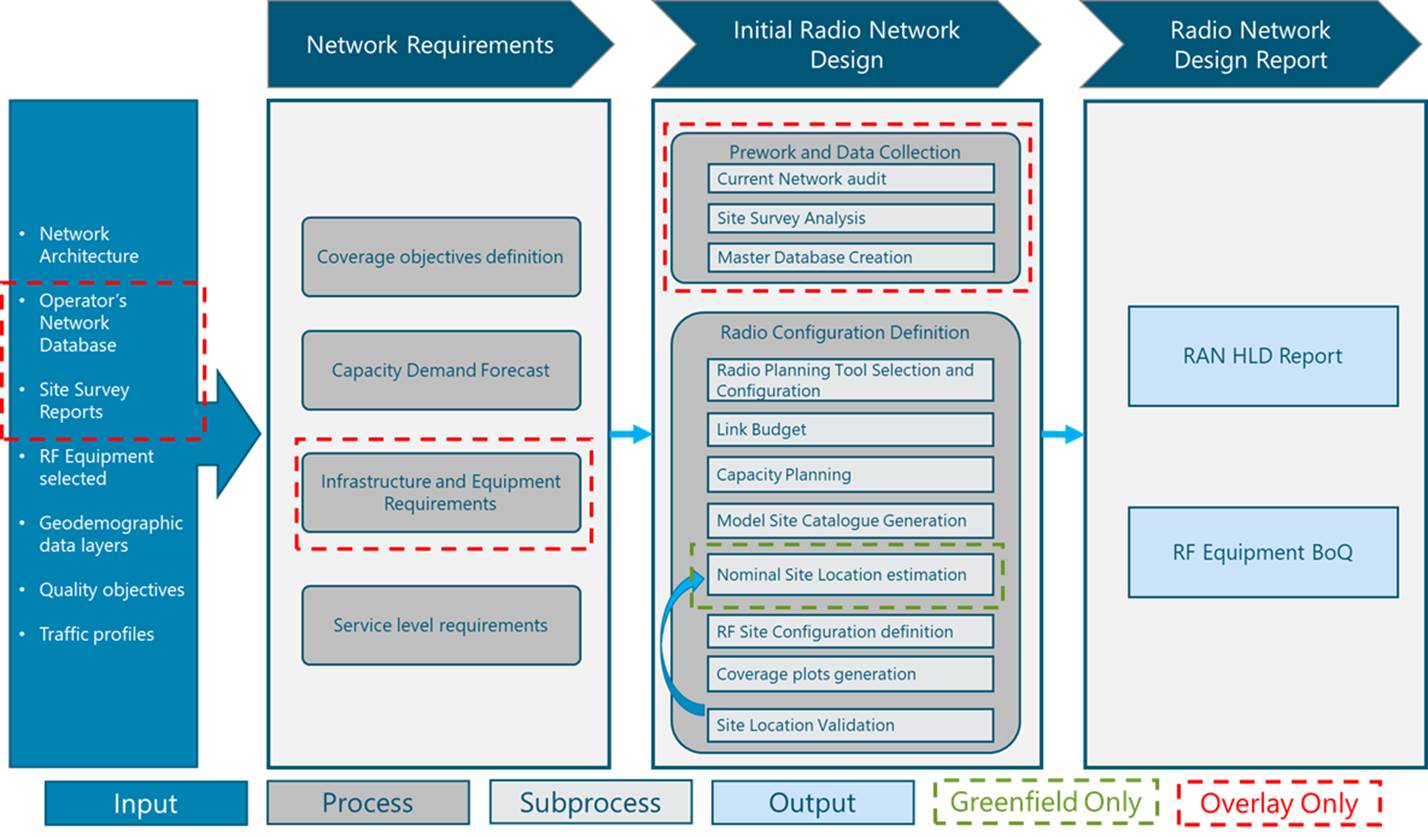

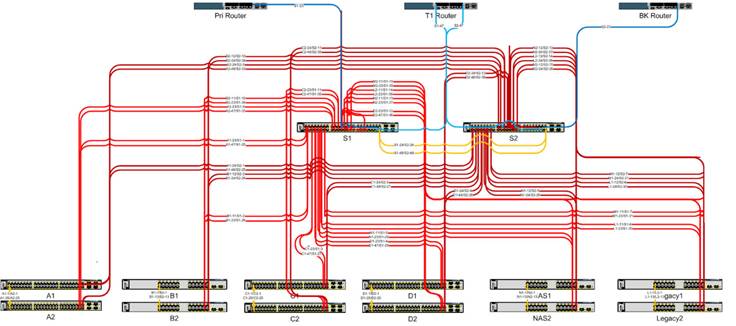

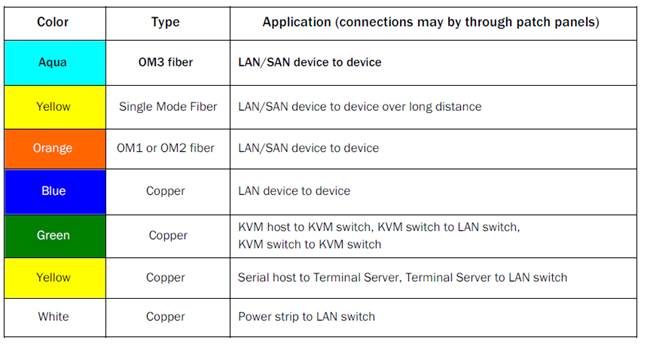

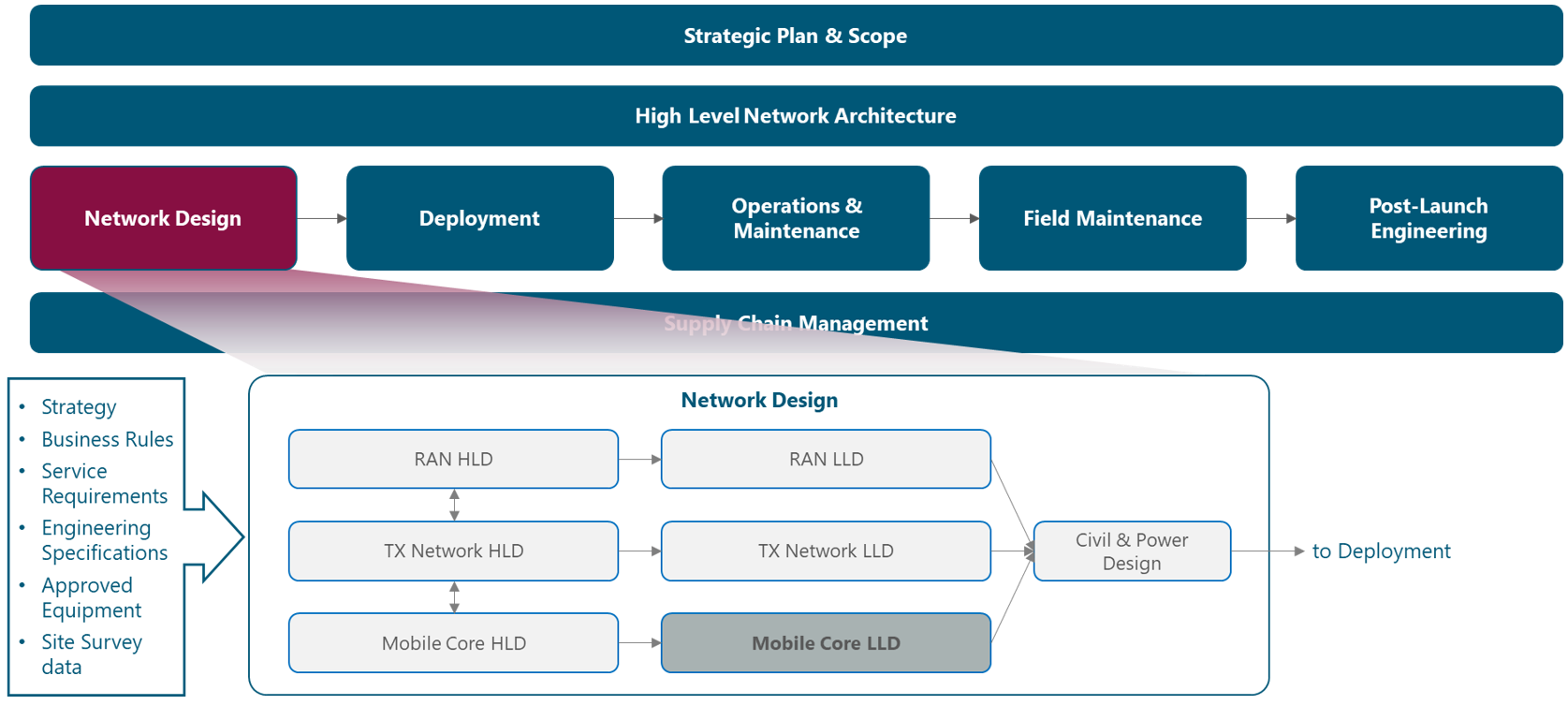

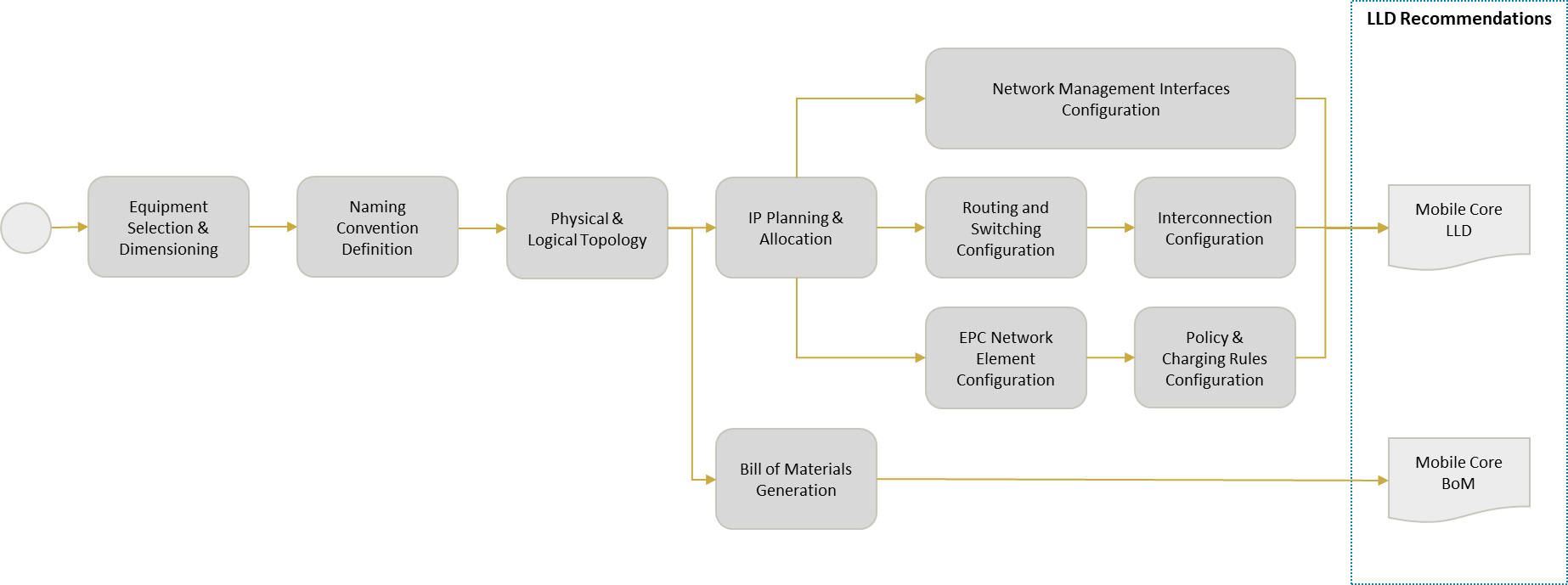

The Module Framework in Figure 1 describes the structure, interactions and dependencies among different NaaS operator areas.

Strategic Plan & Scope and High Level Network Architecture drive the strategic decisions for subsequent phases. Network Design is the first step of the implementation strategy, which is supported by Supply Chain Management.

The Tx Network LLD module is included within the Network Design Area and has direct relation with Tx Network. The generated output of this module will serve as a required input for the Civil & Power Design module.

Figure 1. Module Framework

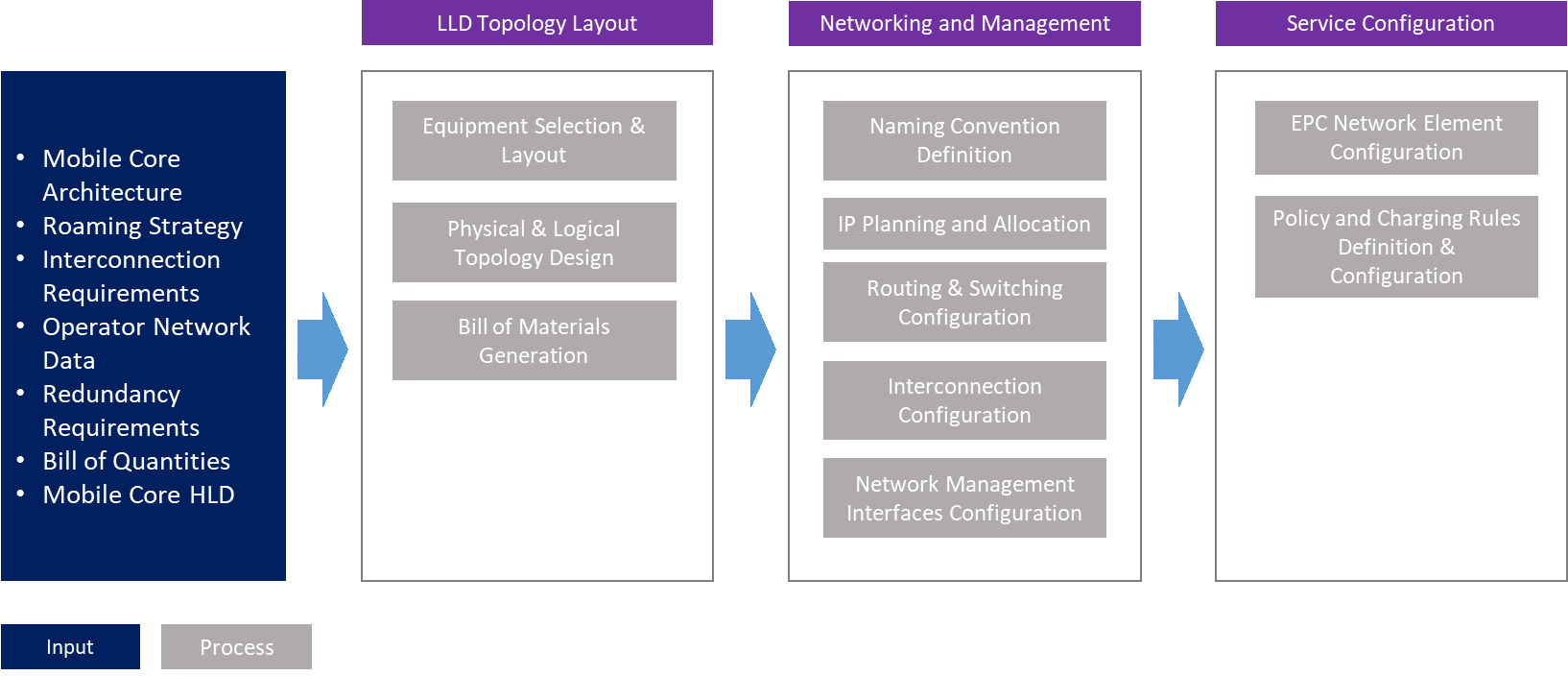

Figure 2 presents the Tx Network LLD functional view where the main functional components are exhibited. Critical module inputs are further described and examined in Section 2.2. In addition, guidance and methodologies to execute the tasks included within each function are described in Section 3.

Figure 2. Module Functional View

The rest of the module is divided into four sections. Section 2 is a bird's-eye view of the Tx network fundamentals involved in the design. Once the fundamentals are covered, Section 3 gives a hands-on view of the tasks involved in the design. Section 4 organizes the functional tasks into an end-to-end process flow that NaaS operators can use as-is or adapt to their particular conditions. Finally, Section 5 illustrates how to integrate the previous elements into a comprehensive Low-level Design (LLD) recommendation.

2 LLD Fundamentals

This section provides a general overview of the baseline concepts to develop a Tx Network Low-level Design.

2.1 Tx Network Environment

In a mobile environment, the Transport Network interconnects different networks including the RAN (Radio Access Network), data centers and external networks. Figure 3 displays the architecture of a typical transport network.

Figure 3. Typical Transport Network

Mobile networks are ubiquitous and support a mix of traffic types originating from and terminating at mobile devices. All this traffic must be conveyed from the cellular base stations through the transport network to the mobile core. For this reason, the transport network has different aggregation levels, which for most NaaS operators can be classified as last-mile and aggregation.

The implementation of 4G Long-Term Evolution (LTE) imposes requirements on transport networks, such as higher capacity and lower latency. These requirements are best served by terrestrial technologies (fiber optic and microwave). In rural areas, however, this is a challenge because satellite transport is usually the only feasible technology. For this reason, transport network infrastructure is an essential component of the NaaS operator network, and its design must follow optimal processes and techniques.

2.2 Tx Network Low-level Design Inputs Description

This section analyzes the module input data and their candidate sources, which are presented in Table 1. It also examines the impact of the module inputs on the design process.

| Input required | Description | Candidate source | Impact |
|---|---|---|---|
| Tx & IP Architecture | Includes the technologies and protocols to be considered in the design | Tx & IP Architecture Module | Establishes the available transport technologies to be designed and their respective design guidelines. |
| Tx HLD Design | Describes the high-level Tx solution (transport technology, transport nodes) | Tx HLD Module | The type and total number of Tx links to be designed determine which tool is selected to perform the Tx Link Design. |
| RAN HLD Design | Describes the parameters of the RAN solution (site location, RAN equipment, covered population) | RAN HLD Module | The RAN equipment model has a direct impact on Model Site Catalogue generation. |
| Transport Provider Network Data | Contains specific information about Tx nodes (name, IP addresses, port, S-VLAN) | Transport Provider Network Database | The transport node data is used during the Datafill generation process. |
| Tx Equipment Technical Data | Contains a list of candidate Tx equipment for all involved vendors | Tx Equipment Vendor | Establishes the transport (transmission and IP) equipment to be evaluated. This has a direct impact on Transport Equipment Selection and Model Site Catalogue generation. |
| General IP Address Distribution | Defines the general segments for transport equipment and services | Tx & IP Architecture Module | The General IP Address Distribution is used in the IP Planning and Tx Network Resources Allocation steps. |

Table 1. Tx LLD Module inputs analysis

2.3 Tx Link Design

Transport links are subject to multiple phenomena (e.g. meteorological) that have a direct impact on the link performance. Therefore, an accurate Tx Link Design must be performed to ensure that the transport link will behave within the performance thresholds under different conditions.

This process must be performed individually for each transport link according to the engineering guidelines from Transport & IP Architecture. Furthermore, this process uses specialized link design tools (See Section 4.2.3 for further details) that consider additional parameters (e.g. weather conditions) and their impact on the transport link performance. Each transport technology has its particular parameters that must be calculated in order to maintain the transport link operating in optimal conditions.

Tx Link Design for fiber and microwave technologies is performed within this module to validate site feasibility and to confirm physical and logical parameters. In contrast, for satellite technology, the most common implementation scenario is to use a satellite service provider, and feasibility is determined by the satellite footprint. Furthermore, the detailed satellite link design is performed by the satellite service provider and is included as part of the equipment delivery.

2.4 Network Design

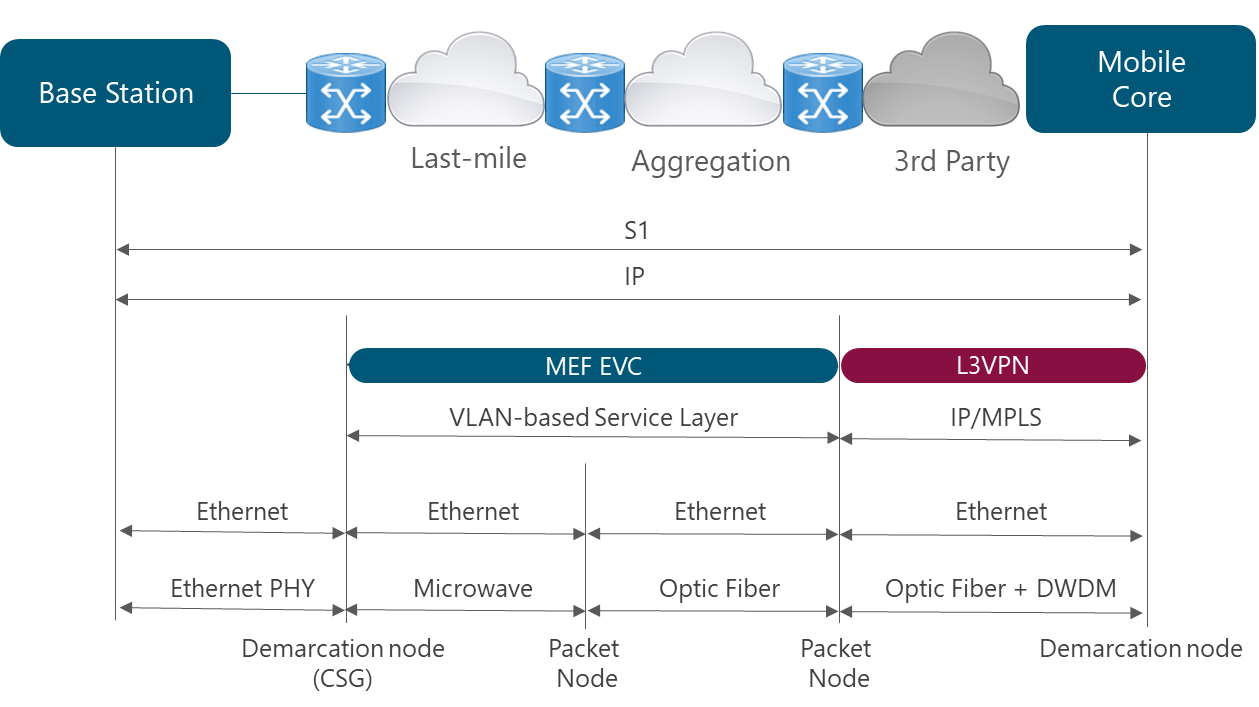

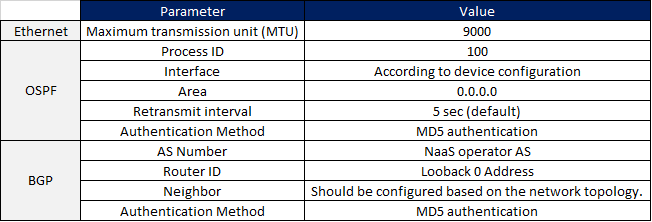

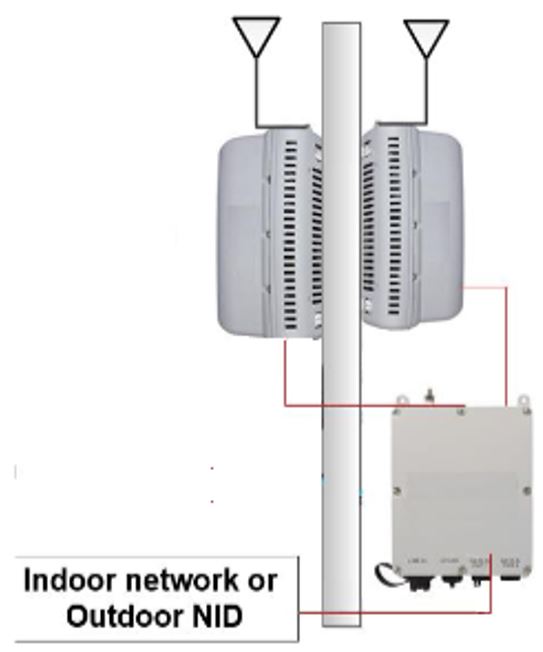

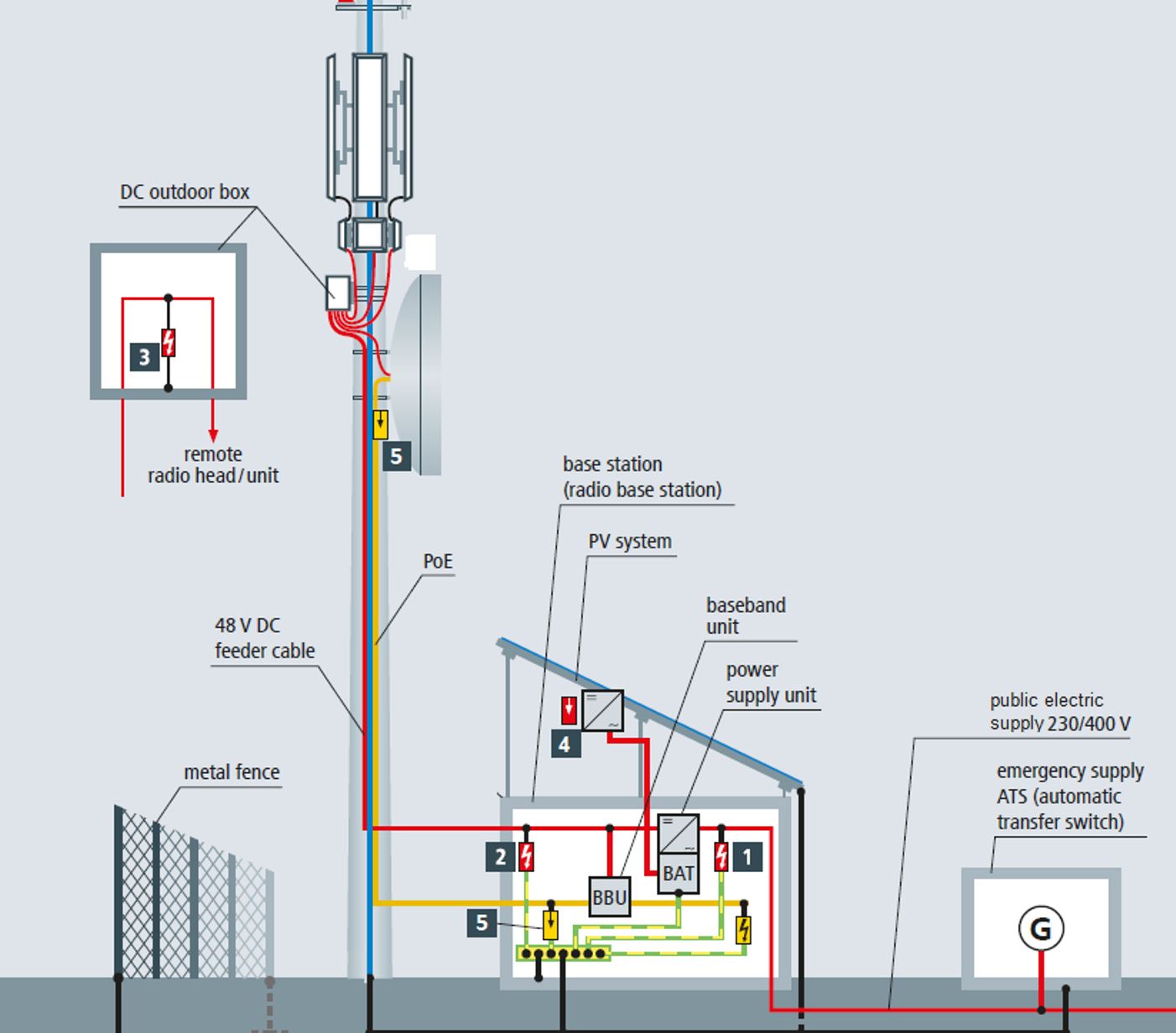

The most common implementation scenario in the transport network is displayed in Figure 4.

Figure 4. Transport network scenario based on Carrier Ethernet with L3VPNs

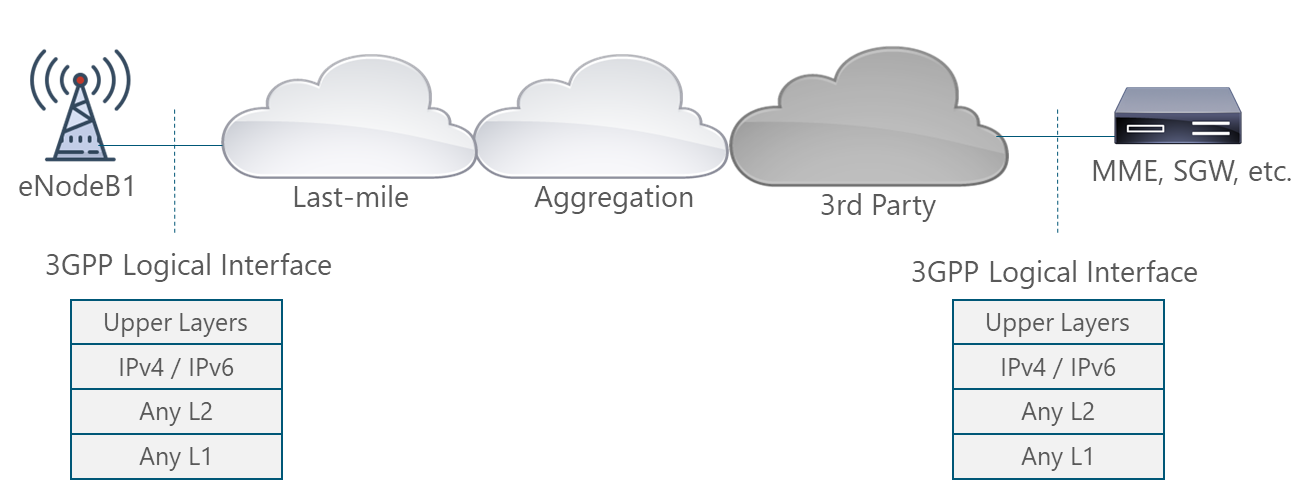

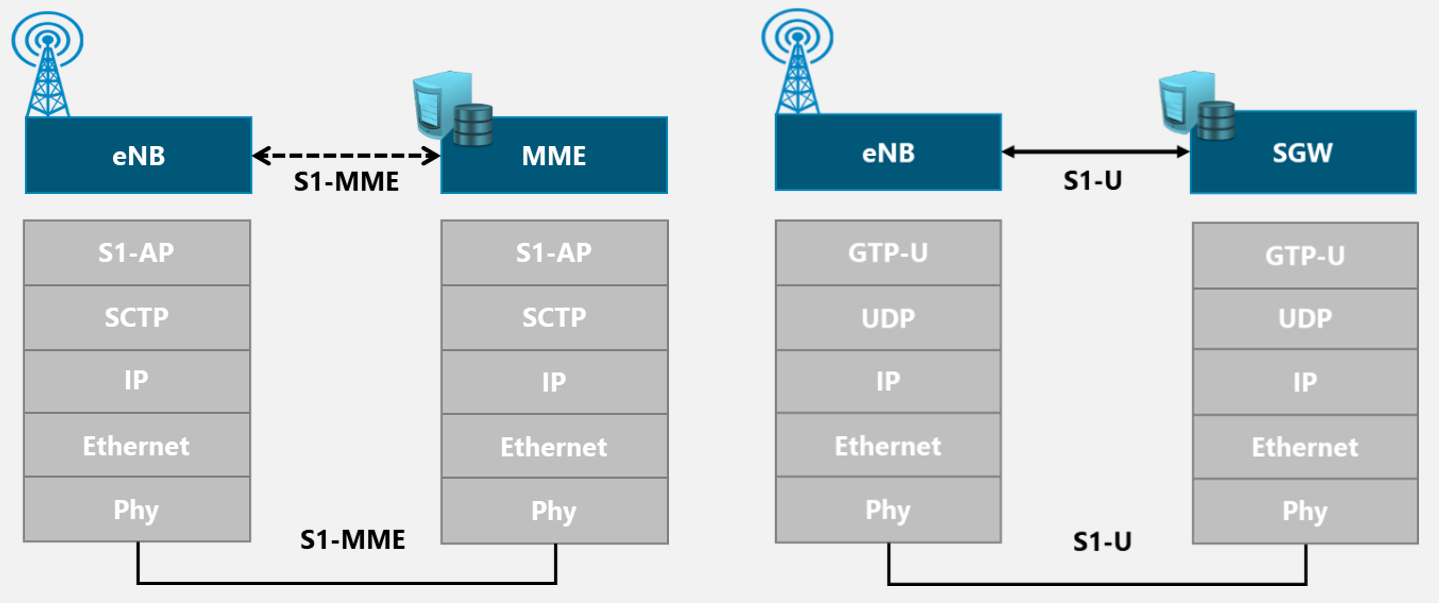

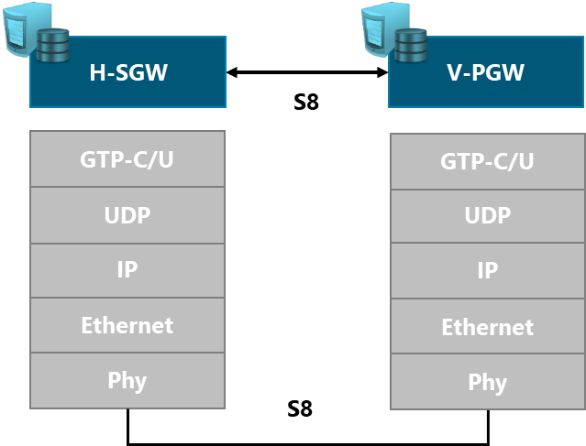

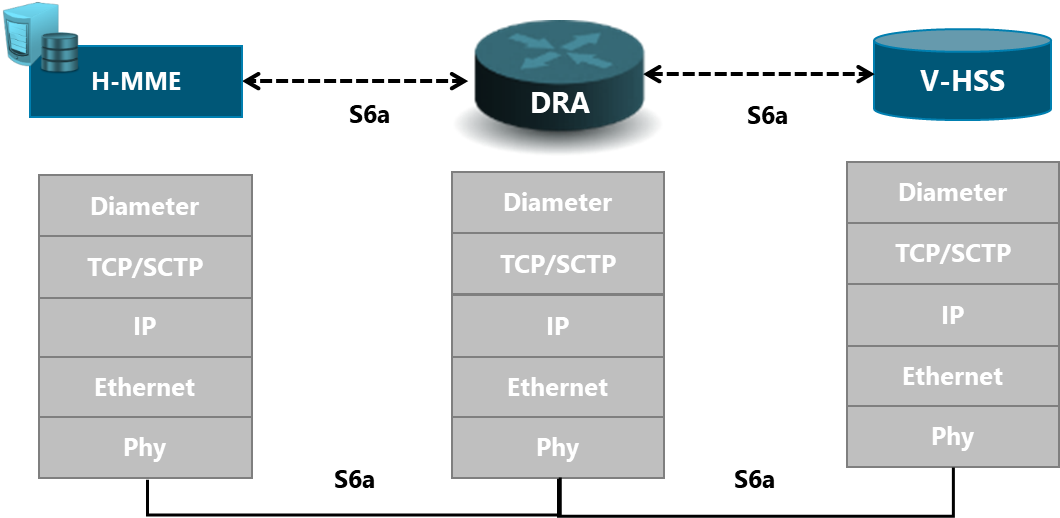

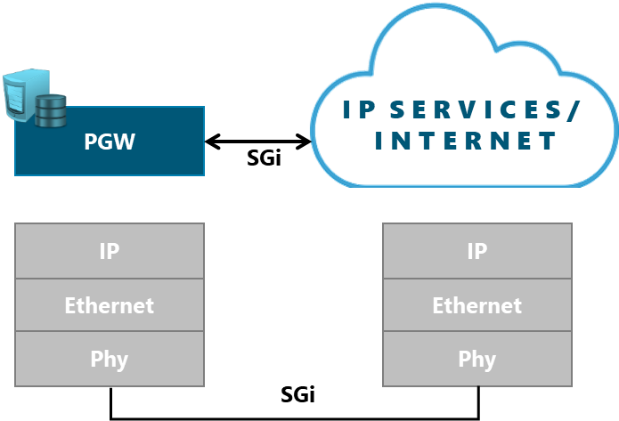

To support the scenario presented in Figure 4, NaaS operators must define the protocols and technologies to be used at each layer, as shown in Figure 5.

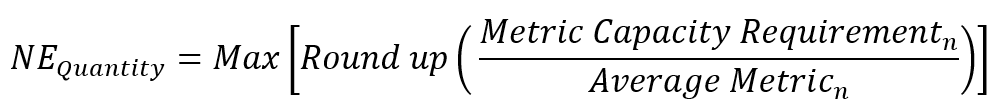

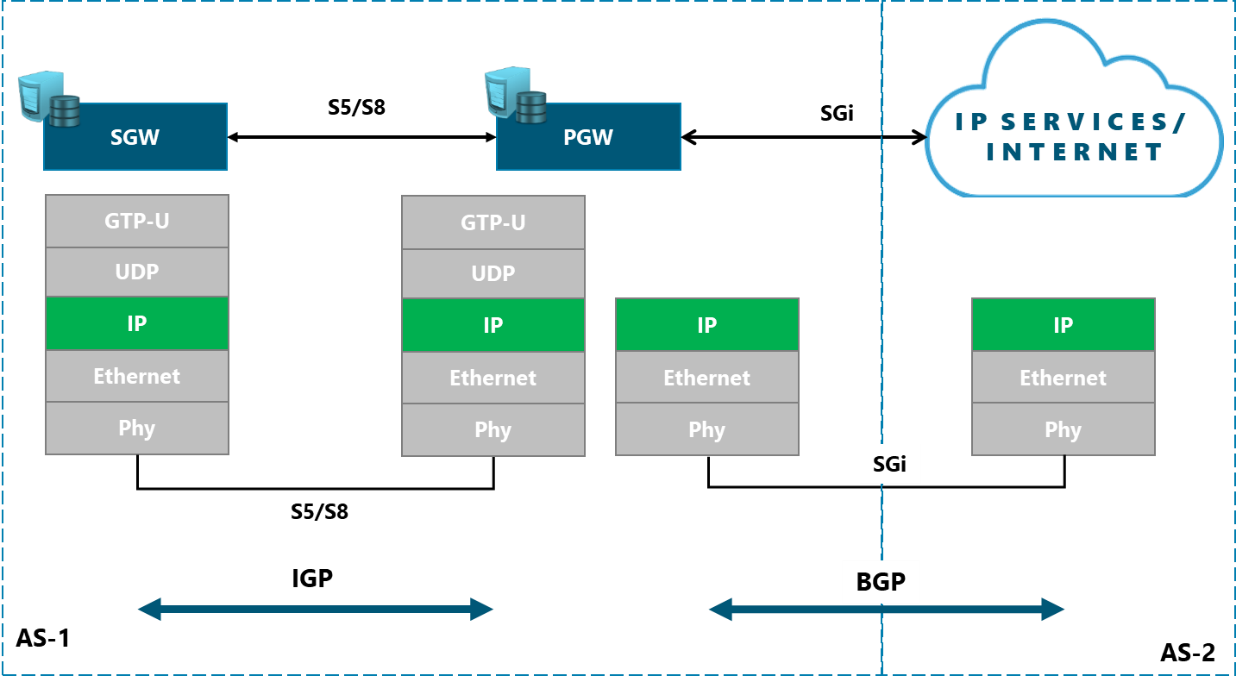

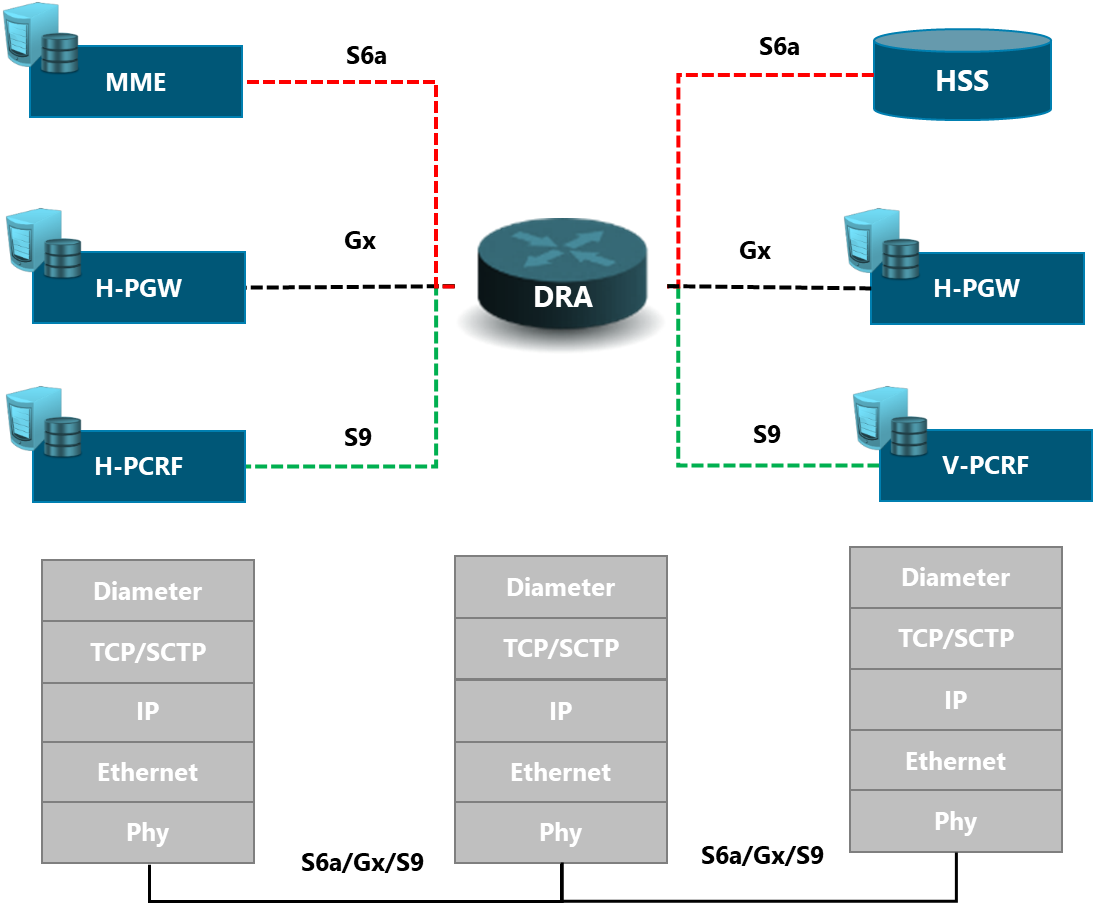

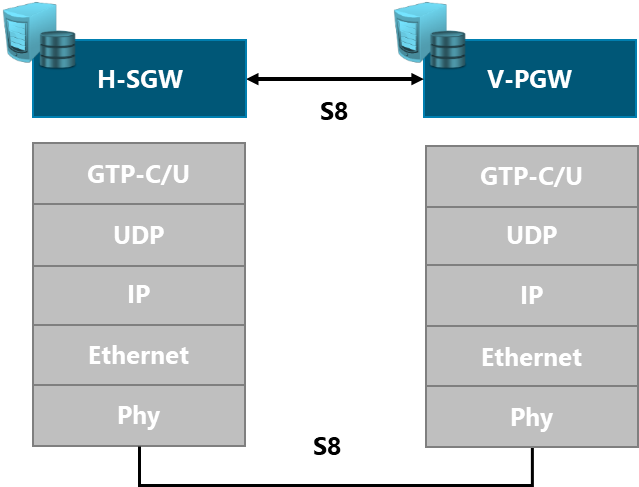

Figure 5. 3GPP Logical Interfaces Protocol Stack

The protocol stack to be implemented is an input from the Transport & IP Architecture Module. The scope of the LLD process is to define the main configuration parameters for each protocol in the stack.

2.5 IP Planning

To simplify IP address management across the overall network, support several technologies, and enable efficient troubleshooting, an IP Address Distribution Plan must be defined. An IP Address Distribution Plan is a document developed by the NaaS operator that shows how the universe of available IP addresses will be distributed to support the required services. This plan should satisfy the following conditions:

Section 3.3 details the methodology for generating the Detailed IP Distribution Plan and for the IP Address Allocation process using IPv4. A similar process can be followed for IPv6, considering that IPv6 addresses have 16 octets (128 bits) instead of 4 octets (32 bits). A broader view of the fundamentals of this process can be found in the Primer on IP Planning Principles.

3 Functions & Methodologies

Methodologies to perform critical tasks/subtasks involved in the Tx Network Low-level design process.

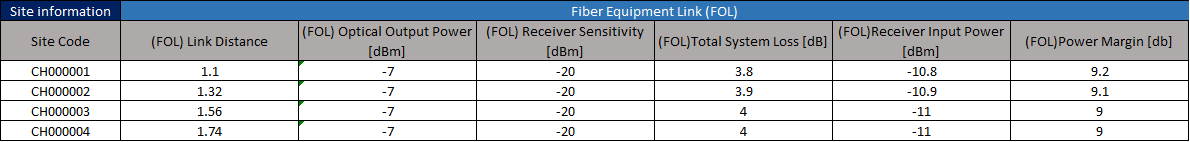

3.1 Fiber Optic Link Design

This section provides general methodologies to perform the Passive Fiber Optic Link Design. The inclusion of active elements in the fiber path (e.g. amplifiers) is out of the scope of this module.

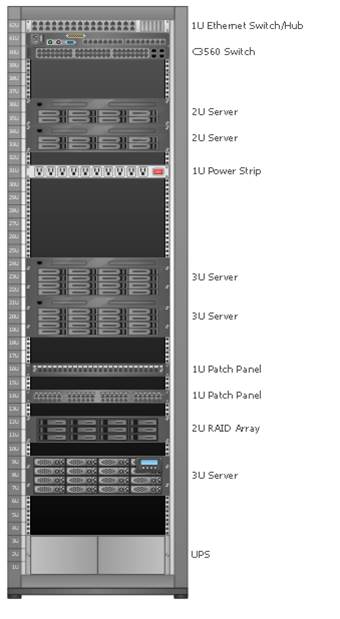

3.1.1 Fiber Optic Link Design Process

A Fiber Optic Link Design must be developed for each fiber path defined by the Tx High-Level Design to complete the Tx information. The principal analysis is the Fiber Optic Link Budget, which verifies the operating characteristics of a fiber optic link. This covers items such as routing, circuit length, fiber type, number of connectors and splices, and operating wavelengths.

Although the specific steps can vary depending on the selected Fiber Optic Link Design Tool, the general steps to perform the Fiber Optic Link Budget are presented in the following subsections.

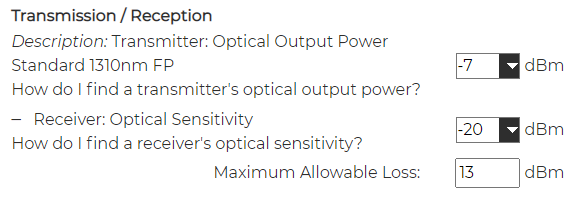

Step 1. Load Fiber Optic Equipment Characteristics

The information regarding the Fiber Optic Equipment that can be included depends on the selected tool characteristics. However, the following elements are the most relevant in the Fiber Optic Link Design:

Figure 6 shows an example of the configuration of the Fiber Optic Equipment Characteristics in the Fiber Optic Link Design Tool.

Figure 6. Example of Fiber Optic Equipment Characteristics in Fiber Optic Link Design Tool.

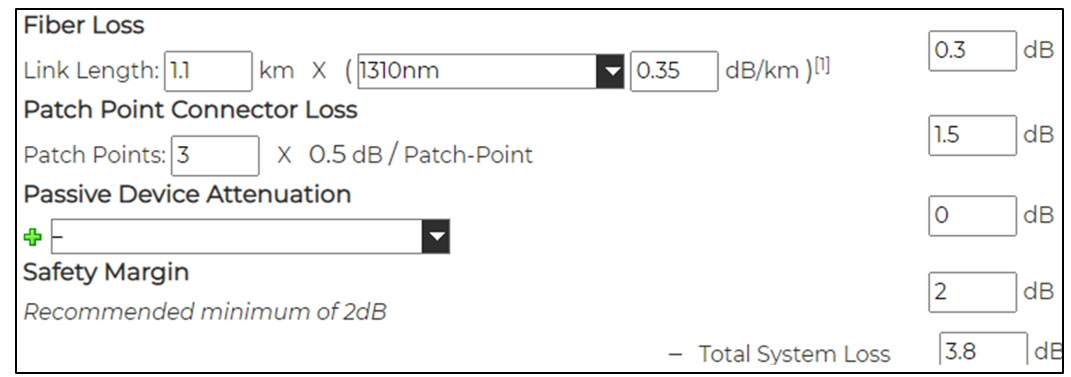

Step 2. Calculate the Losses in the Fiber Path

The information regarding multiple losses in the fiber path that can be included depends on the selected tool. However, the following elements are the most relevant in the Fiber Optic Link Design:

In the absence of specific values, conservative defaults such as those in Table 2 are advisable.

| Wavelength | Fiber loss (dB/km) | Connector loss (dB per connector) | Splice loss (dB per splice) |
|---|---|---|---|
| 1310 nm | 0.35 | 0.75 | 0.3 |
| 1550 nm | 0.22 | 0.75 | 0.3 |

Table 2. Default values for Link Budget Calculations

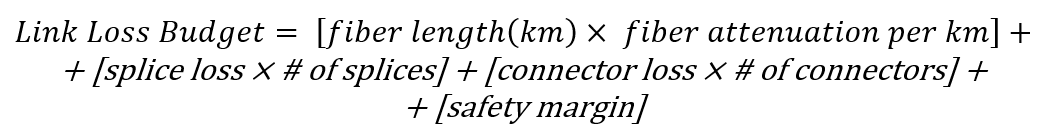

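The total loss in the optical link, including a typical safety margin of 3 dB, is thus computed as:

$$L_{total} = \alpha \cdot d + n_c \cdot L_c + n_s \cdot L_s + M_s \qquad \text{(Eq. 1)}$$

where $\alpha$ is the fiber loss per km, $d$ is the fiber path length in km, $n_c$ and $n_s$ are the numbers of connectors and splices, $L_c$ and $L_s$ are the loss per connector and per splice (Table 2), and $M_s$ is the 3 dB safety margin.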

Figure 7 shows an example of the configuration of the Fiber Losses in the Fiber Optic Link Design Tool.

Figure 7. Example of Fiber Losses in Fiber Optic Link Design Tool.

Step 3. Fiber Optic Link Budget Generation.

A link budget is a calculation that accounts for the transmitted power and all gains and losses along the Tx link to estimate the strength of the received signal. Given the link loss budget and the dynamic range (the difference between the transmitter power and the receiver sensitivity), it yields the power margin for that configuration:

$$M_p = (P_{tx} - S_{rx}) - L_{total} \qquad \text{(Eq. 2)}$$

where $P_{tx}$ is the transmitter power (dBm), $S_{rx}$ is the receiver sensitivity (dBm), and $L_{total}$ is the total link loss (Eq. 1).

Per design, the power margin should be greater than 3dB. The Fiber Optic Link budget is calculated by using the options provided by the selected tool.

Step 4. Fiber Optic Link Requirements Validation

In order to validate the Fiber Optic link design, the Link Budget must be fulfilled (i.e. it must confirm that the power margin is greater than 3dB).

If the link budget is not fulfilled, the link parameters can be modified (e.g., by changing the transmission power) as long as the link still complies with the design directives.
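Steps 2 to 4 can be sketched as a short calculation that applies Eq. 1 with the Table 2 defaults and then Eq. 2 with the 3 dB validation threshold. The link length, number of connectors and splices, and transceiver values are hypothetical.

```python
# Table 2 defaults: wavelength (nm) -> (fiber dB/km, connector dB, splice dB)
DEFAULT_LOSSES = {1310: (0.35, 0.75, 0.3), 1550: (0.22, 0.75, 0.3)}
SAFETY_MARGIN_DB = 3.0
REQUIRED_POWER_MARGIN_DB = 3.0


def total_loss_db(length_km, connectors, splices, wavelength_nm=1310):
    """Eq. 1: fiber + connector + splice losses plus the 3 dB safety margin."""
    fiber, connector, splice = DEFAULT_LOSSES[wavelength_nm]
    return length_km * fiber + connectors * connector + splices * splice + SAFETY_MARGIN_DB


def power_margin_db(tx_power_dbm, rx_sensitivity_dbm, loss_db):
    """Eq. 2: dynamic range (Tx power - Rx sensitivity) minus the total loss."""
    return (tx_power_dbm - rx_sensitivity_dbm) - loss_db


# Hypothetical 20 km link at 1310 nm with 2 connectors and 6 splices,
# using optics with -3 dBm launch power and -22 dBm receiver sensitivity
loss = total_loss_db(20, connectors=2, splices=6)
margin = power_margin_db(-3, -22, loss)
print(f"Total loss: {loss:.1f} dB, power margin: {margin:.1f} dB, "
      f"valid: {margin > REQUIRED_POWER_MARGIN_DB}")
```

Switching the same link to 1550 nm lowers the fiber loss from 7.0 dB to 4.4 dB. This is one of the parameter changes Step 4 allows when the budget is not met.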

3.1.2 Fiber Optic Link Design Report

After generating the Fiber Optic Link Design, a report that contains information of the designed link must be constructed including the following information:

3.2 MW Link Design

This section provides general methodologies to perform the Tx Link Design for each available MW Link identified in the High-Level Design based on the established requirements.

3.2.1 MW Link Design Process

Although the specific steps can vary depending on the selected Microwave Link Design Tool, the general steps to perform the Microwave Link Design are presented in the following subsections. Note that the illustrative images throughout the process are taken from the Pathloss tool.

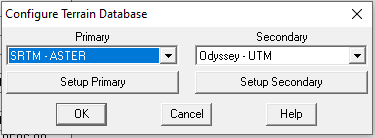

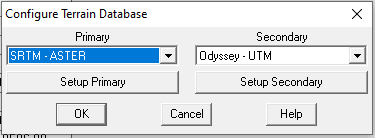

Step 1. Digital Terrain Databases Selection

In general, most propagation predictions are based on detailed topographical information. This information is provided by Digital Terrain Elevation (DTE) models, which are composed of digital terrain topographical databases and represented as digital terrain maps. These models provide the accurate information used to evaluate path clearance and the potential loss associated with diffraction.

Depending on the tool selected to perform the design, these DTE models may be included as part of the tool. If they are not included, DTE data is publicly available for many places in the world (e.g., through the Google Maps Elevation API).

Figure 8 shows an example of the configuration of the Digital Terrain Database in the MW link Design Tool.

Figure 8. Example of Digital Terrain Database Configuration on MW Link Design Tool.

Step 2. Load Site Information

The site information must be introduced to the tool, including:

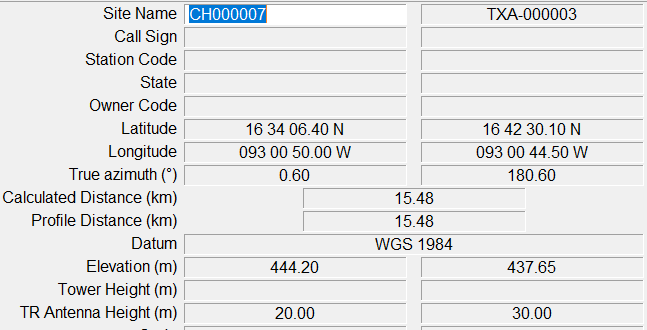

Figure 9 shows an example of the configuration of the Site Information in the MW link Design Tool.

Figure 9. Example of Site Information Configuration on MW Link Design Tool.

Step 3. Load MW Equipment Specifications

The information regarding the MW equipment that can be included depends on the selected tool features. However, the following elements are the most relevant in the MW Link Design:

Furthermore, depending on the tool, this information may be entered via a user interface or equipment files (e.g. MRS files in Pathloss). Figure 10 shows an example of the configuration of the MW Equipment in the MW link Design Tool.

Figure 10. Example of MW Equipment Configuration on MW Link Design Tool.

Step 4. Additional MW Link Model Characteristics Setting

Depending on the selected tool features, additional Microwave Link Model Characteristics can be configured, such as:

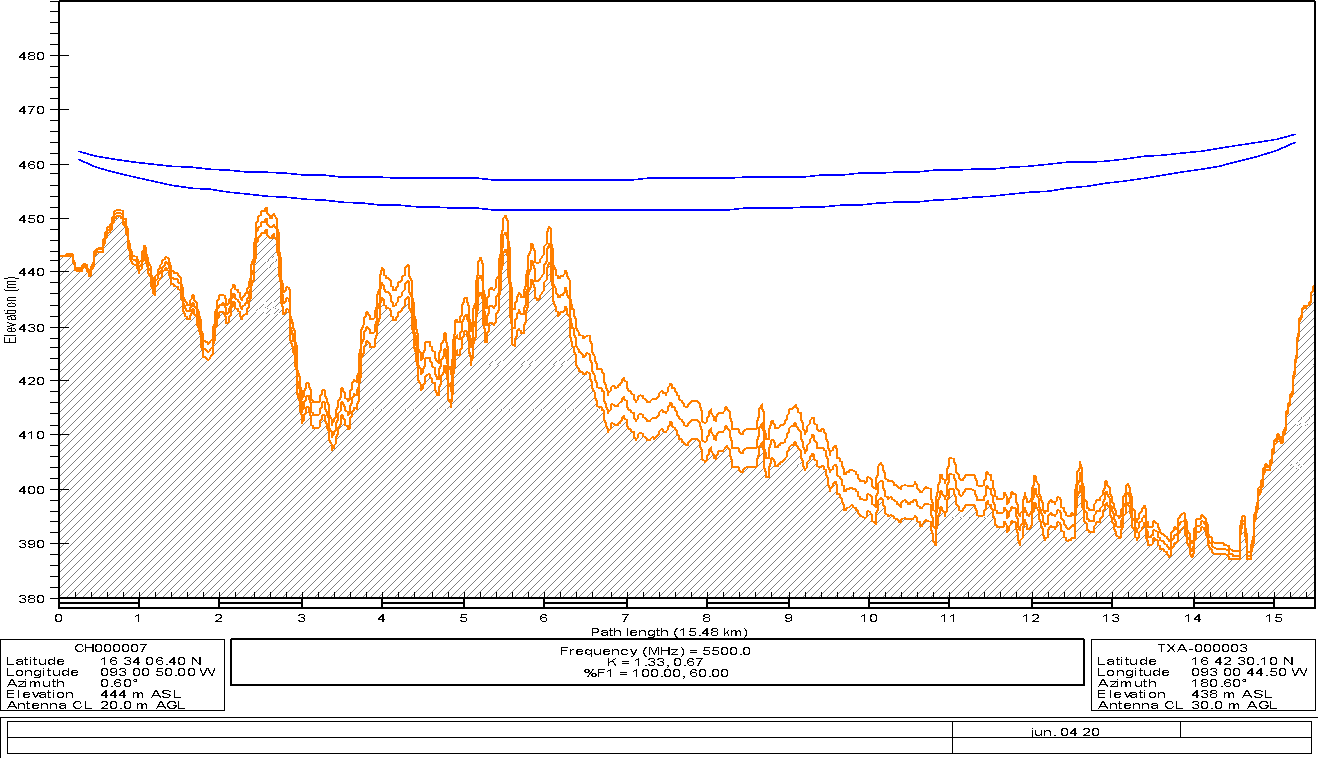

Step 5. MW Link Budget and Profile Generation

Generate the Microwave Link budget and profile using the options provided by the selected tool. Figure 11 displays an example of a MW Link Profile.

Figure 11. MW Profile Example

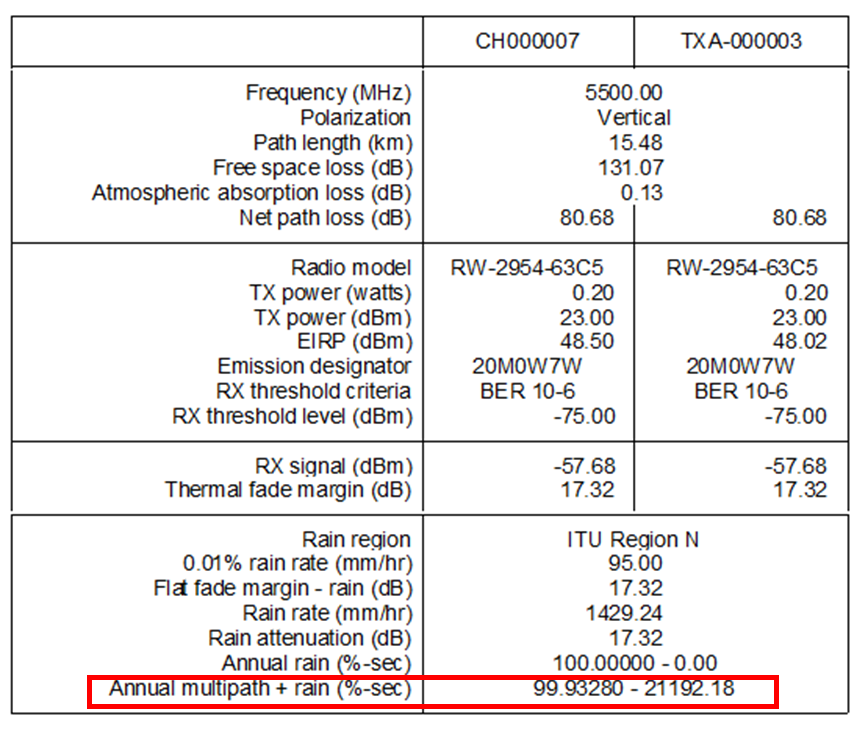
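The core of the link budget produced by the tool can be approximated with the standard free-space path loss formula. This is a simplified sketch: atmospheric, rain, and diffraction losses are folded into a single hypothetical `misc_losses_db` term, and the transmit power, antenna gains, and receive threshold in the example are placeholder values rather than figures from any specific equipment.

```python
import math


def fspl_db(distance_km: float, freq_ghz: float) -> float:
    """Free-space path loss in dB (distance in km, frequency in GHz)."""
    return 92.45 + 20 * math.log10(freq_ghz) + 20 * math.log10(distance_km)


def received_power_dbm(tx_power_dbm, tx_gain_dbi, rx_gain_dbi,
                       distance_km, freq_ghz, misc_losses_db=0.0):
    """Received signal level: Tx power + antenna gains - path loss - other losses."""
    return (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
            - fspl_db(distance_km, freq_ghz) - misc_losses_db)


def fade_margin_db(rx_power_dbm, rx_threshold_dbm):
    """Margin between the received level and the receiver threshold."""
    return rx_power_dbm - rx_threshold_dbm


# Placeholder 10 km hop at 18 GHz.
prx = received_power_dbm(20, 38, 38, 10, 18, misc_losses_db=3)
print(round(fspl_db(10, 18), 2))          # 137.56
print(round(prx, 2))                      # -44.56
print(round(fade_margin_db(prx, -70), 2)) # 25.44
```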

Step 6. MW Link Requirements Validation

All MW links must comply with the general requirements established in the Tx & IP Architecture module, as well as with the specific MW link parameters (e.g., availability, throughput). If any requirement is not fulfilled, the link parameters can be changed as long as the link still complies with the design directives.

For instance, if the received signal level does not exceed the receiver sensitivity, a lower-order modulation scheme can be selected. This change inherently improves the receiver sensitivity (i.e., lowers the receive threshold), allowing weaker signals to be received. However, a lower-order modulation scheme also reduces the link throughput.

Furthermore, if the link is not feasible due to a lack of Line of Sight (e.g., an insurmountable obstacle blocks the path), the methodology presented in Section 3.2 of the Tx HLD Module must be applied to redesign the MW link.
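The modulation fallback described in Step 6 (stepping down until the received level clears the receiver threshold with enough margin) can be sketched as a search over the radio's modulation profile. The profile below, including its thresholds, capacities, and the fade margins used in the example, is entirely hypothetical; real values come from the vendor datasheet or equipment file.

```python
# Hypothetical radio profile: (modulation, rx_threshold_dbm, capacity_mbps).
# Lower-order schemes have lower (better) thresholds but less capacity.
RADIO_PROFILE = [
    ("1024QAM", -53.0, 1000),
    ("256QAM", -60.0, 800),
    ("64QAM", -66.0, 600),
    ("16QAM", -72.0, 400),
    ("QPSK", -78.0, 200),
]


def select_modulation(rx_power_dbm, min_fade_margin_db, profile=RADIO_PROFILE):
    """Return the highest-capacity scheme that still meets the fade margin."""
    feasible = [m for m in profile
                if rx_power_dbm - m[1] >= min_fade_margin_db]
    if not feasible:
        return None  # No scheme meets the margin: other link parameters must change.
    return max(feasible, key=lambda m: m[2])


print(select_modulation(-44.5, 20))  # ('64QAM', -66.0, 600)
print(select_modulation(-44.5, 30))  # ('QPSK', -78.0, 200)
print(select_modulation(-44.5, 40))  # None
```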

3.2.2 Microwave Link Design Report

After generating the MW Link Design, a report containing the following information about the designed link must be produced:

Figure 12 displays an example of the Microwave Link Design Report generated by the Pathloss tool.

Figure 12. MW Link Report Example

3.3 IP Address Subnetting Process

IP Subnetting is the process used to partition a network address space into smaller segments (e.g., dividing one /8 segment into sixteen /12 segments). This process is important for the NaaS operator because it allows network traffic to be logically separated by use (e.g., separating the traffic of the eNodeBs from that of the Core elements). Furthermore, IP subnetting allows a different mask to be used for each subnet, so that the address space is used efficiently. Finally, subnetted networks are much easier to manage and troubleshoot.
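The /8-into-/12 example can be reproduced with Python's standard `ipaddress` module. The parent block 10.0.0.0/8 is an illustrative choice; the snippet only demonstrates the arithmetic and is not a required tool.

```python
import ipaddress

parent = ipaddress.ip_network("10.0.0.0/8")
# Borrowing 4 bits (/8 -> /12) yields 2**4 = 16 equally sized subnets.
children = list(parent.subnets(new_prefix=12))

print(len(children))              # 16
print(children[0], children[1])   # 10.0.0.0/12 10.16.0.0/12
print(children[-1])               # 10.240.0.0/12
print(children[0].num_addresses)  # 1048576
```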

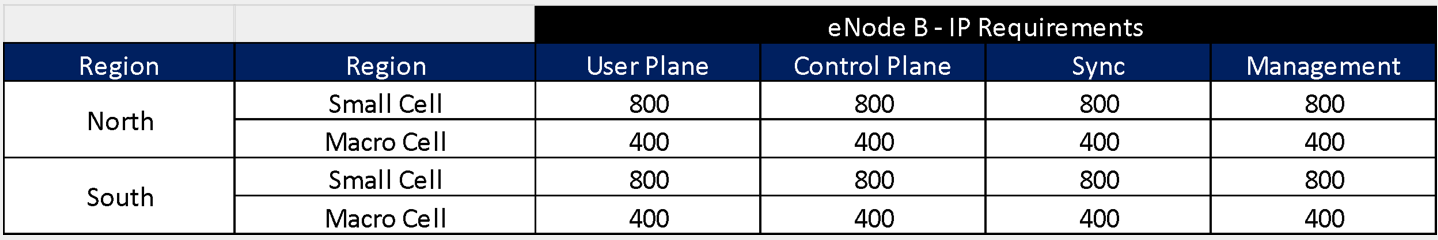

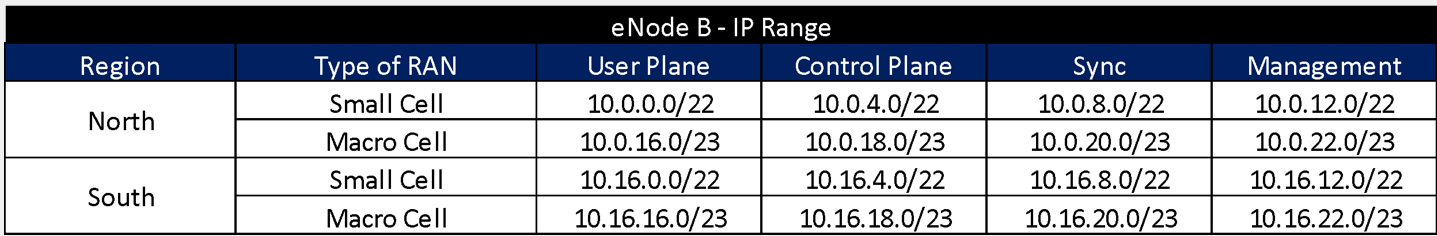

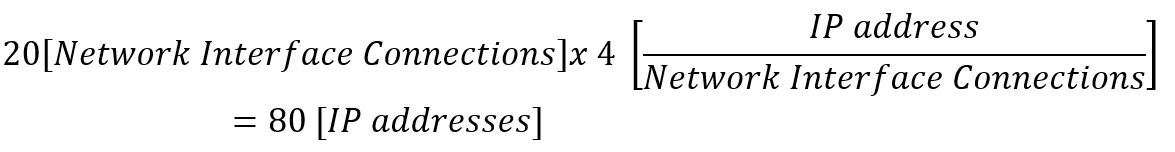

To illustrate the process, a practical Example is followed through each of the process steps. In this example, the segment 10.0.0.0/16 is divided into network subnets that can accommodate the numbers of IP addresses listed in Table 3.

| Subnet | Number of Required IP Addresses |
|---|---|
| Subnet 1 | 20,000 |
| Subnet 2 | 10,000 |
| Subnet 3 | 15,000 |

Table 3. IP Address Requirements in Subnetting Example

Step 1. Calculate the required block sizes

To calculate the mask length (and hence the block size) required to support the number of required IP addresses, the following formula is used:

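$$
M = 32 - \left\lceil \log_2 (N + 2) \right\rceil, \qquad B = 2^{\,32 - M}
$$

(Eq. 3)

where N is the number of required IP addresses, M is the resulting mask length, and B is the block size; the two extra addresses account for the network and broadcast addresses of each subnet.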

Applying Eq. 3 to the example yields the results presented in Table 4. In practice, the assigned block size must be greater than the actual requirement. A web-based IP address calculator can be used to simplify the planning of network subnets.

| Subnet | Required Mask Length | Mask Notation | Block Size |
|---|---|---|---|
| Subnet 1 | /17 | 255.255.128.0 | 32,768 |
| Subnet 2 | /18 | 255.255.192.0 | 16,384 |
| Subnet 3 | /18 | 255.255.192.0 | 16,384 |

Table 4. Block size Requirements in Subnetting Example
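The block-size calculation behind Table 4 can be checked with a short script. This sketch assumes that each subnet reserves two addresses (network and broadcast), the usual IPv4 convention; the function name is hypothetical. It uses integer bit arithmetic instead of a floating-point logarithm to avoid rounding issues at exact powers of two.

```python
import ipaddress


def required_prefix(num_addresses: int, reserved: int = 2) -> int:
    """Smallest IPv4 prefix whose block holds num_addresses + reserved."""
    needed = num_addresses + reserved
    # (needed - 1).bit_length() == ceil(log2(needed)) for needed >= 1.
    host_bits = (needed - 1).bit_length()
    return 32 - host_bits


requirements = {"Subnet 1": 20_000, "Subnet 2": 10_000, "Subnet 3": 15_000}
for name, count in requirements.items():
    prefix = required_prefix(count)
    mask = ipaddress.ip_network(f"0.0.0.0/{prefix}").netmask
    block_size = 2 ** (32 - prefix)
    print(f"{name}: /{prefix}  {mask}  {block_size:,}")
# Subnet 1: /17  255.255.128.0  32,768
# Subnet 2: /18  255.255.192.0  16,384
# Subnet 3: /18  255.255.192.0  16,384
```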

Step 2. Subnet Sorting

The next step is to sort the resulting network segments by block size in descending order. Allocating the largest blocks first ensures that every block starts on a boundary aligned to its size, so no gaps are left between subnets and the IP address space is used efficiently. In some cases, the sum of the calculated block sizes for the required subnets does not use the entire available IP segment. The remaining IP addresses can be reserved for future expansion.

The subnetting definition must account for future network elements. Changing existing subnet definitions later is difficult and can require modifying the IP Distribution Plan. It is therefore recommended to be conservative when calculating the required block sizes, to reduce the likelihood of having to rework the IP subnetting.

Step 3. Subnets Assignment

Finally, assign the first subnet in the sorted list to the first available block of the original segment, and continue in order with the remaining subnets, each starting where the previous one ends. The result for the example is displayed in Table 5.

| Subnet | Assigned Network / Mask Length |
|---|---|
| Subnet 1 | 10.0.0.0/17 |
| Subnet 2 | 10.0.128.0/18 |
| Subnet 3 | 10.0.192.0/18 |

Table 5. IP Subnetting Result in Subnetting Example
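Steps 2 and 3 (sort by block size, then assign contiguously) amount to a simple variable-length subnet mask (VLSM) allocation. The sketch below uses a hypothetical `allocate_subnets` helper built on Python's `ipaddress` module and reproduces Table 5 from the prefixes in Table 4; it illustrates the procedure and is not a replacement for an IPAM tool.

```python
import ipaddress


def allocate_subnets(parent_cidr: str, prefixes: dict) -> dict:
    """Assign each named subnet (name -> prefix length) inside parent_cidr."""
    parent = ipaddress.ip_network(parent_cidr)
    # Step 2: shortest prefix (largest block) first; sorted() is stable,
    # so equal-sized subnets keep their original order.
    ordered = sorted(prefixes.items(), key=lambda item: item[1])
    cursor = int(parent.network_address)
    assigned = {}
    for name, prefix in ordered:
        size = 2 ** (32 - prefix)
        # Round up to the block boundary (a no-op when sorted largest first).
        cursor = (cursor + size - 1) // size * size
        subnet = ipaddress.ip_network((cursor, prefix))
        if not subnet.subnet_of(parent):
            raise ValueError(f"{name} (/{prefix}) does not fit in {parent}")
        # Step 3: take the first free block, then move past it.
        assigned[name] = subnet
        cursor += size
    return assigned


plan = allocate_subnets("10.0.0.0/16",
                        {"Subnet 1": 17, "Subnet 2": 18, "Subnet 3": 18})
for name, subnet in plan.items():
    print(name, subnet)
# Subnet 1 10.0.0.0/17
# Subnet 2 10.0.128.0/18
# Subnet 3 10.0.192.0/18
```

Because the three blocks exactly fill the /16, nothing is left over in this example; with smaller requirements, the addresses after the last assigned block would be the reserve for future expansion mentioned in Step 2.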

Additionally, NaaS operators can use the High Level IP Distribution Plan Widget to support the generation of the IP Distribution Plan.

3.4 IP Address Allocation

IP Address Management (IPAM) is the collection of procedures for organizing, tracking, and adjusting the information related to the IP address space. It helps network designers and operators keep IP address records up to date. Furthermore, since a network may contain thousands of IP addresses, this process also helps the operations team when debugging and troubleshooting issues.

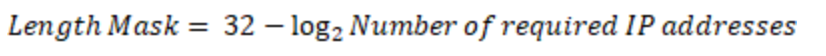

Although the specific steps can vary depending on the selected IP Address Management Tool, the general steps to perform the IP Address Allocation are presented in the following subsections. Note that the illustrative images throughout the process are taken from the free phpIPAM tool.

Step 1. Define Network Segments

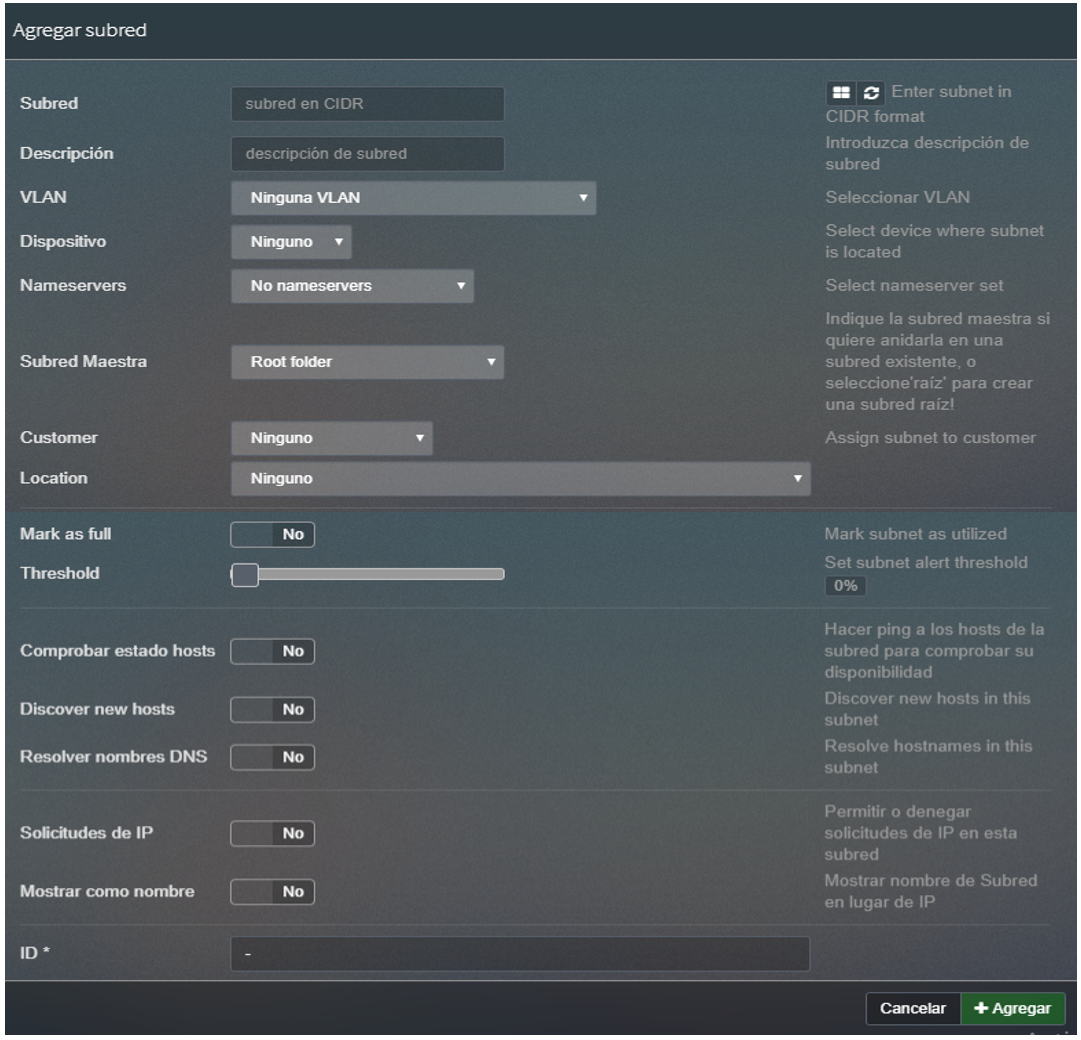

Enter the main network segments included in the General IP Distribution Plan in descending order of block size. Then enter all the network segments included in the Detailed IP Distribution Plan.

Step 2. Allocate IP Network Addresses

To allocate a specific IP address (e.g. for eNodeB services or transport equipment), identify the segment that corresponds to the scenario in the Detailed IP Distribution Plan.

Once the segment is identified, select the first available IP address within it and enter the information describing its purpose. The following basic information must be registered for every IP address within the IPAM tool:

Figure 13 displays an example of the IP Allocation in the phpIPAM tool.

Figure 13. Subnet Allocation Example
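The "first available address" rule in Step 2 can be sketched as follows. IPAM tools such as phpIPAM normally handle this themselves; the helper name and the example segment are hypothetical. The network and broadcast addresses are skipped because `hosts()` excludes them.

```python
import ipaddress


def first_available_ip(segment_cidr, allocated):
    """Return the lowest usable host address in the segment not yet allocated."""
    segment = ipaddress.ip_network(segment_cidr)
    used = {ipaddress.ip_address(a) for a in allocated}
    # hosts() skips the network and broadcast addresses of the segment.
    for host in segment.hosts():
        if host not in used:
            return host
    return None  # Segment exhausted.


# Hypothetical transport-equipment segment with two addresses already registered.
print(first_available_ip("10.0.128.0/29", ["10.0.128.1", "10.0.128.2"]))  # 10.0.128.3
full = [f"10.0.128.{i}" for i in range(1, 7)]
print(first_available_ip("10.0.128.0/29", full))  # None
```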

4 E2E Process Flow

This section presents a generic yet customizable end-to-end process flow to perform the transport network design.

4.1 End-to-end Process Overview

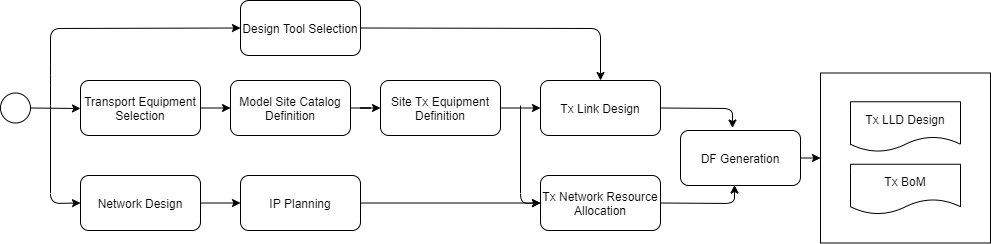

The generic end-to-end (E2E) process flow displayed in Figure 14 orchestrates general tasks to perform the Transport Network LLD in a logical and well-structured sequence.

Figure 14. Generic E2E Process Flow.

Details and customization options of each step are reviewed in the following sections. In addition, a Tx LLD Process Flow Designer is provided as part of the Methods of Engagement.

4.2 Step-by-step Analysis

This section examines each step of the Low-level Tx Design process to identify, isolate, and describe the range of implementation options available for customization according to NaaS operator requirements and constraints.

To demonstrate the process flow in practice, a Design Example is followed through each of the process steps.

Design Example

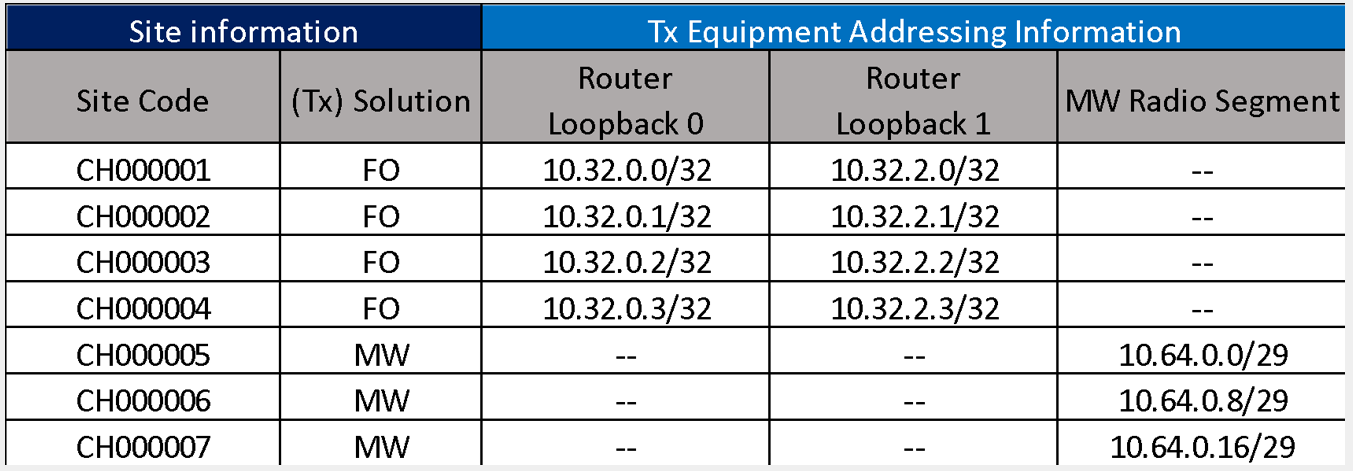

In this example, the process to generate the LLD for the scenario in Figure 15 is presented. Note that this is a continuation of the Example presented in the Tx HLD Module.

Figure 15. Design Example Scenario.

The scenario is composed of eight RAN sites that will be analyzed to perform the Transport LLD Design. The example considers two geographical regions (North and South), where all the RAN sites presented are located in the North Region.

The distribution of transport technologies is as follows: four sites use fiber optic, three sites use microwave, and one site uses a satellite link. Details on the selection of transport technologies can be found in the Tx HLD Module.

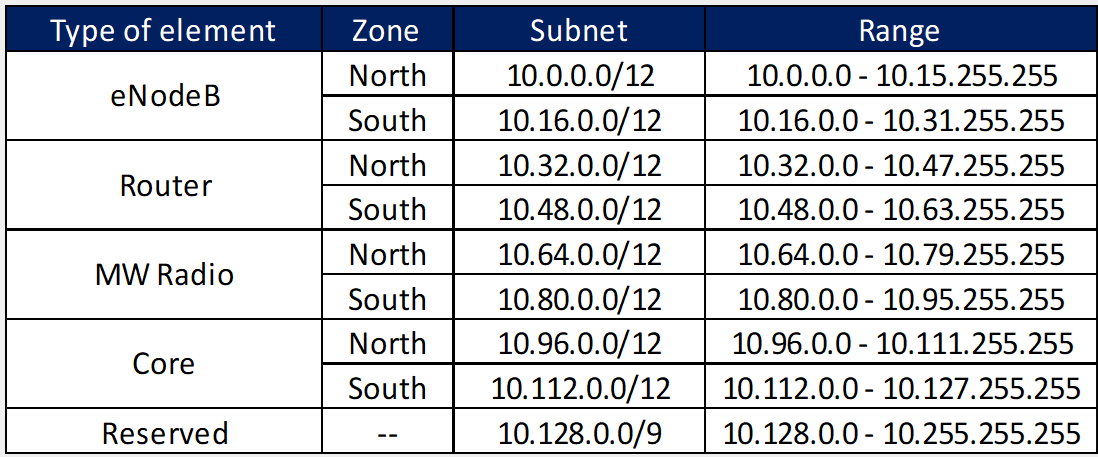

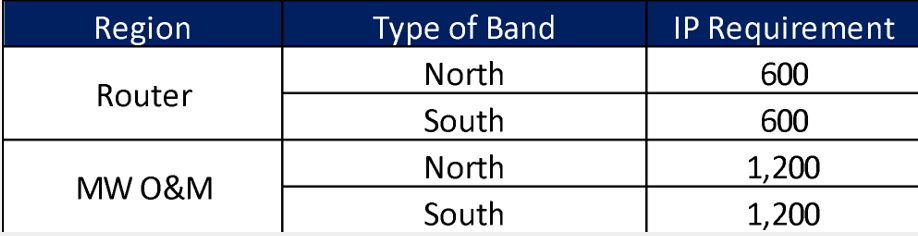

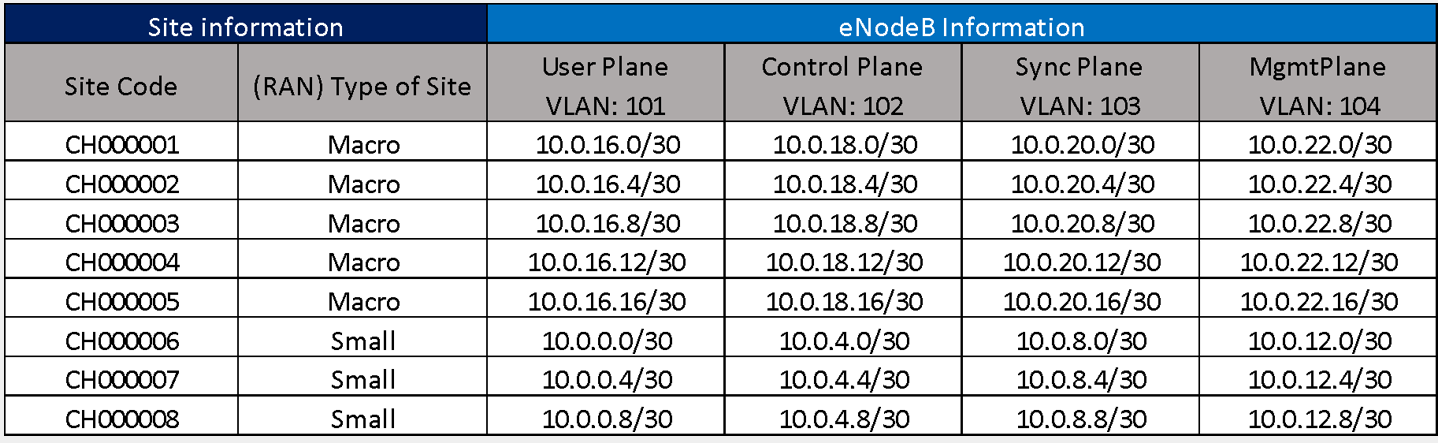

The General IP Distribution Plan is presented in Table 6; it describes the general segments defined for each of the different network elements. Details on the generation of the General IP Distribution Plan can be found in the Tx & IP Architecture Module.

Table 6. General IP Distribution Plan for the Example Scenario.

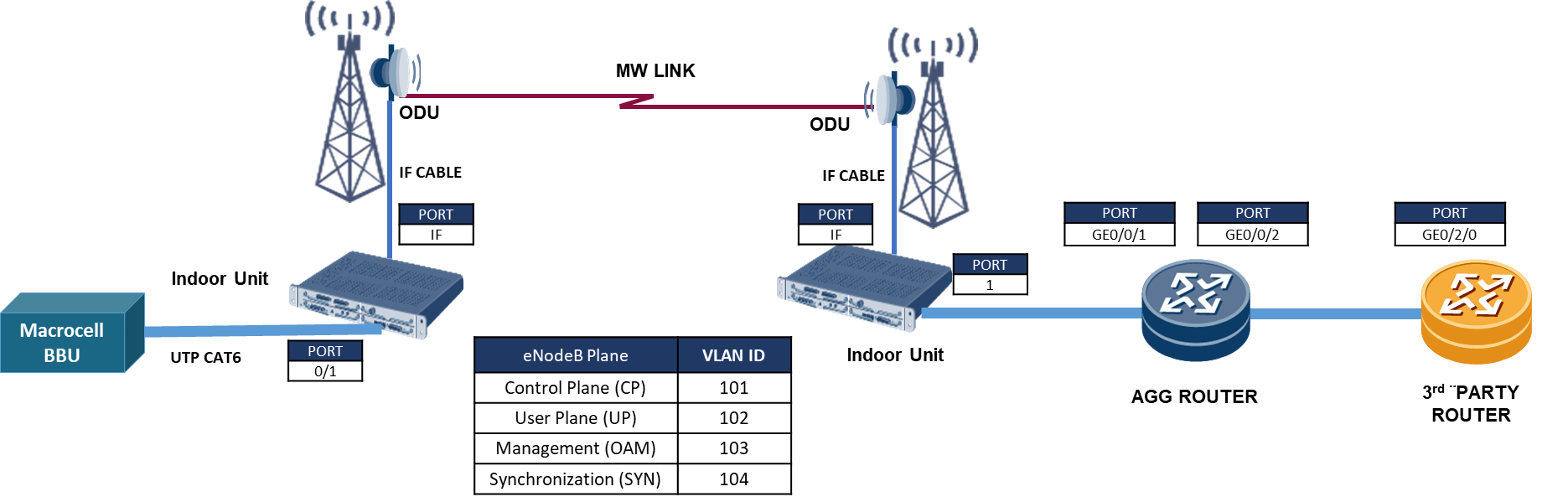

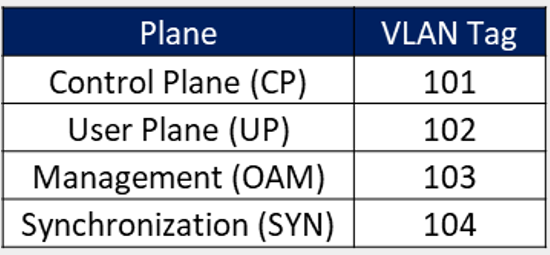

The VLAN definition used to separate the traffic of the different planes is presented in Table 7. Details on the VLAN definition can be found in the Tx & IP Architecture Module.

Table 7. VLAN Definition in Design Example.

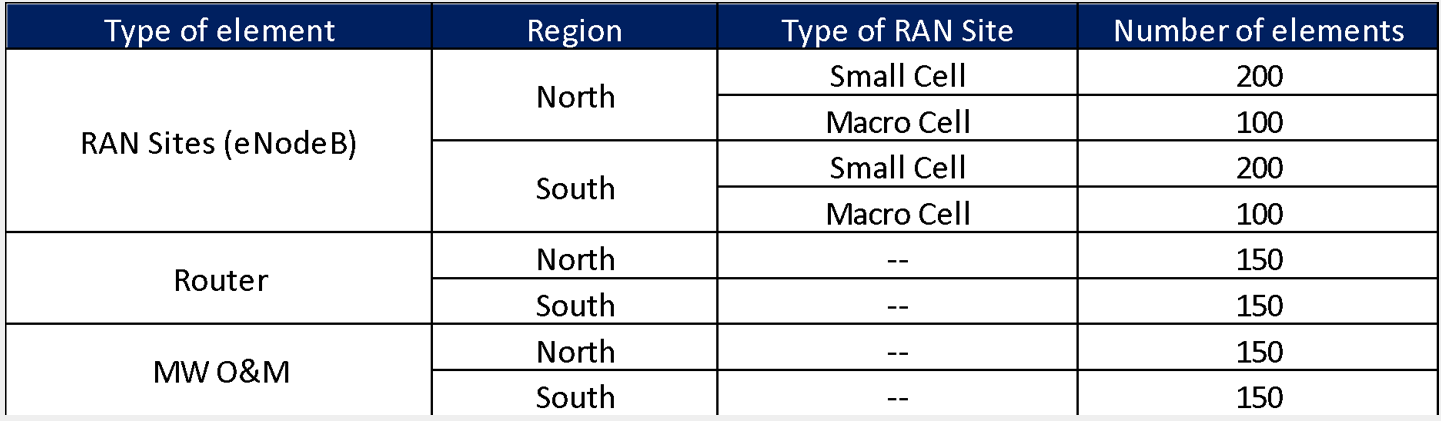

While this Design Example focuses only on the eight RAN sites presented in Figure 15, the total number of RAN sites and Tx links expected to be deployed is presented in Table 8. These numbers already account for future network growth.

Table 8. Total number of elements for the Example Scenario

Even if the number of IP addresses required for these elements does not fill the corresponding IP segment, the subnetting methodology presented in Section 3.3 must still be applied to improve network management and support future expansion.

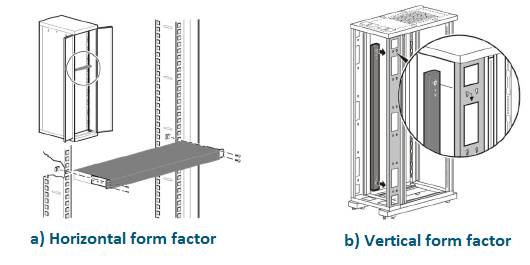

4.2.1 Transport Equipment Selection

NaaS operators must select the Tx equipment from multiple vendor alternatives. The complete Tx equipment selection process is included in the Procurement Module (RFx Process Module). This section focuses on the technical aspects of evaluating transport equipment from different vendors.

From a technical perspective, Table 9 lists the typical specifications that the transport equipment must satisfy; the required values must be defined according to the target network segment (e.g., last-mile or aggregation link implementation scenario).

| Specification | Description |
|---|---|
| Supported Protocols | Support for the protocols defined in the Tx & IP Architecture Module |
| Availability | Reliability and uptime |
| | Time-to-repair |
| | Time-to-deploy |
| Performance | Throughput performance under normal and stress conditions |
| | Maximum number of active connections |
| Dimensions | Compliance with standard dimensions |
| Power Requirements | Type of energy required (AC/DC) and power consumption profile |
| Security | Implemented security mechanisms |
| Cost | Cost of the equipment |

Table 9. Typical specifications to be evaluated in transport equipment.


The decision to select the Tx Equipment is also affected by the financial constraints of the project. The final selection of the Tx Equipment is performed during the RFx process and in conjunction with the Procurement Team.

Design Example

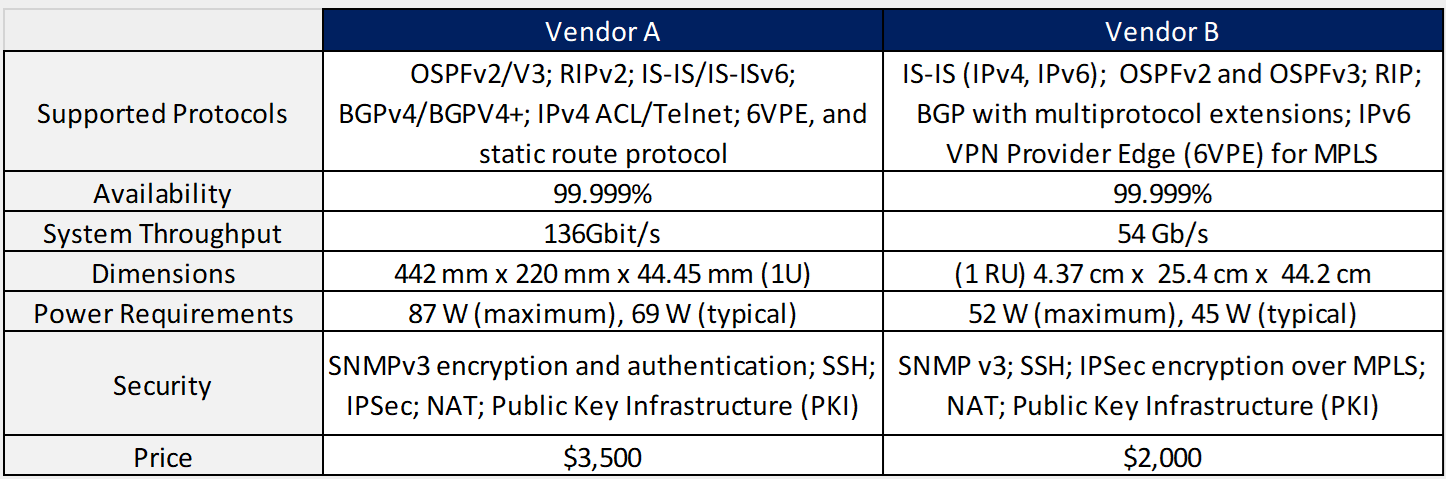

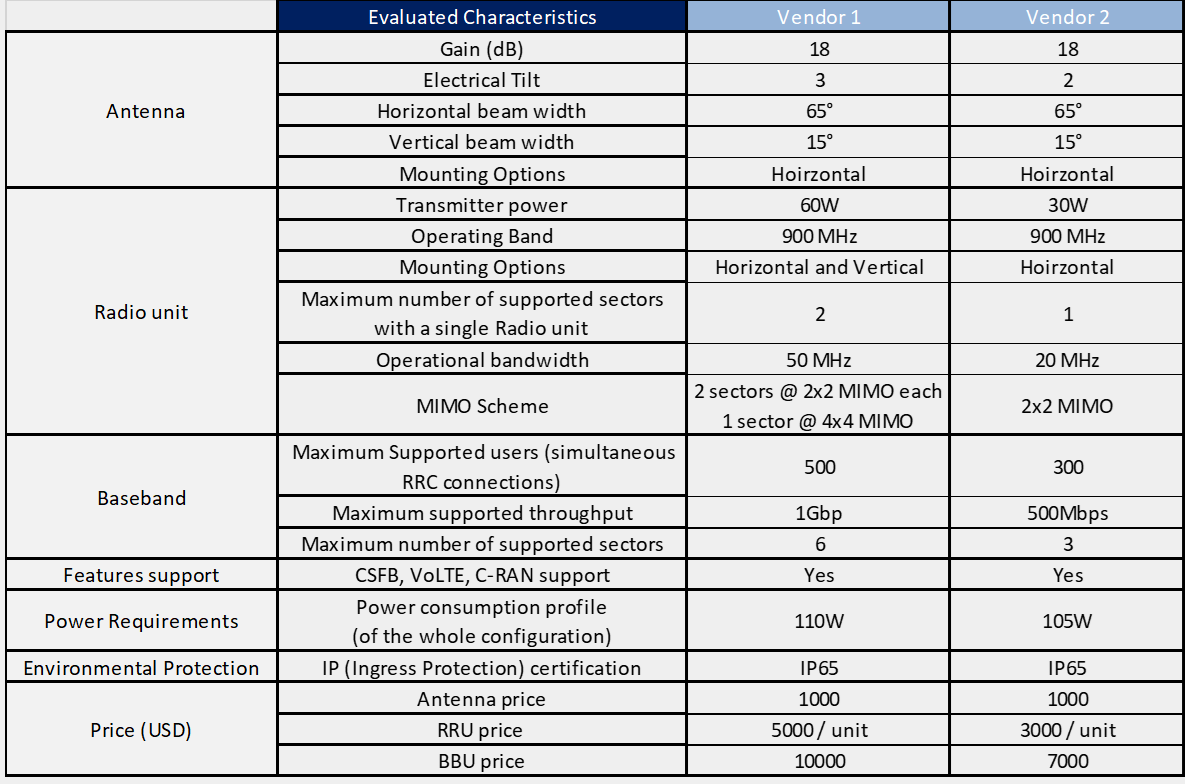

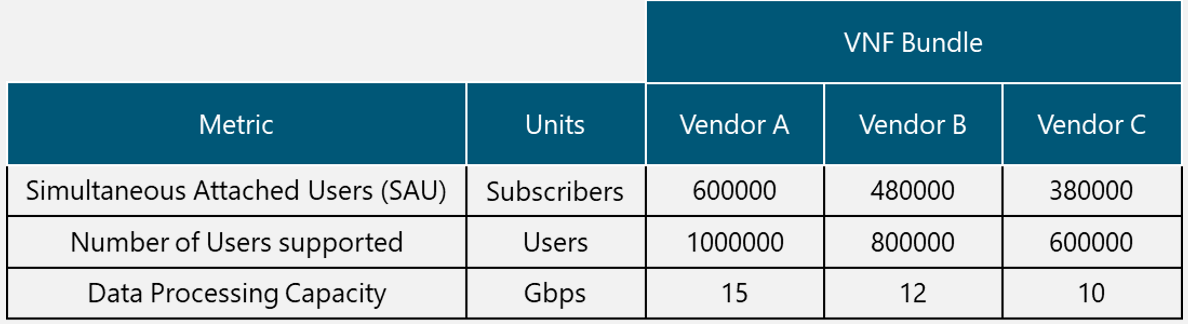

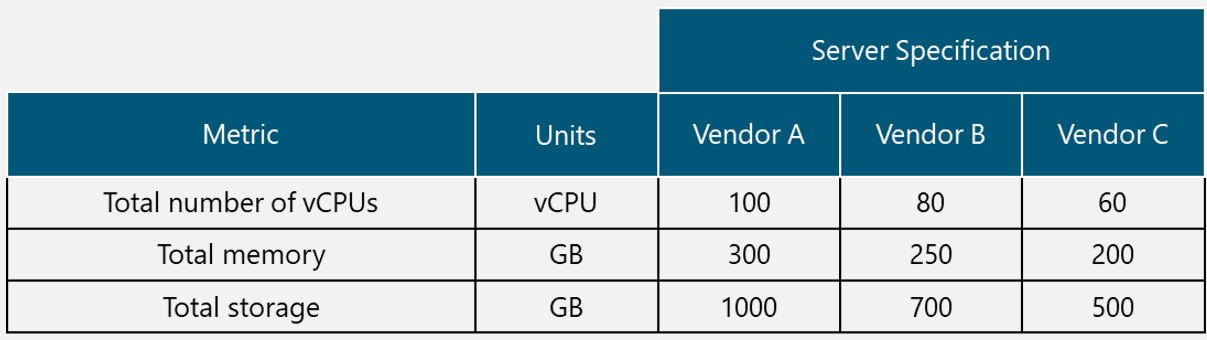

In the Design Example, two Tx equipment options from different vendors (Vendor A and Vendor B) are evaluated for selection. Their characteristics are shown in Table 10.

Table 10. Tx Equipment Specification for Design Example.

Table 10 shows that both options comply with the required protocol stack and security features; they also have similar availability figures, and both require a standard rack unit for installation. Vendor A offers higher system throughput, but it also has higher power requirements and a considerably higher price.

Therefore, Vendor B is selected in the Design Example: it complies with the required protocol stack, security features, and system throughput (fully meeting the architecture requirement). Furthermore, its power requirements are low (a critical aspect in rural environments), and its price is the more affordable of the two.
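The reasoning above (discard options that miss a mandatory requirement, then prefer the cheaper, lower-power option among those that remain) can be captured in a small screening helper. This is a sketch only: the `Offer` fields, the tie-break order, and every number in the example are placeholders that do not reproduce the values of Table 10, and the final decision still goes through the RFx process.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Offer:
    vendor: str
    protocols_ok: bool      # Supports the Tx & IP Architecture protocol stack
    security_ok: bool       # Implements the required security mechanisms
    throughput_gbps: float
    power_w: float
    price: float


def select_offer(offers, required_throughput_gbps) -> Optional[Offer]:
    """Keep fully compliant offers, then pick the lowest price, then lowest power."""
    compliant = [o for o in offers
                 if o.protocols_ok and o.security_ok
                 and o.throughput_gbps >= required_throughput_gbps]
    if not compliant:
        return None
    return min(compliant, key=lambda o: (o.price, o.power_w))


# Placeholder figures for illustration only.
offers = [
    Offer("Vendor A", True, True, throughput_gbps=10.0, power_w=120.0, price=15_000),
    Offer("Vendor B", True, True, throughput_gbps=4.0, power_w=45.0, price=6_000),
]
print(select_offer(offers, required_throughput_gbps=2.0).vendor)  # Vendor B
print(select_offer(offers, required_throughput_gbps=8.0).vendor)  # Vendor A
```

Raising the throughput requirement above what the cheaper option offers flips the choice, which mirrors why the architecture throughput requirement is checked before price.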


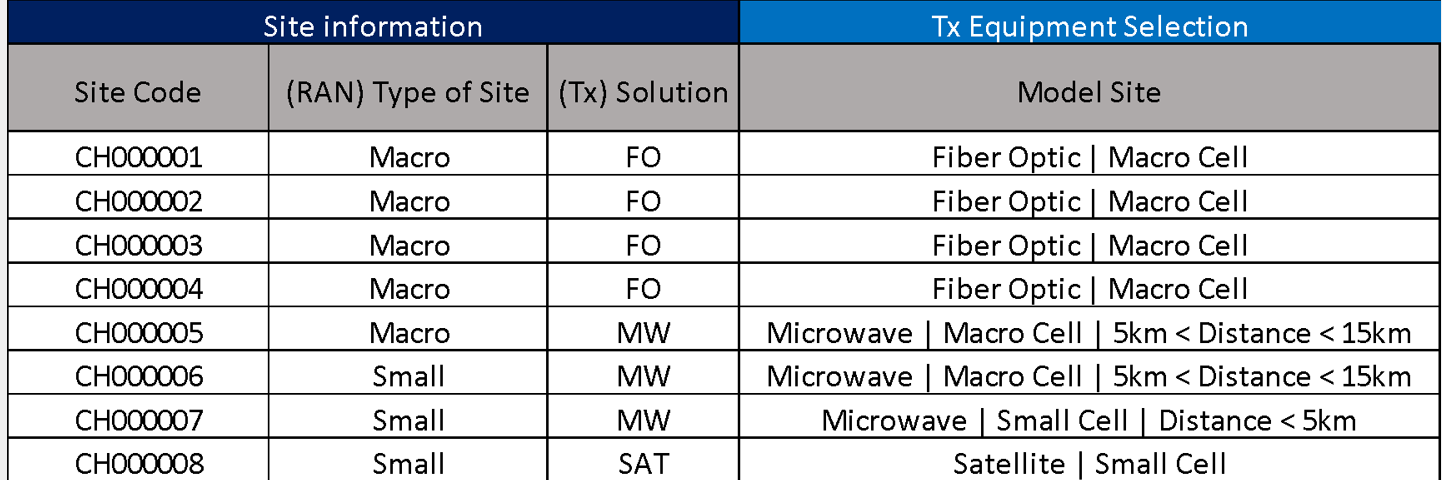

4.2.2 Model Site Catalogue Definition

The aim of the Model Site Catalogue Definition is to standardize and document the possible Tx site equipment configurations to be implemented. This constrains the design options, which simplifies the overall process.

4.2.2.1 Model Site Granularity Definition

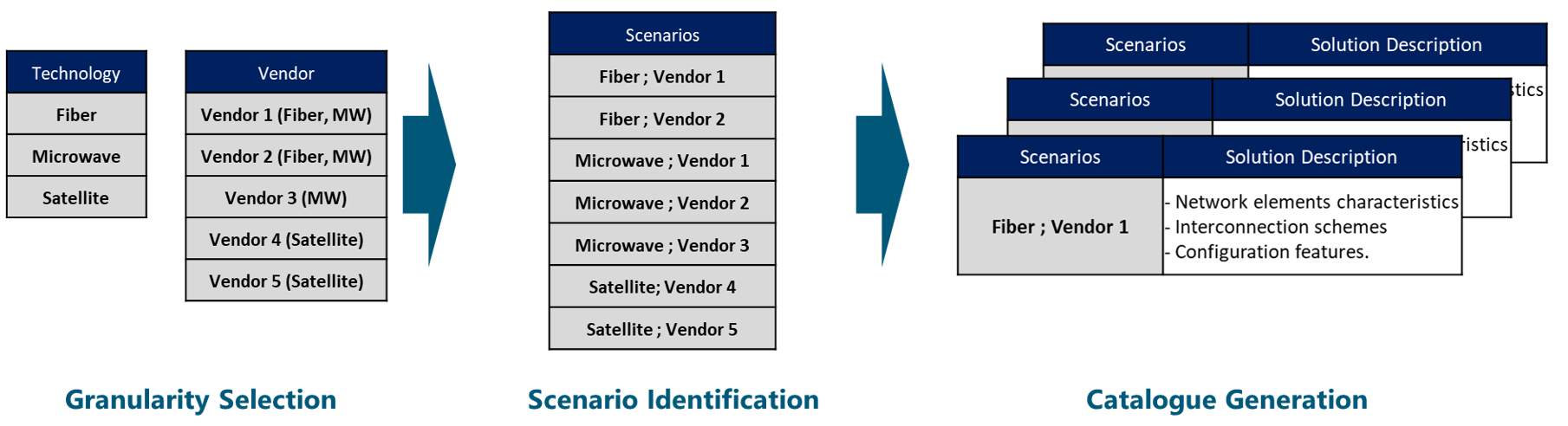

In order to create the catalogue, the granularity to be used must be defined. Granularity is the level of detail present in the scenarios included in the catalogue and will dictate the different variations to be included in the catalogue. The possible alternatives to be used to establish the granularity are:

NaaS operators should select the level of granularity that best suits their requirements. However, it is highly recommended to choose a high-level (coarse) granularity in order to minimize the number of possible alternatives and simplify the design process.

4.2.2.2 Model Site Catalogue Generation

Based on the selected granularity, all possible scenarios are identified. Then, a high-level description of the solution for each scenario is written. The following characteristics must be identified for each scenario:

Figure 16 displays a brief example of the Model Site Catalogue Generation, using Available Transport Technologies and Tx Equipment Vendor as the granularity. NaaS operators can take this example to customize their own catalogue generation process.

Figure 16. Example of Model Site Catalogue Generation
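Because every granularity dimension multiplies the number of scenarios, it can help to enumerate the catalogue mechanically before describing each scenario. The sketch below builds every combination of the two dimensions used in Figure 16 (available transport technology and Tx equipment vendor); the `MS-` scenario ID format and the dimension values are hypothetical.

```python
from itertools import product


def build_catalogue(**dimensions):
    """One catalogue entry per combination of the granularity dimensions."""
    names = list(dimensions)
    catalogue = []
    for index, combo in enumerate(product(*dimensions.values()), start=1):
        entry = {"scenario_id": f"MS-{index:02d}"}  # Hypothetical ID format
        entry.update(zip(names, combo))
        catalogue.append(entry)
    return catalogue


catalogue = build_catalogue(
    transport_technology=["Fiber Optic", "Microwave", "Satellite"],
    vendor=["Vendor A", "Vendor B"],
)
print(len(catalogue))  # 6
print(catalogue[0])
# {'scenario_id': 'MS-01', 'transport_technology': 'Fiber Optic', 'vendor': 'Vendor A'}
```

Adding a third dimension with two values would double the catalogue to 12 entries, which is why keeping the granularity coarse keeps the catalogue manageable.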

The NaaS Operator can use the Model Site Catalogue Template as a base to create its own version.

Design Example

To generate the Model Site Catalogue standard configurations for the transport equipment in the Design Example, the following scenarios are identified:

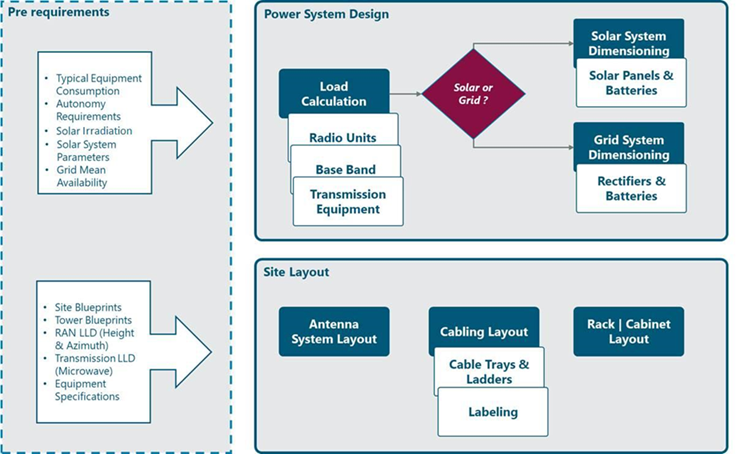

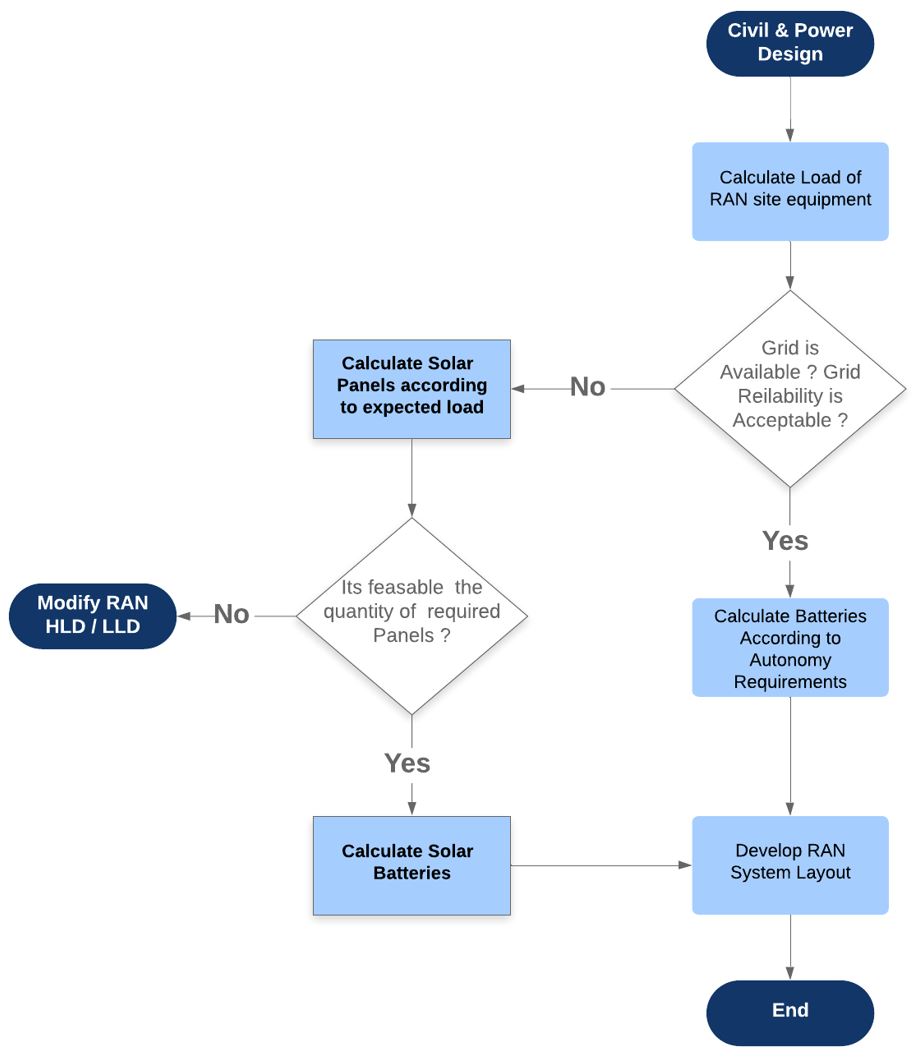

Table 11. Model Site Catalogue Scenarios for Design Example.